Create a Decoupled Backend Architecture Using Lambda, SQS and DynamoDB

Introduction

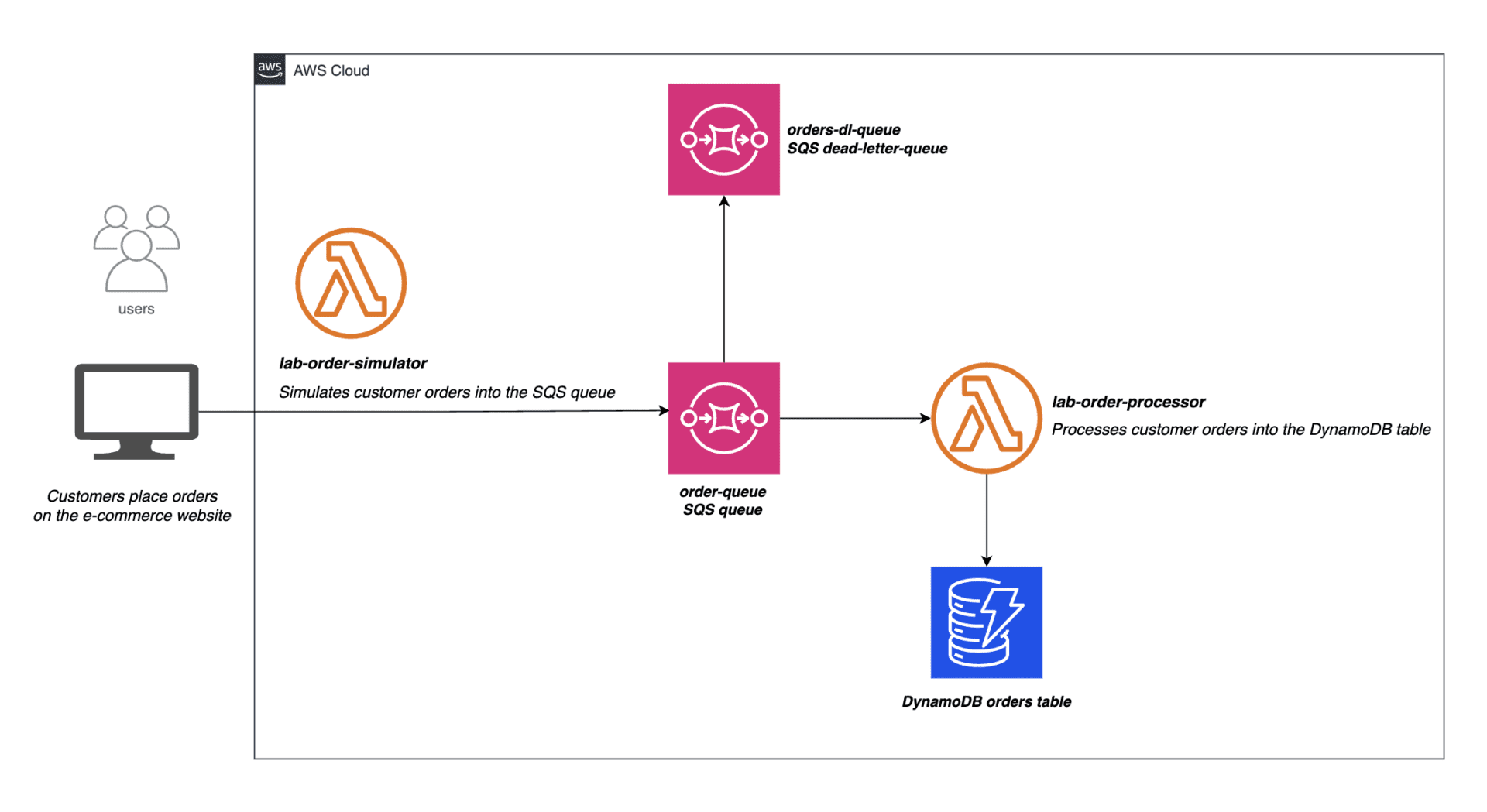

As part of a hands-on lab module, I implemented a decoupled backend architecture using AWS serverless components like Lambda, SQS and DynamoDB. The lab simulated an e-commerce order processing workflow under peak load to teach concepts around building resilient cloud-based systems.

The goal was to maximize reliability and scalability in the context of this simulated scenario where extreme spikes were expected during holiday sales seasons. By refactoring into a queue-driven decentralized design, I learned how these architectures can adapt better to rapid surges in traffic.

In the following sections, I demonstrate the workflow developed to ingest orders via an SQS queue, process them through a Lambda function, and store them in a DynamoDB table. This hands-on experience helped me upgrade my skills in architecting distributed solutions for variable workloads.

Objectives

- Create SQS standard queue

- Develop Lambda function to process orders into DynamoDB table

- Configure SQS Event Source to trigger the Lambda function

- Demonstrate decoupled backend architecture

You will need to create an SQS standard queue to process the customer orders captured on the e-commerce website. You are also required to create a dead-letter queue so that you don't lose any orders that have missing or badly formatted data. Dead-letter queues are important because they provide a way to reprocess failed orders and debug application issues.

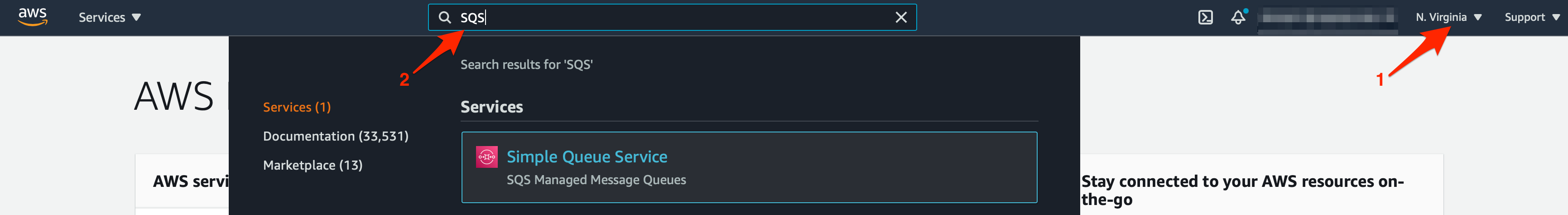

1. Make sure that you are in the N. Virginia AWS Region on the AWS Management Console. Enter SQS in the search bar and select Simple Queue Service.

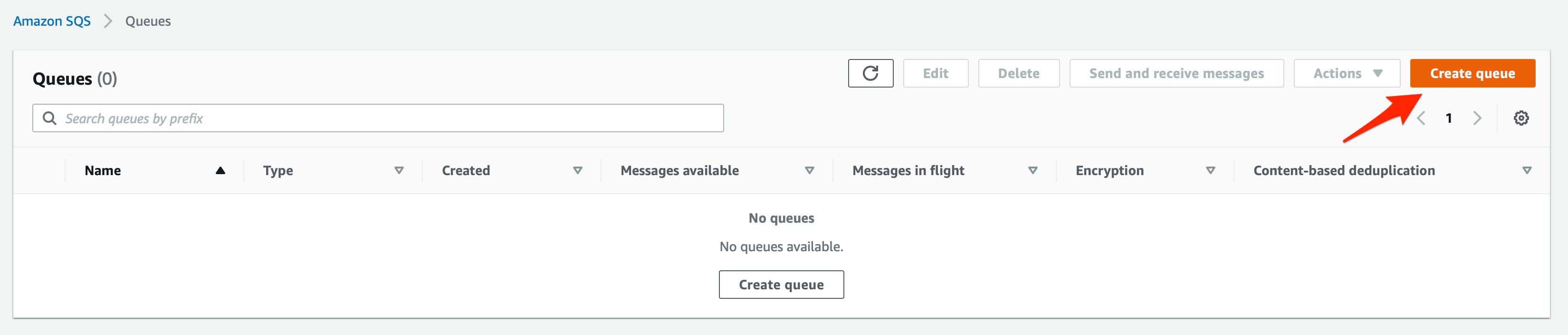

2. Click Create queue

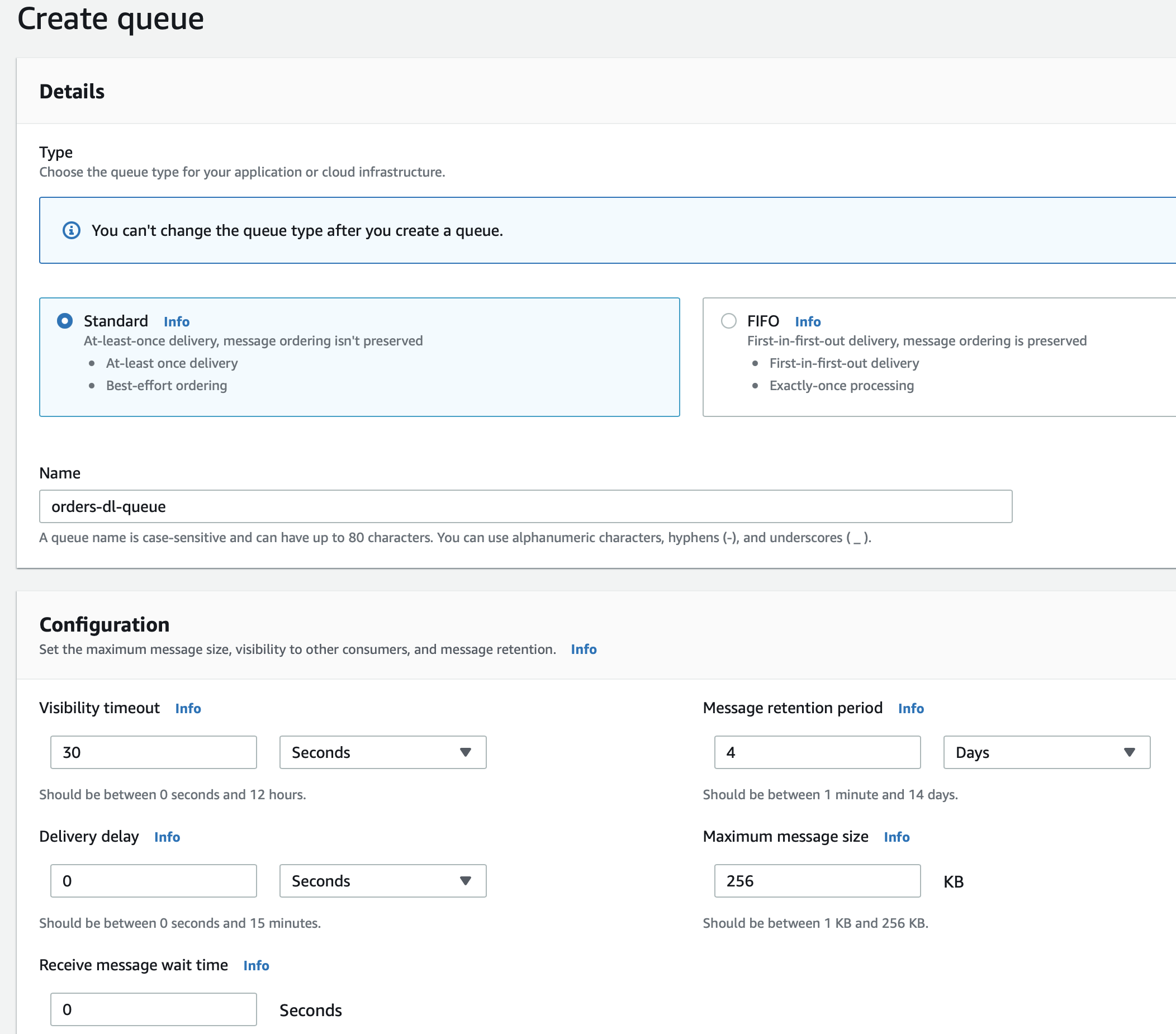

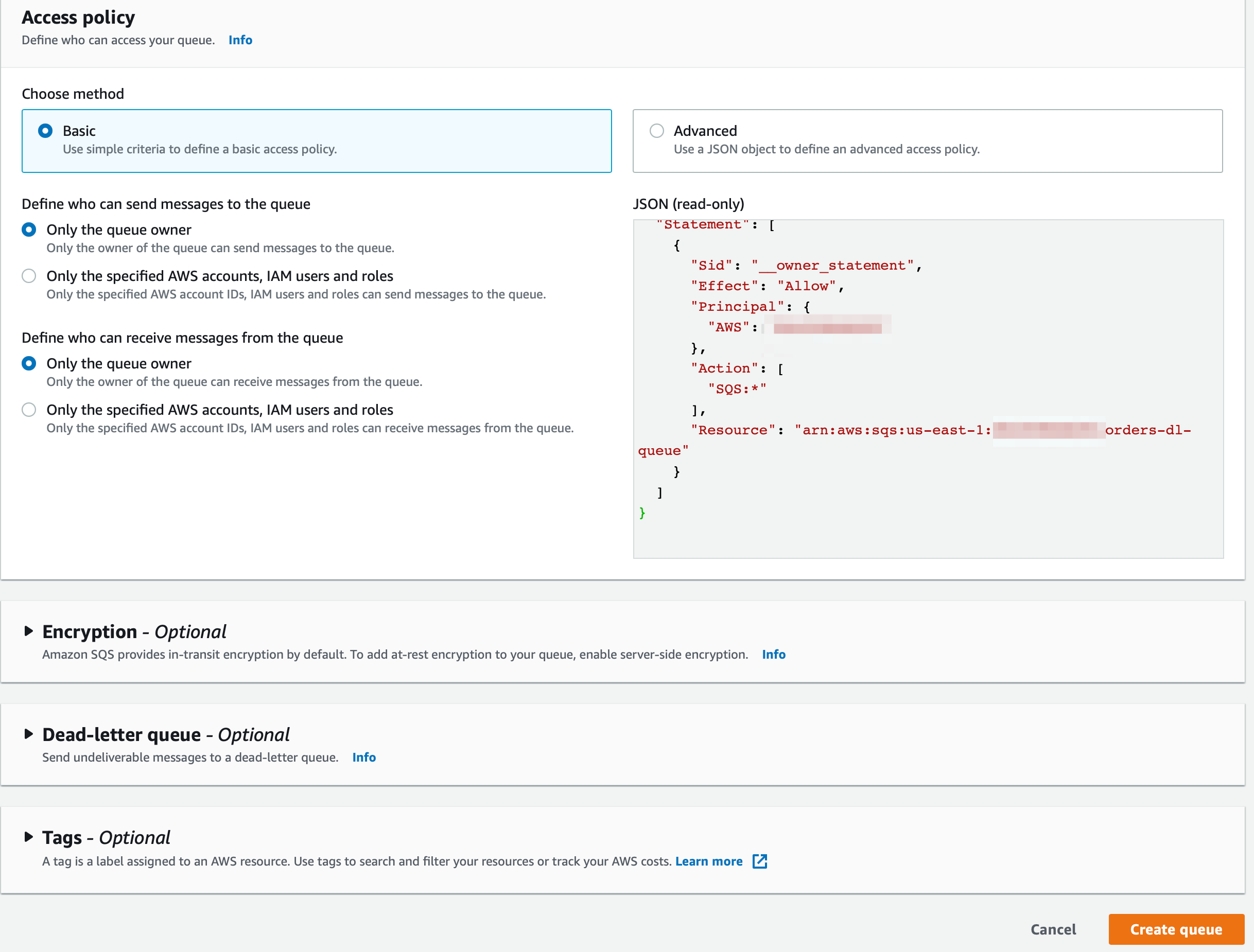

3. Let's create an SQS standard queue to use as the dead-letter queue, so that we don't lose any orders that have missing or badly formatted data. You can create this queue by filling in the values as shown below:

Select the Standard type

Enter queue name as orders-dl-queue

Leave the default values unchanged for the Configuration, Access policy, Encryption, Dead-letter queue and Tags sections. Then click on Create queue to set up the queue.

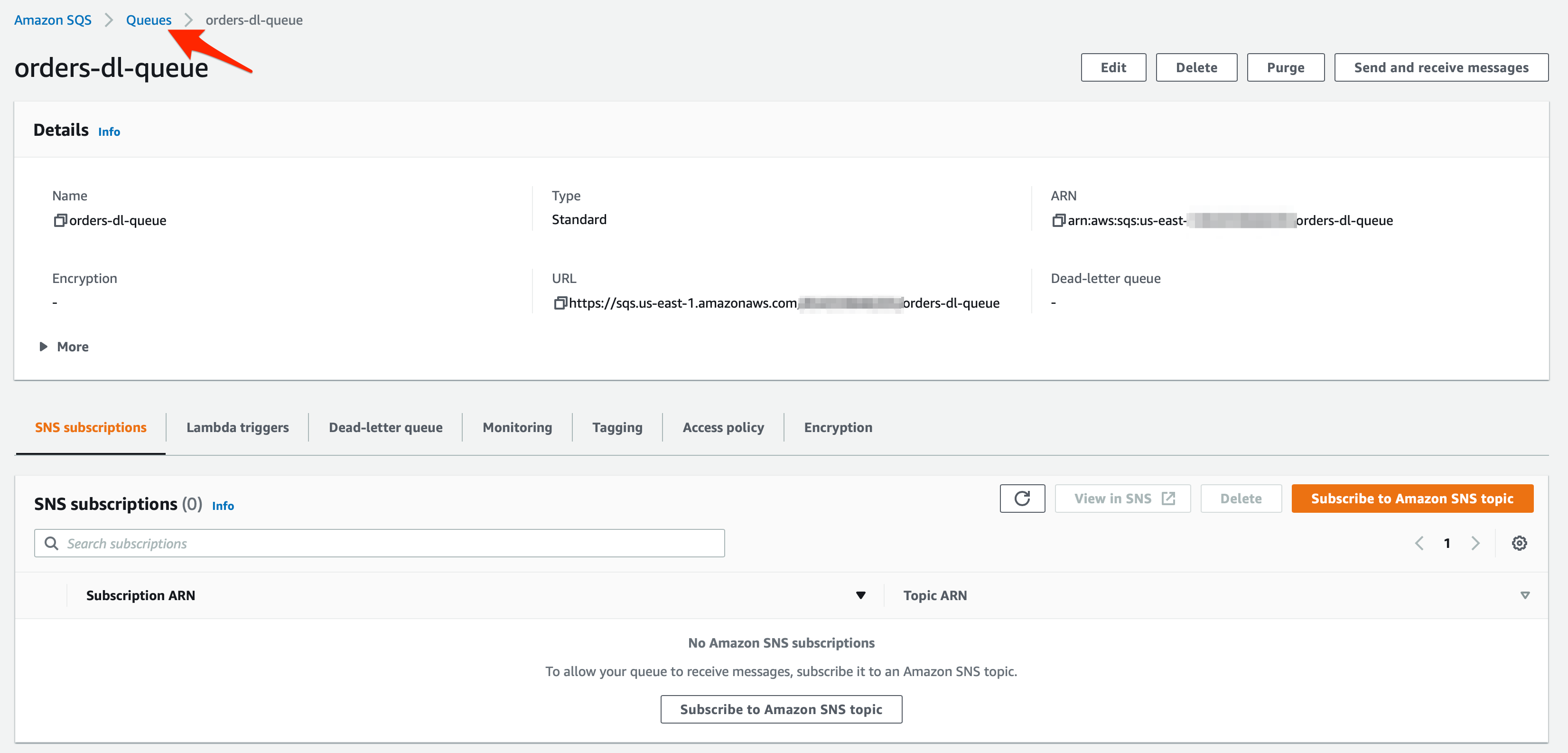

4. You should see that your orders-dl-queue has been successfully created. Now, let’s create the SQS standard queue to process the customer orders. Click on the Queues link shown below to go back and then click on the Create queue button.

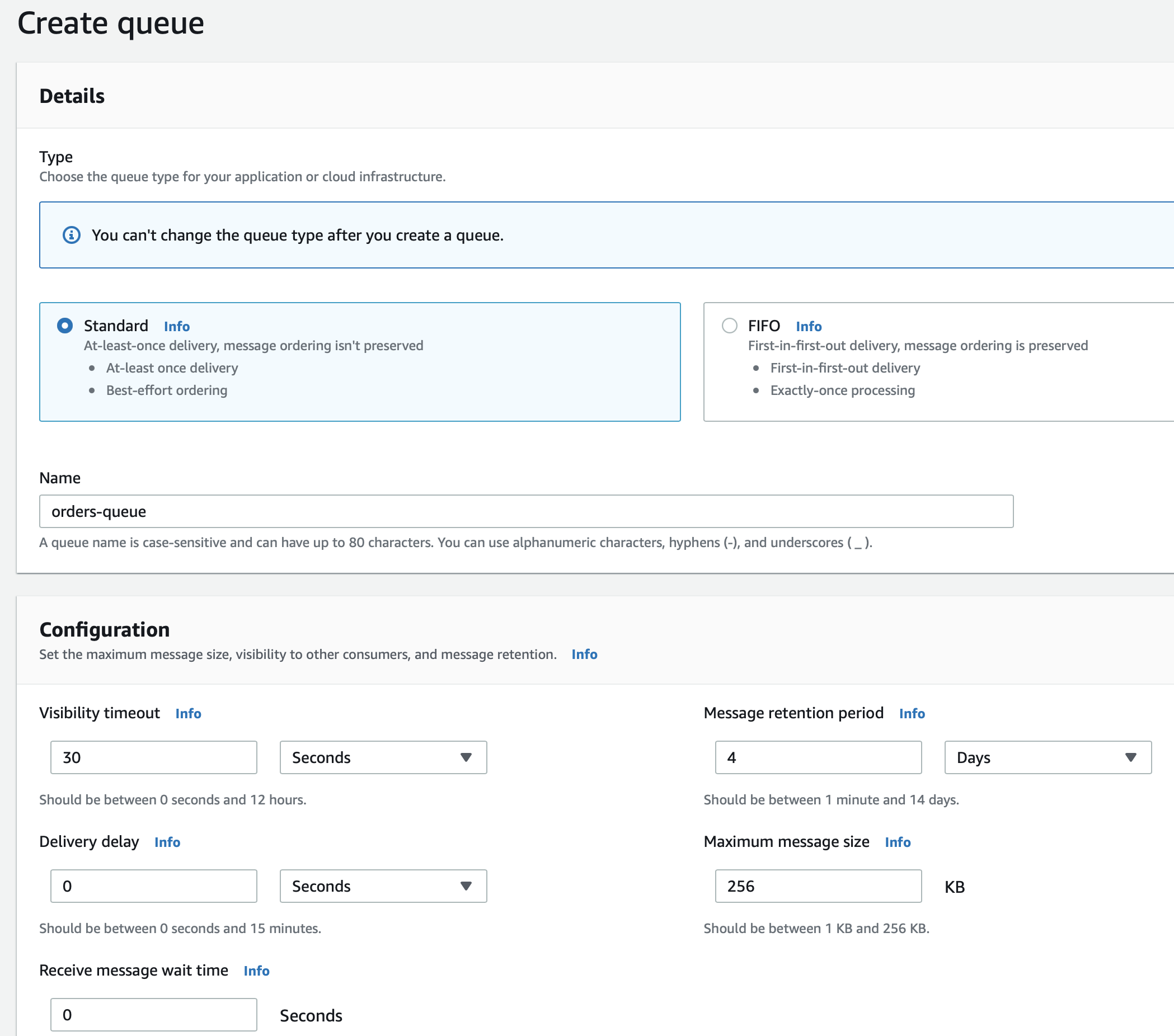

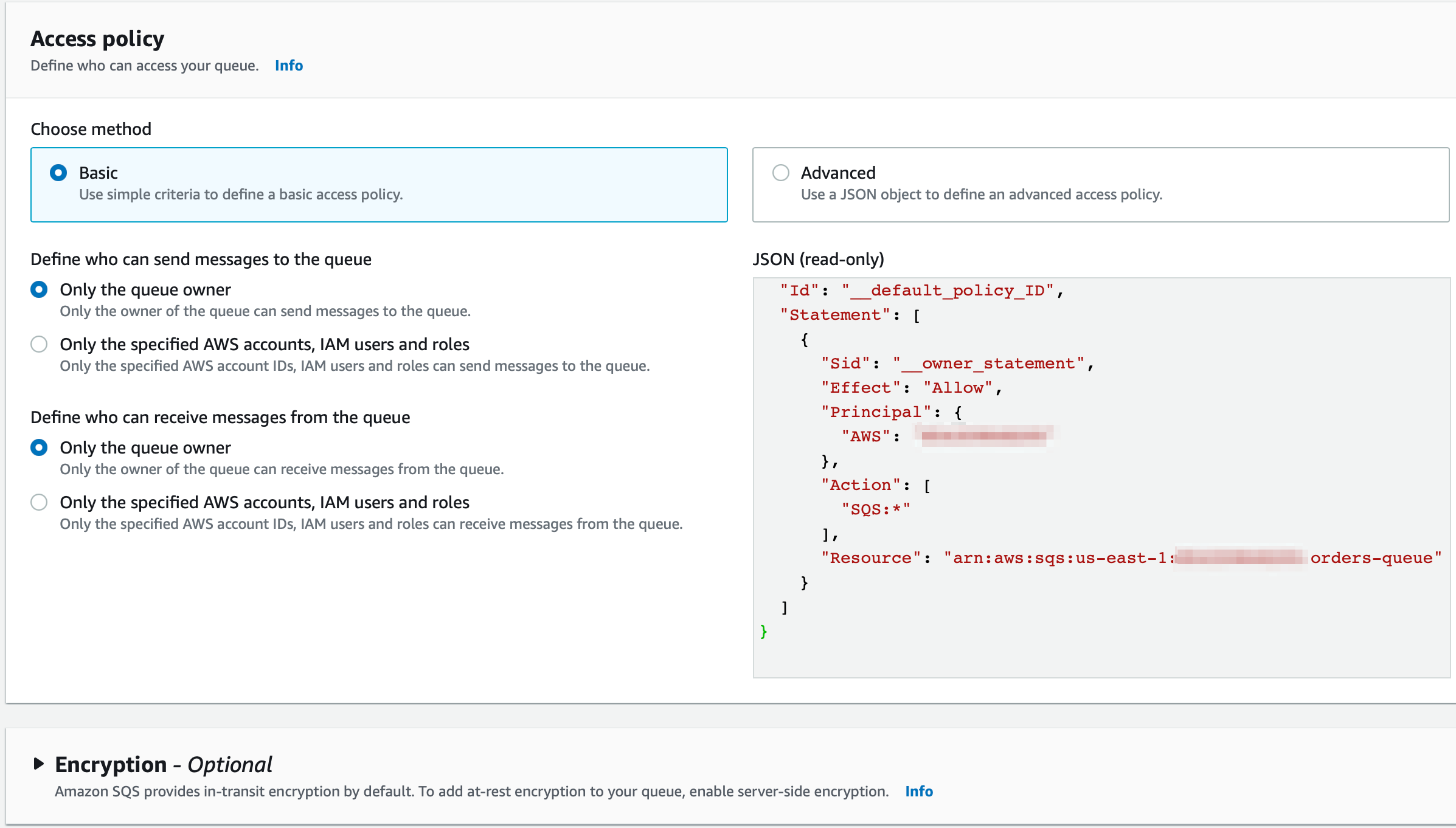

5. Let’s fill in the values for the customer orders queue as shown below:

Select the Standard type

Enter queue name as orders-queue

Leave the default values unchanged for the Configuration, Access policy, Encryption and Tags sections.

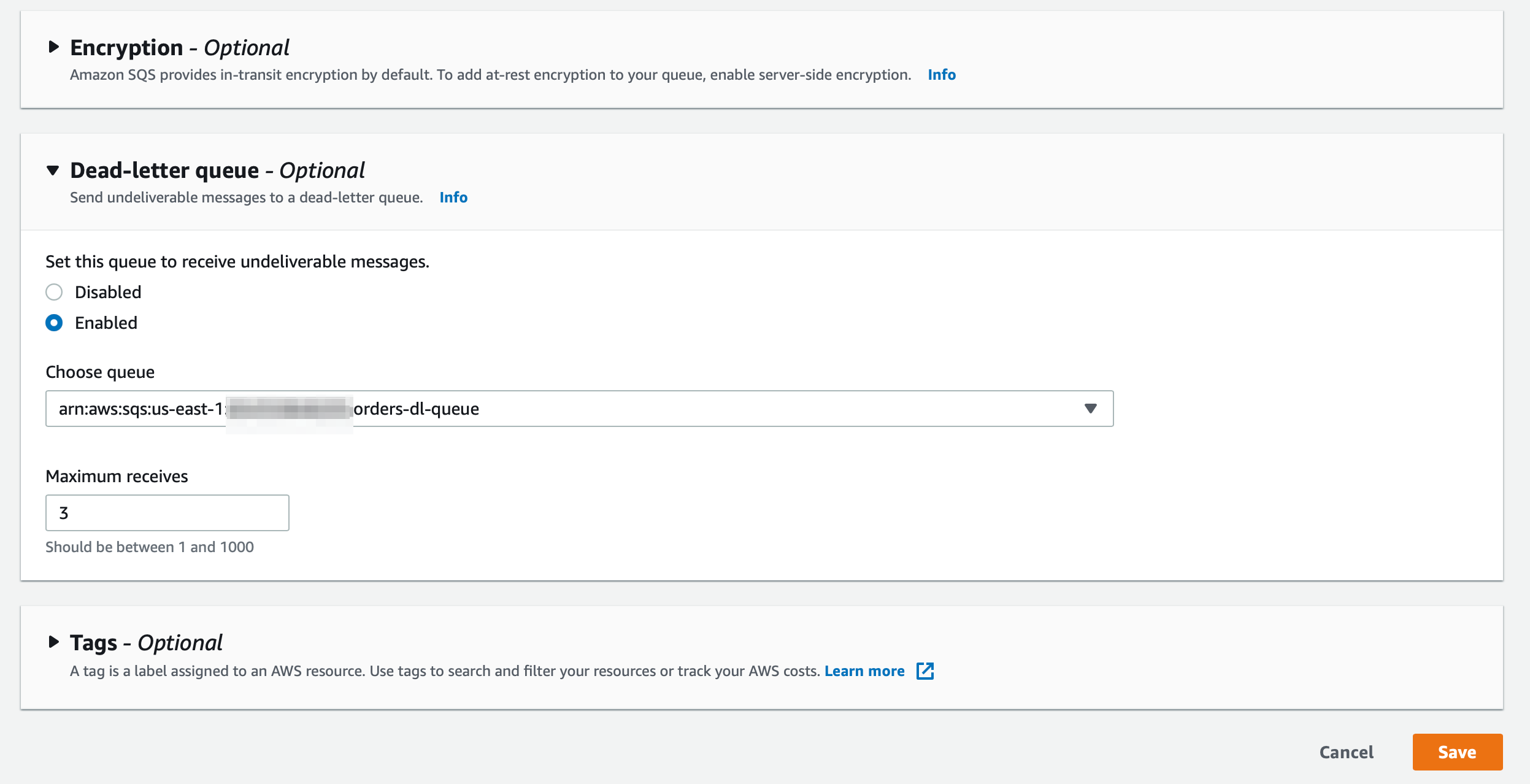

- In the Dead-letter queue section, enable the option to receive undeliverable messages and select the orders-dl-queue queue that you created in Step 3. Note that Maximum receives is set to 3, which means a message moves to the dead-letter queue after it has been received three times without being processed successfully. Then click on the Create queue button.

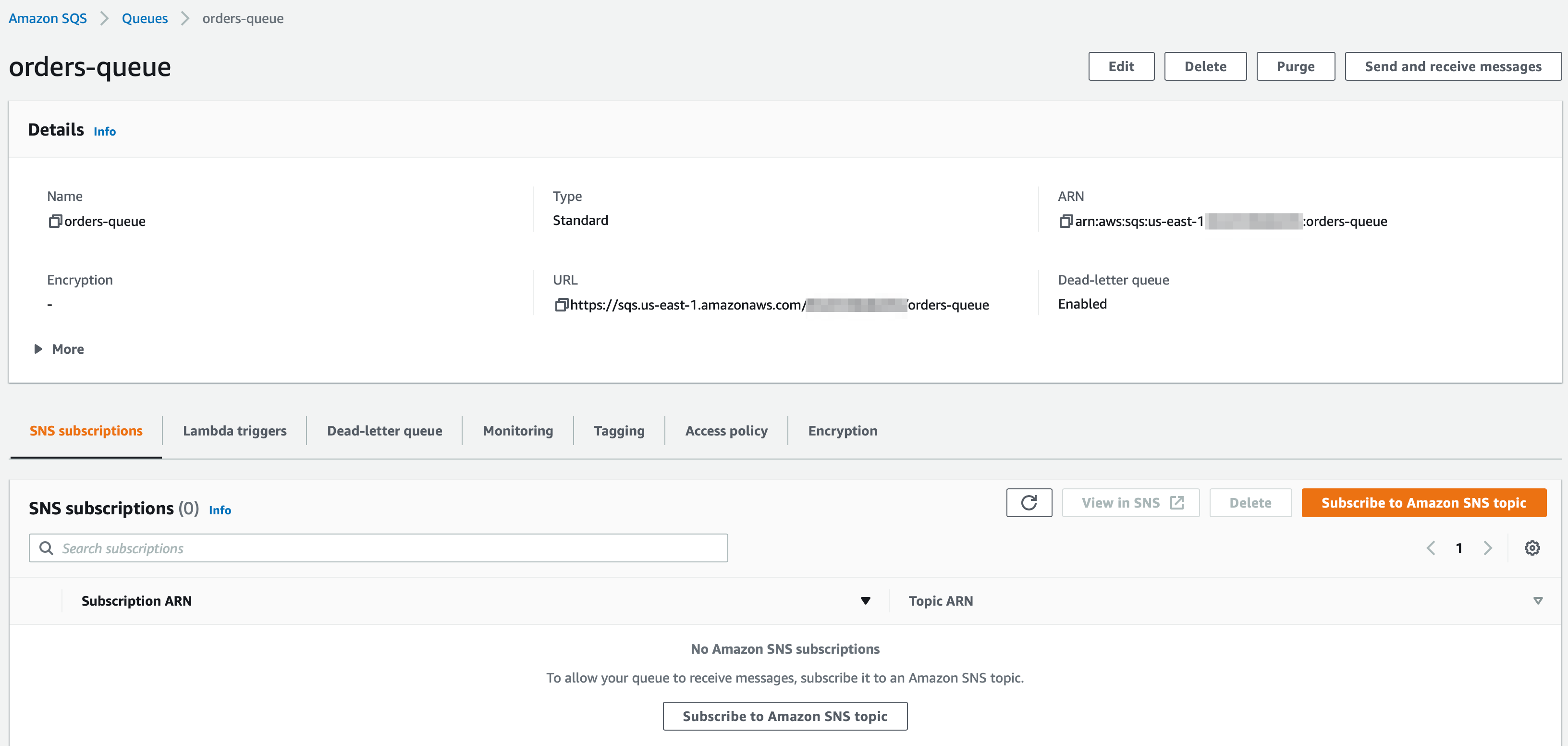

6. You should see that your orders-queue has been successfully created
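For readers who prefer scripting over the console, the two queues above could be created roughly as follows. This is a minimal sketch, not part of the lab: it assumes a boto3 SQS client (for example `boto3.client("sqs")`) is passed in as `sqs`, and the helper names `redrive_attributes` and `create_order_queues` are my own.

```python
import json


def redrive_attributes(dlq_arn, max_receives=3):
    """Build the queue attributes that point a source queue at its DLQ."""
    policy = {
        "deadLetterTargetArn": dlq_arn,
        # A message is moved to the DLQ after it has been received this many
        # times without being deleted (the console's "Maximum receives").
        "maxReceiveCount": str(max_receives),
    }
    return {"RedrivePolicy": json.dumps(policy)}


def create_order_queues(sqs, main_name="orders-queue", dlq_name="orders-dl-queue"):
    """Create the DLQ first, then the main queue that redrives into it.

    `sqs` is assumed to be a boto3 SQS client; all other settings stay at
    their defaults, as in the console steps.
    """
    dlq_url = sqs.create_queue(QueueName=dlq_name)["QueueUrl"]
    dlq_arn = sqs.get_queue_attributes(
        QueueUrl=dlq_url, AttributeNames=["QueueArn"]
    )["Attributes"]["QueueArn"]
    main_url = sqs.create_queue(
        QueueName=main_name, Attributes=redrive_attributes(dlq_arn)
    )["QueueUrl"]
    return main_url, dlq_url
```

The dead-letter queue has to exist before the main queue is created, because the redrive policy refers to it by ARN. That is the same ordering the console steps follow.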

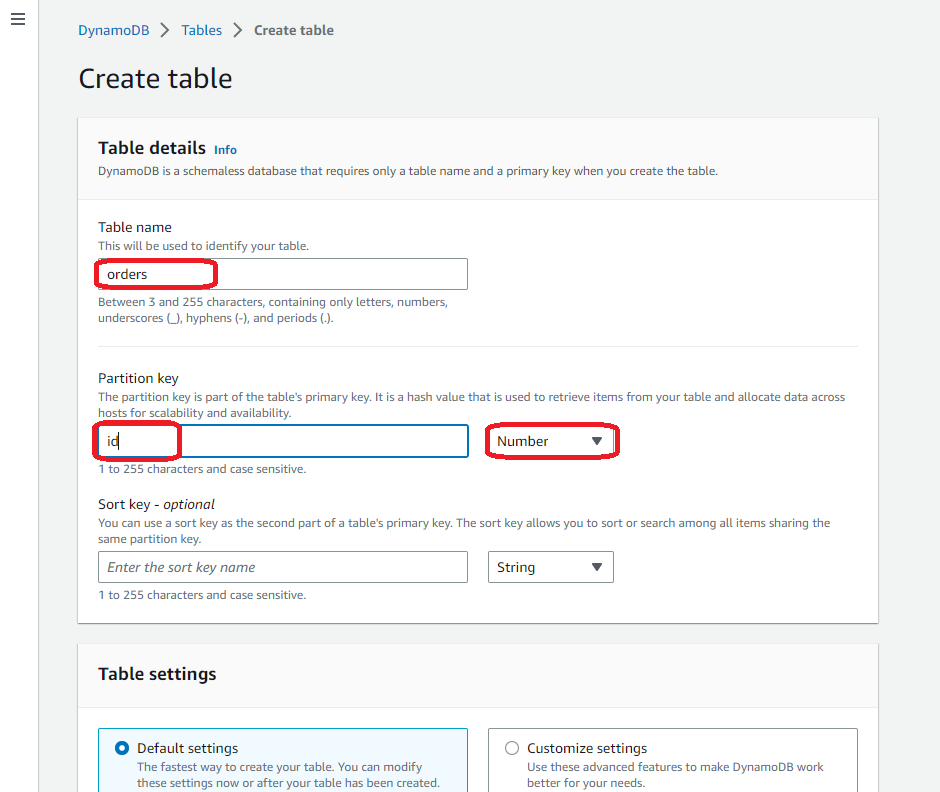

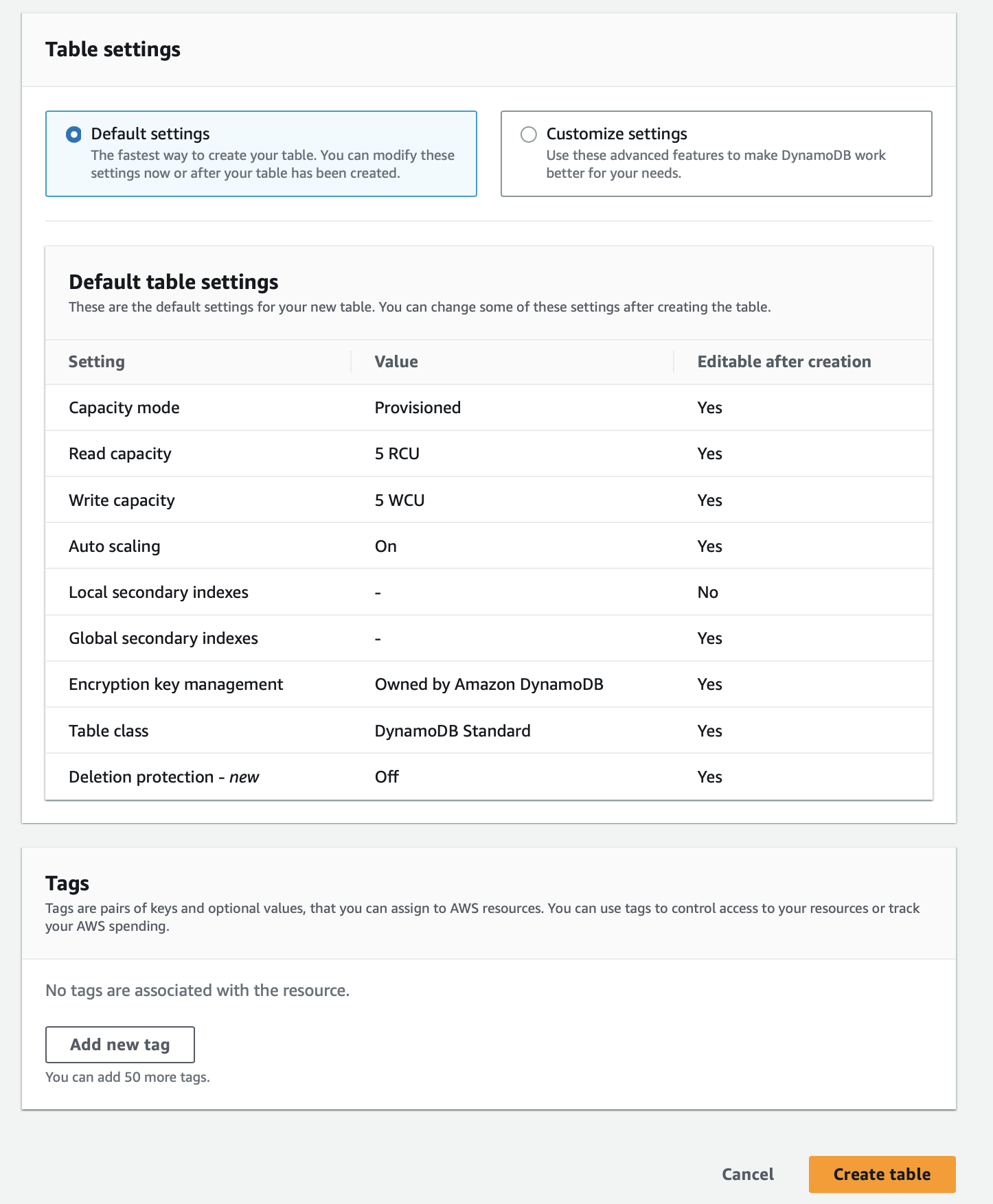

We need to create a DynamoDB table to capture the processed order data. You can use the default settings for creating the table in the provisioned mode.

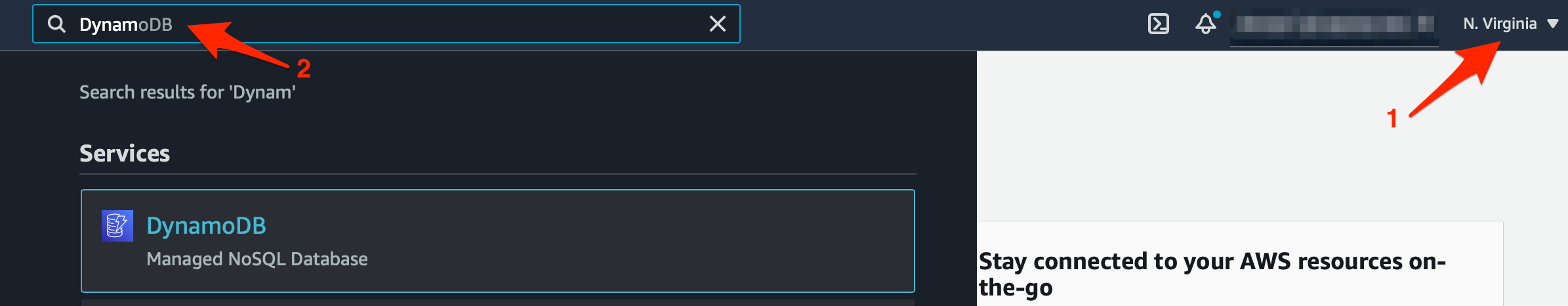

1. Make sure that you are in the N. Virginia AWS Region on the AWS Management Console. Enter DynamoDB in the search bar and select DynamoDB service.

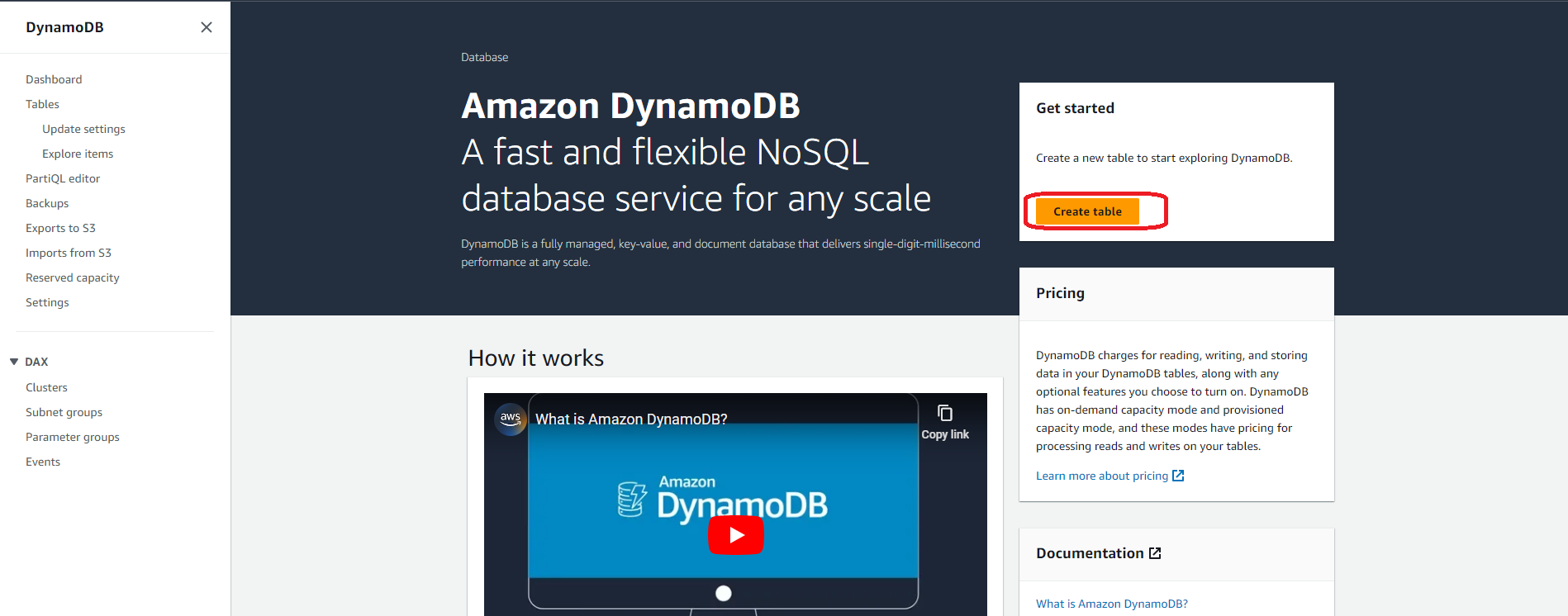

2. Click on the Create table button

3. Enter the required values for the table as follows:

Table name: orders

Primary key: id

Make sure that you select the type as Number for the Primary key id

Select the Default settings option from the Table settings section

- Click Create table button to complete the configuration for the table

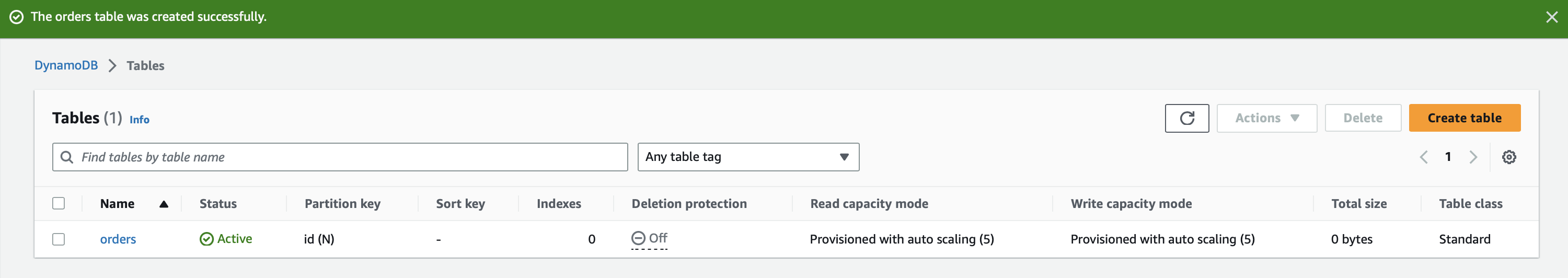

4. You should see that the orders table has been successfully created
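The table settings above map onto a fairly small `create_table` request. The sketch below only illustrates that mapping: the helper names are hypothetical, `dynamodb` is assumed to be a boto3 DynamoDB client, and the capacity of 5 read and 5 write units is my assumption, since the lab only says to keep the console defaults.

```python
def orders_table_definition(table_name="orders", read_units=5, write_units=5):
    """Return keyword arguments for a boto3 DynamoDB create_table call."""
    return {
        "TableName": table_name,
        # "id" is the partition key and is stored as a Number ("N")
        "AttributeDefinitions": [{"AttributeName": "id", "AttributeType": "N"}],
        "KeySchema": [{"AttributeName": "id", "KeyType": "HASH"}],
        # Provisioned mode; the 5/5 capacity values are an assumption
        "ProvisionedThroughput": {
            "ReadCapacityUnits": read_units,
            "WriteCapacityUnits": write_units,
        },
    }


def create_orders_table(dynamodb, **overrides):
    """Create the table using a boto3 DynamoDB client passed in by the caller."""
    return dynamodb.create_table(**orders_table_definition(**overrides))
```

Only the key attribute is declared up front. Other order fields do not need to appear in the table definition, because DynamoDB items are schemaless beyond their key.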

We will need to create a Lambda function that puts customer order messages into the designated SQS queue. These messages will later trigger the order-processing Lambda, which stores the order data in the DynamoDB table.

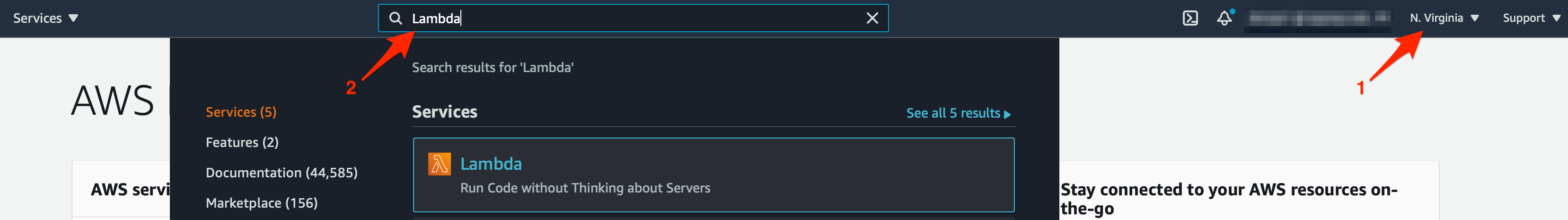

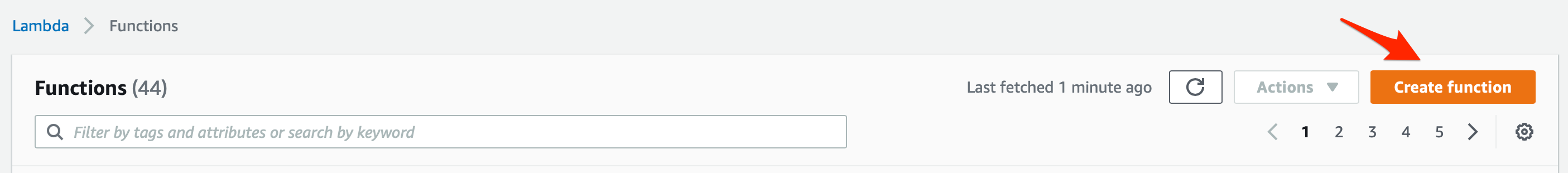

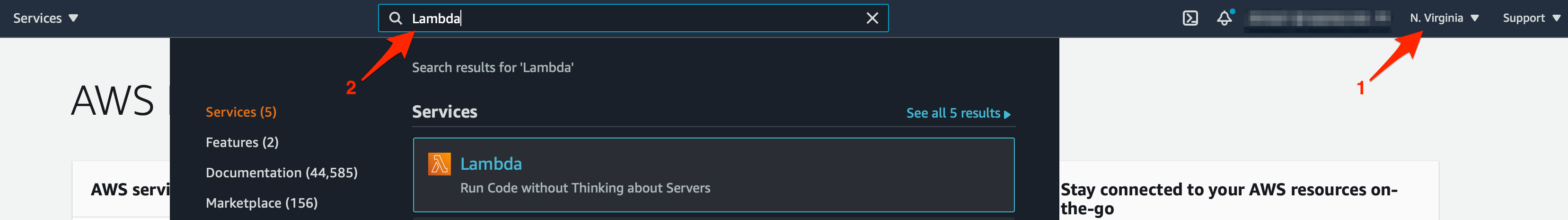

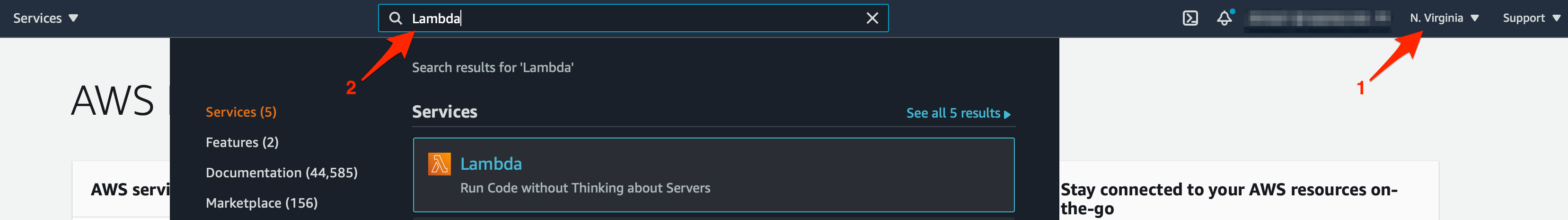

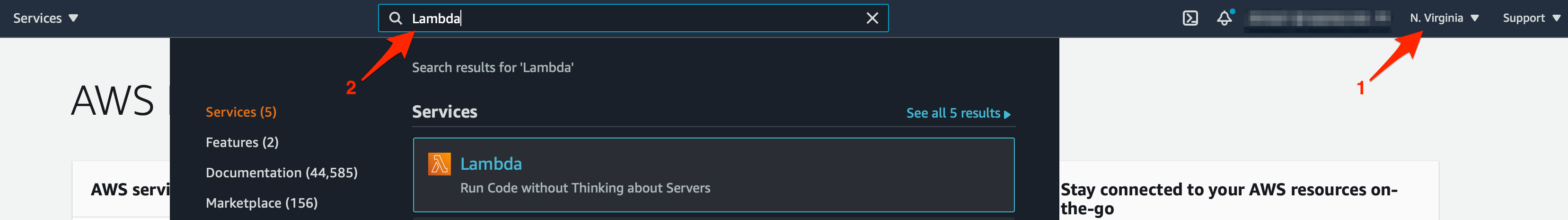

1. Make sure that you are in the N. Virginia AWS Region on the AWS Management Console. Enter Lambda in the search bar and select Lambda service.

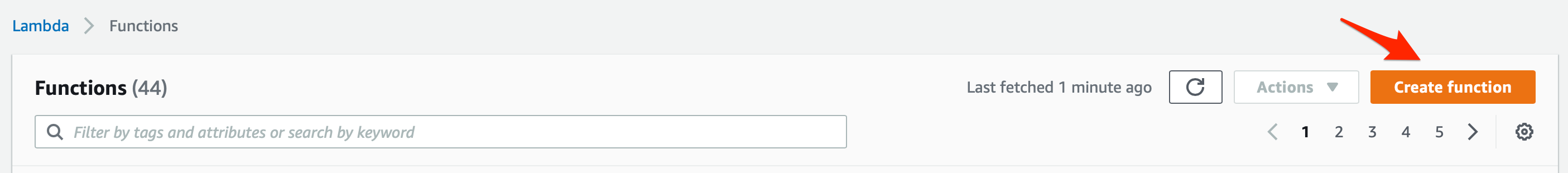

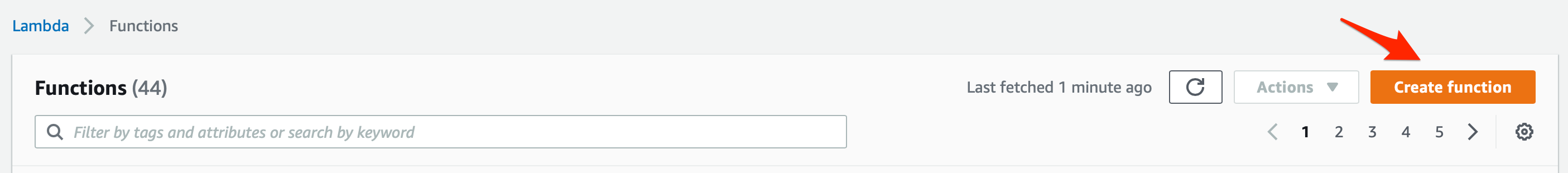

Click on Create function button

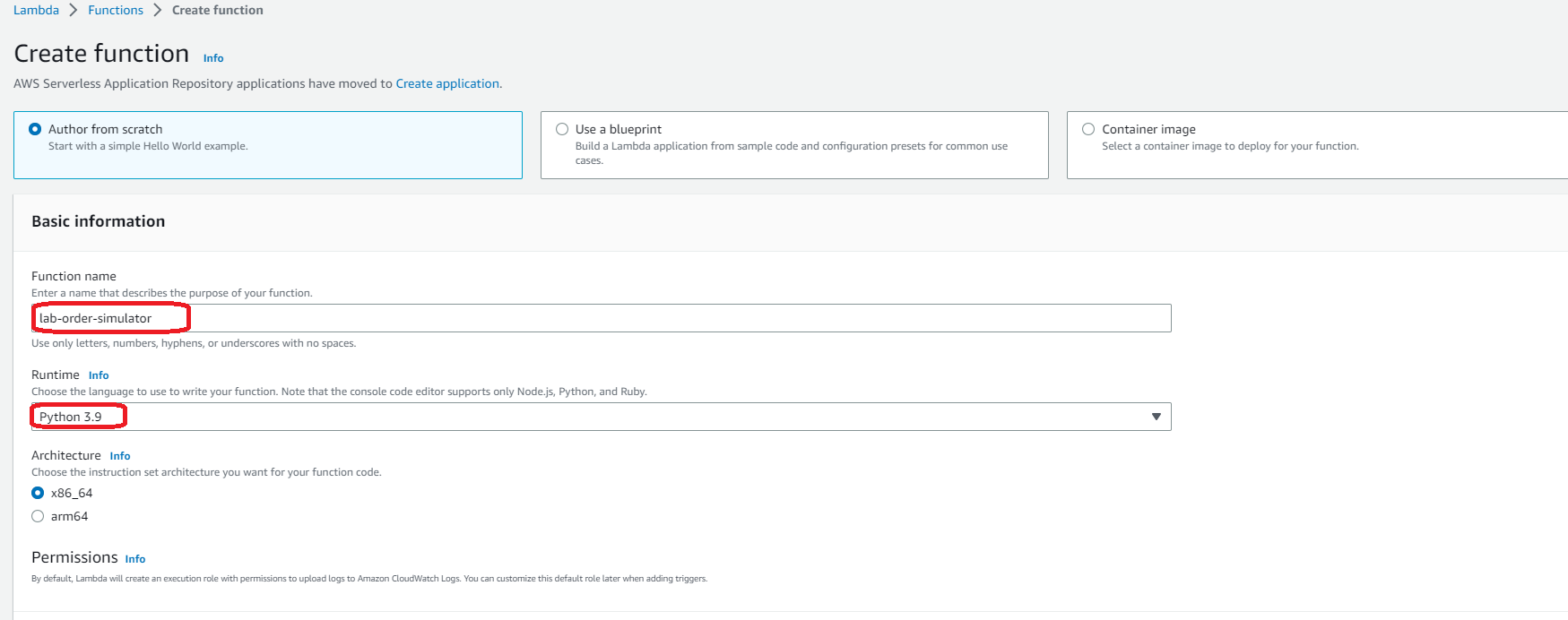

2. Let’s create the Lambda function by filling in the following values:

- Select the Author from scratch template

- Function name: lab-order-simulator

- Runtime: Python 3.9

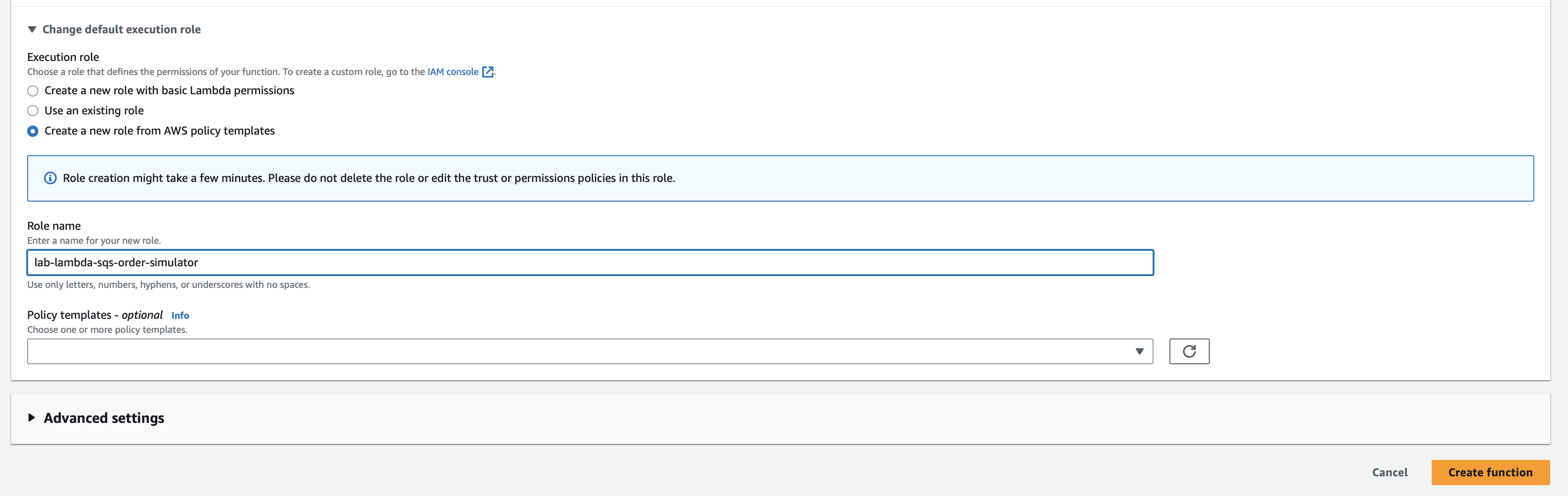

- For execution role, select the option that says Create a new role from AWS policy templates

- Role name: lab-lambda-sqs-order-simulator

- Click Create function to complete this step

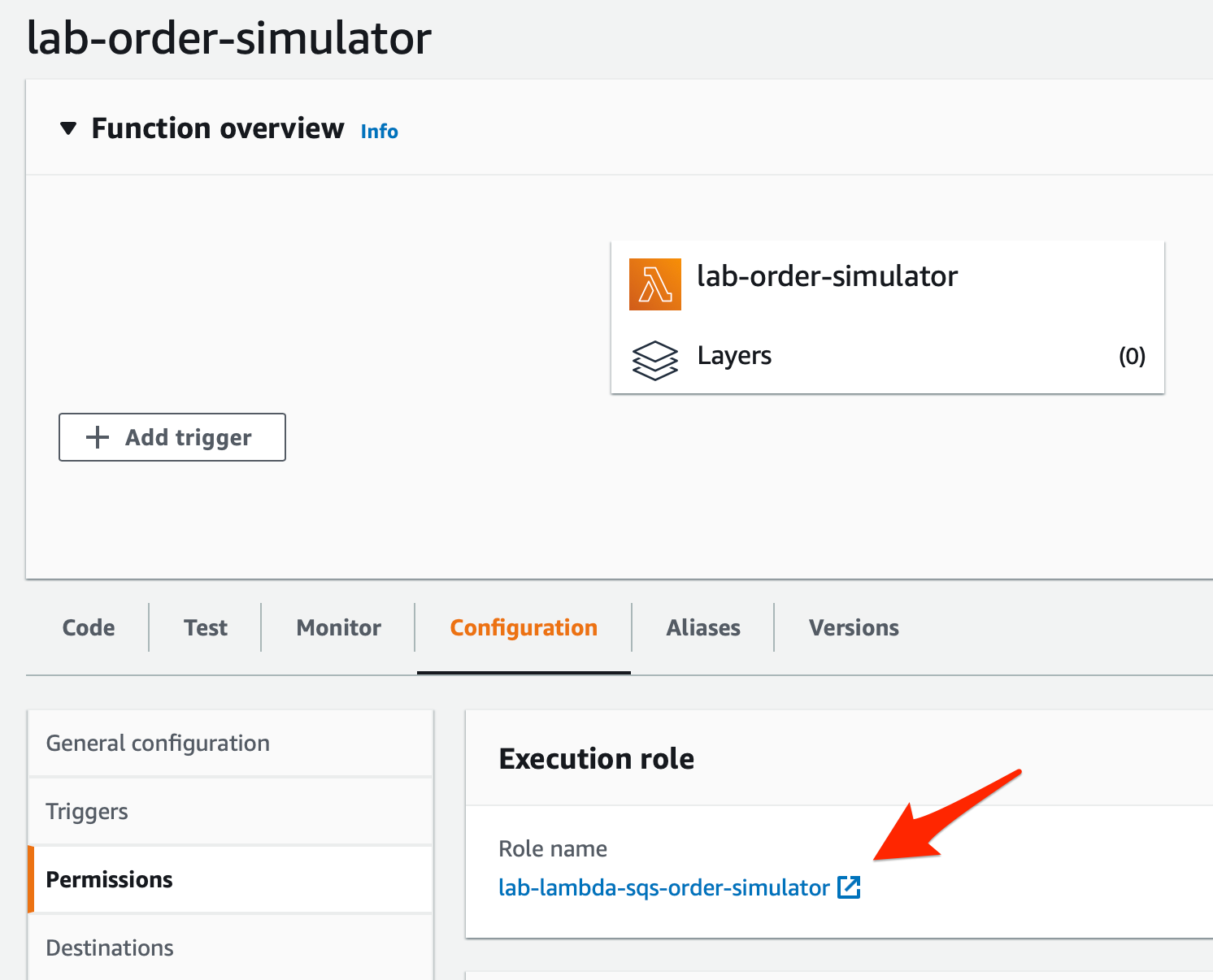

3. Modify the permissions of the Lambda's execution role.

- Select the Configuration tab and click on the Permissions link on the left sidebar. Then, click on the hyperlink for the execution role.

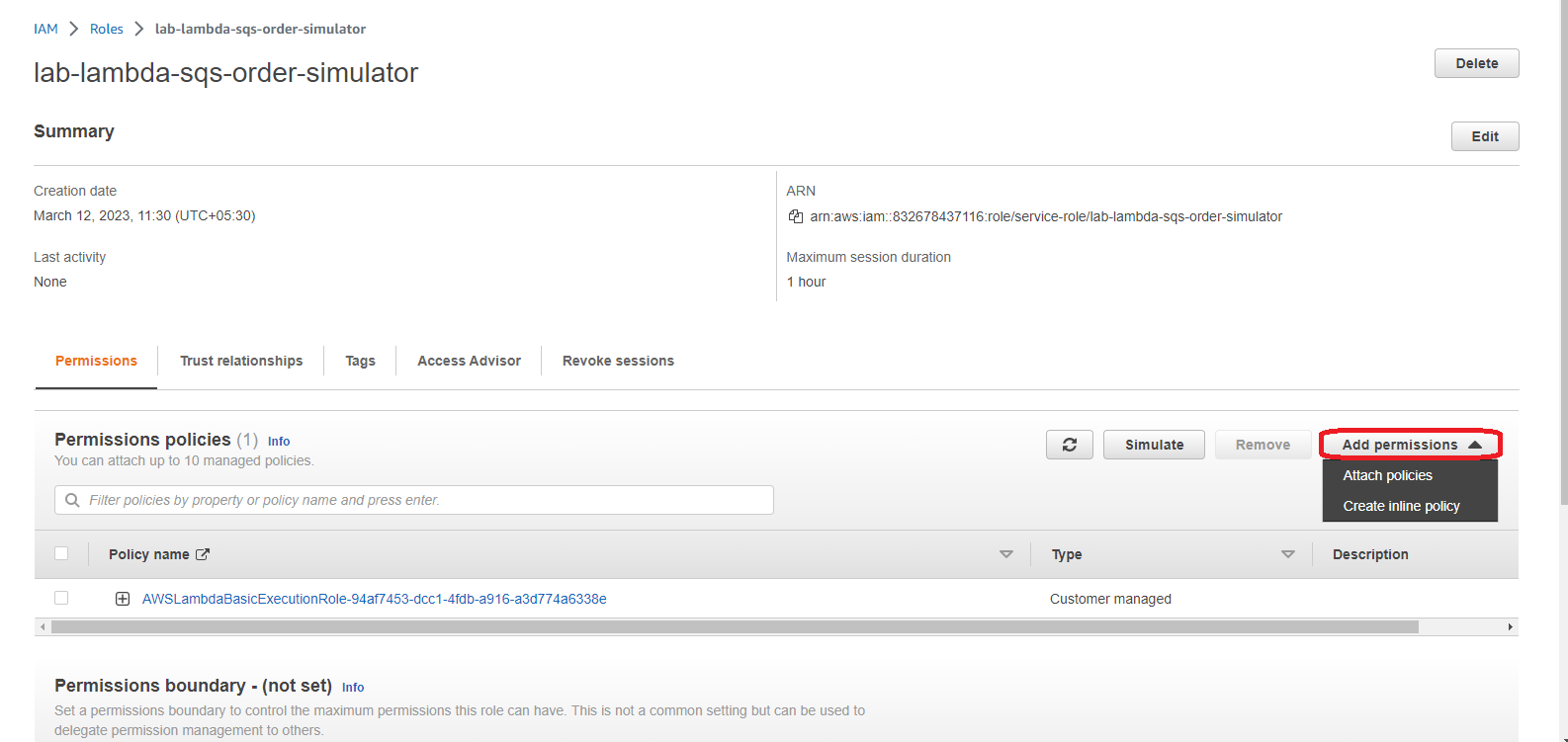

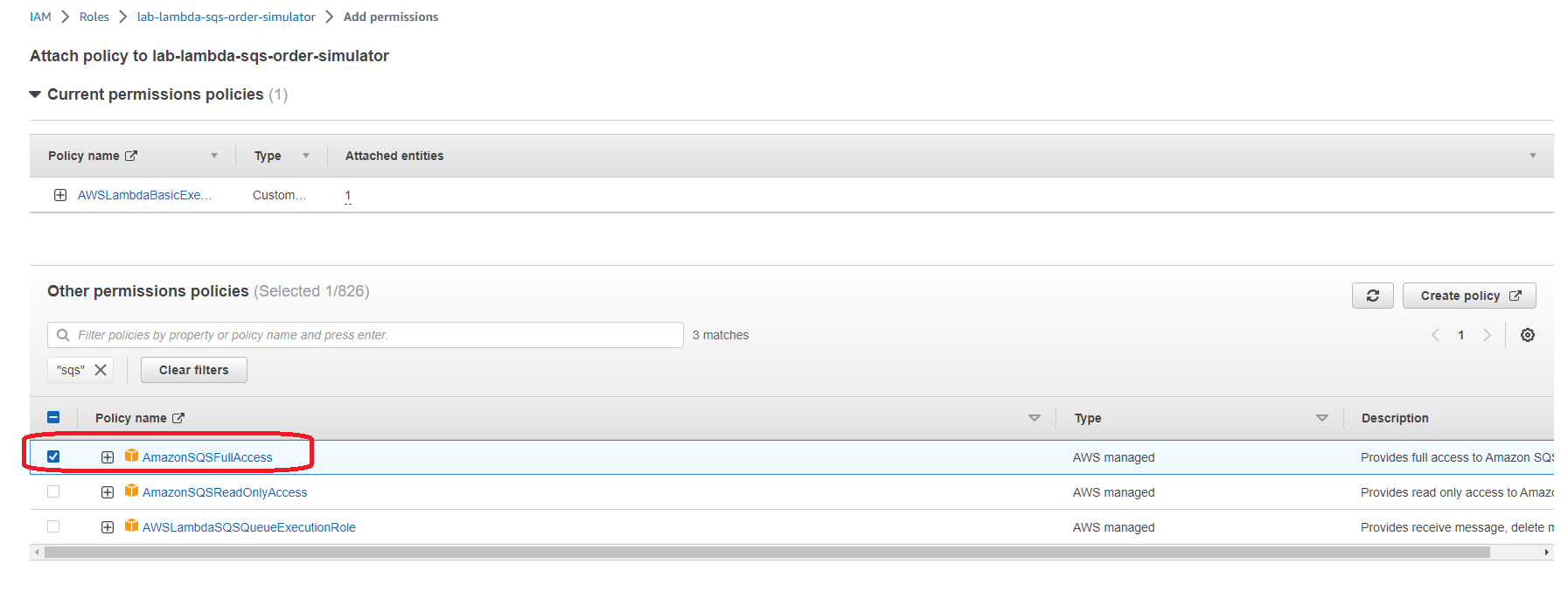

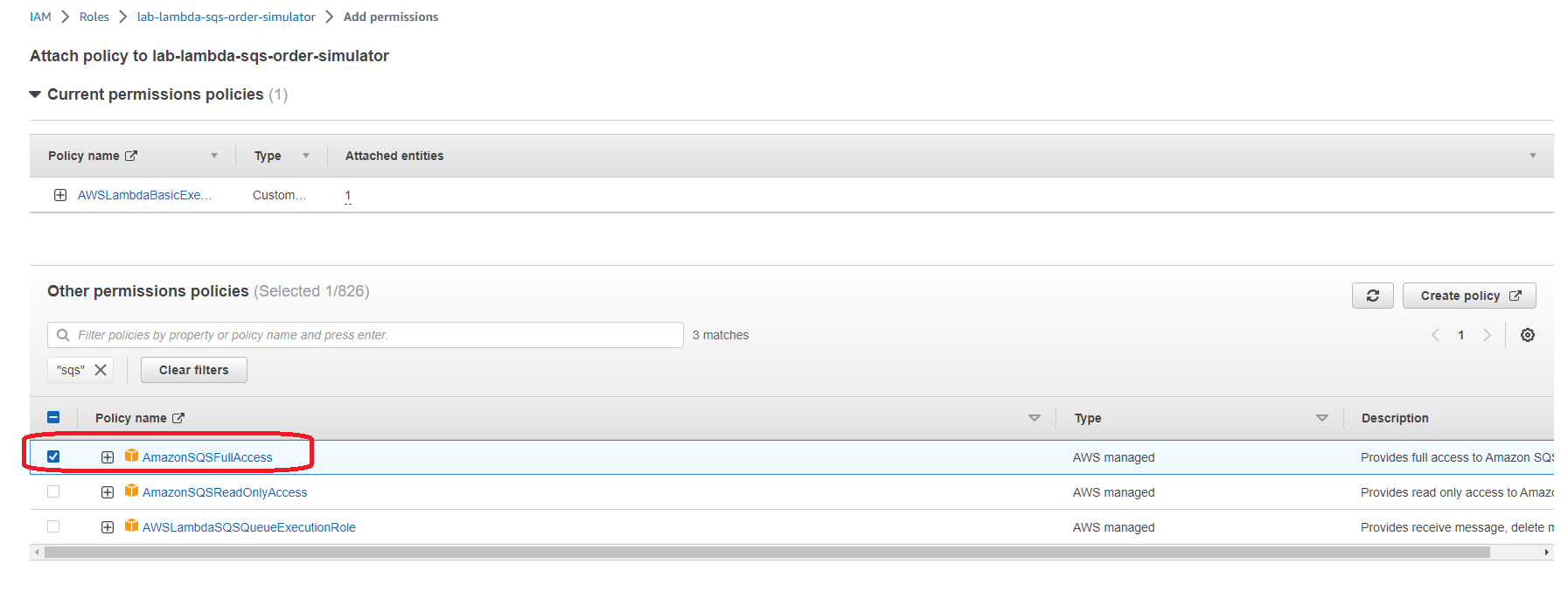

- You will notice that only the AWSLambdaBasicExecutionRole policy is attached to the Lambda in the Permissions tab. Click on the Add permissions button and then select the Attach policies option.

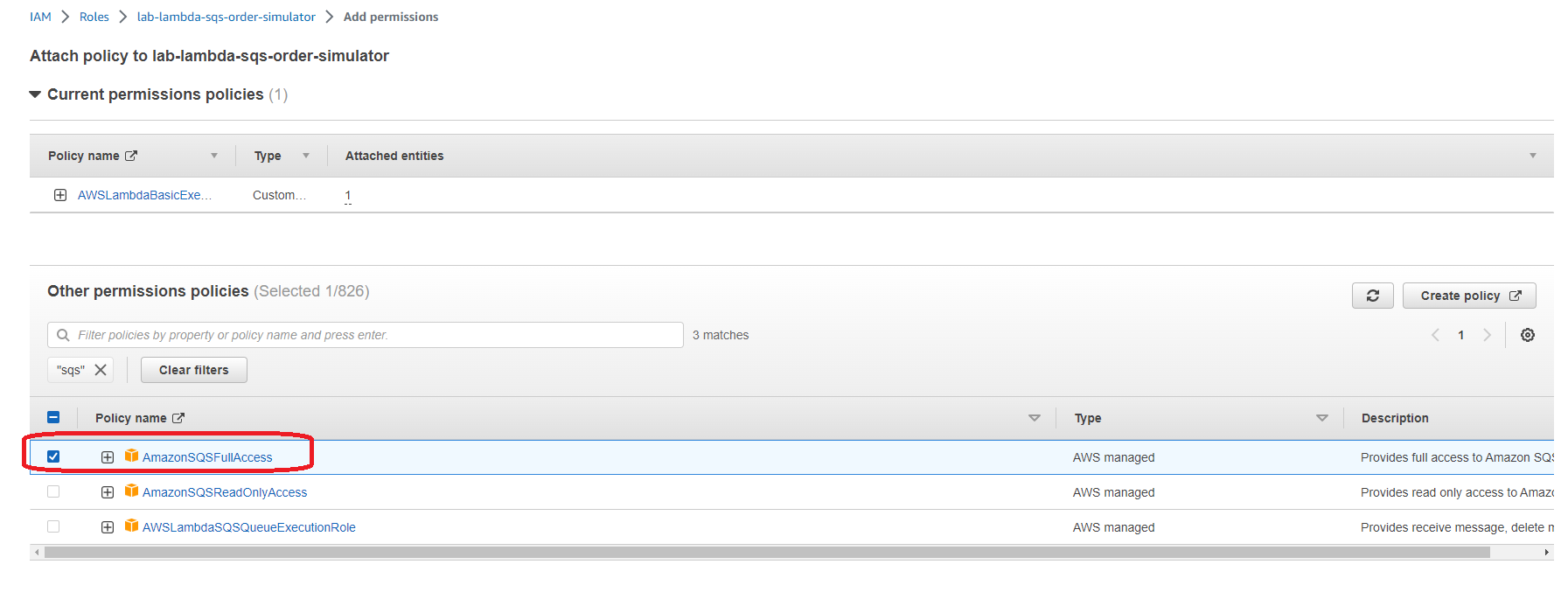

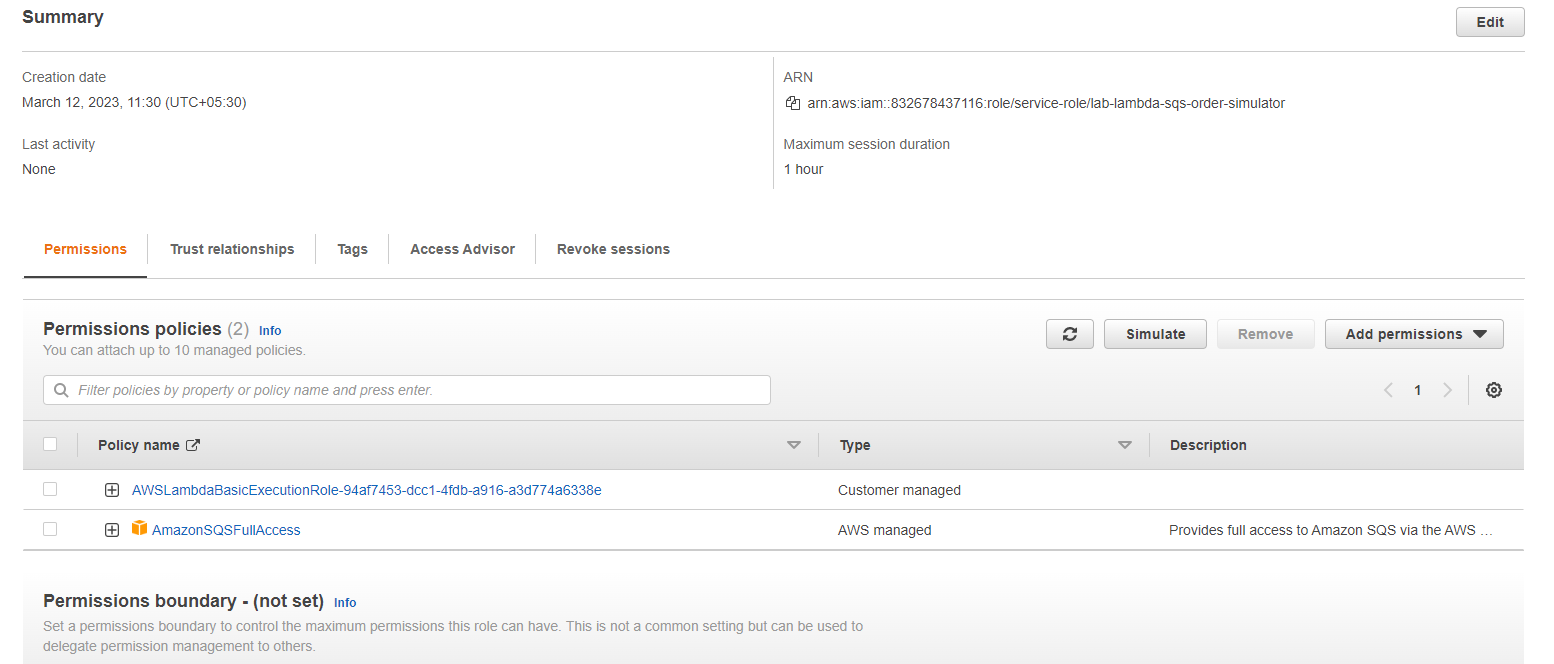

- Enter SQS in the search bar and select the policy AmazonSQSFullAccess for the Lambda so that it can write order messages into the SQS queue. Click on the Attach policy button to add this policy.

- You should see a confirmation message that says that the AmazonSQSFullAccess policy has been attached to the Lambda

4. Configure Lambda to set up the environment variable for the target SQS queue.

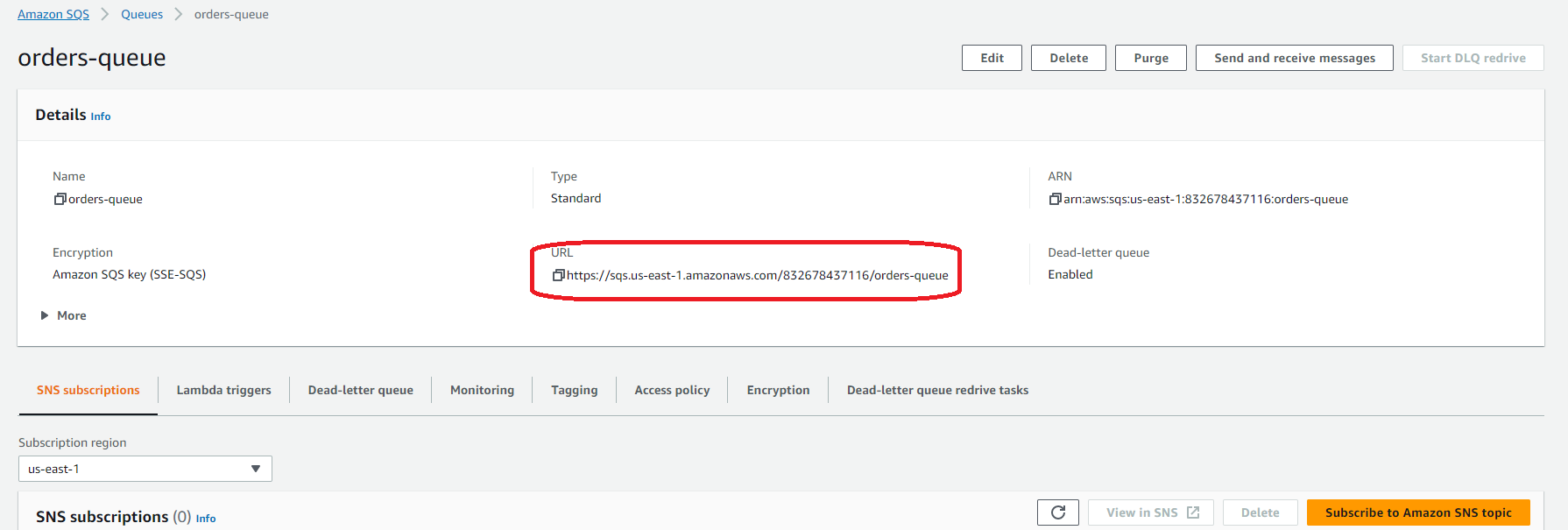

- Go to the SQS queue orders-queue created in Task 1 and copy the queue URL

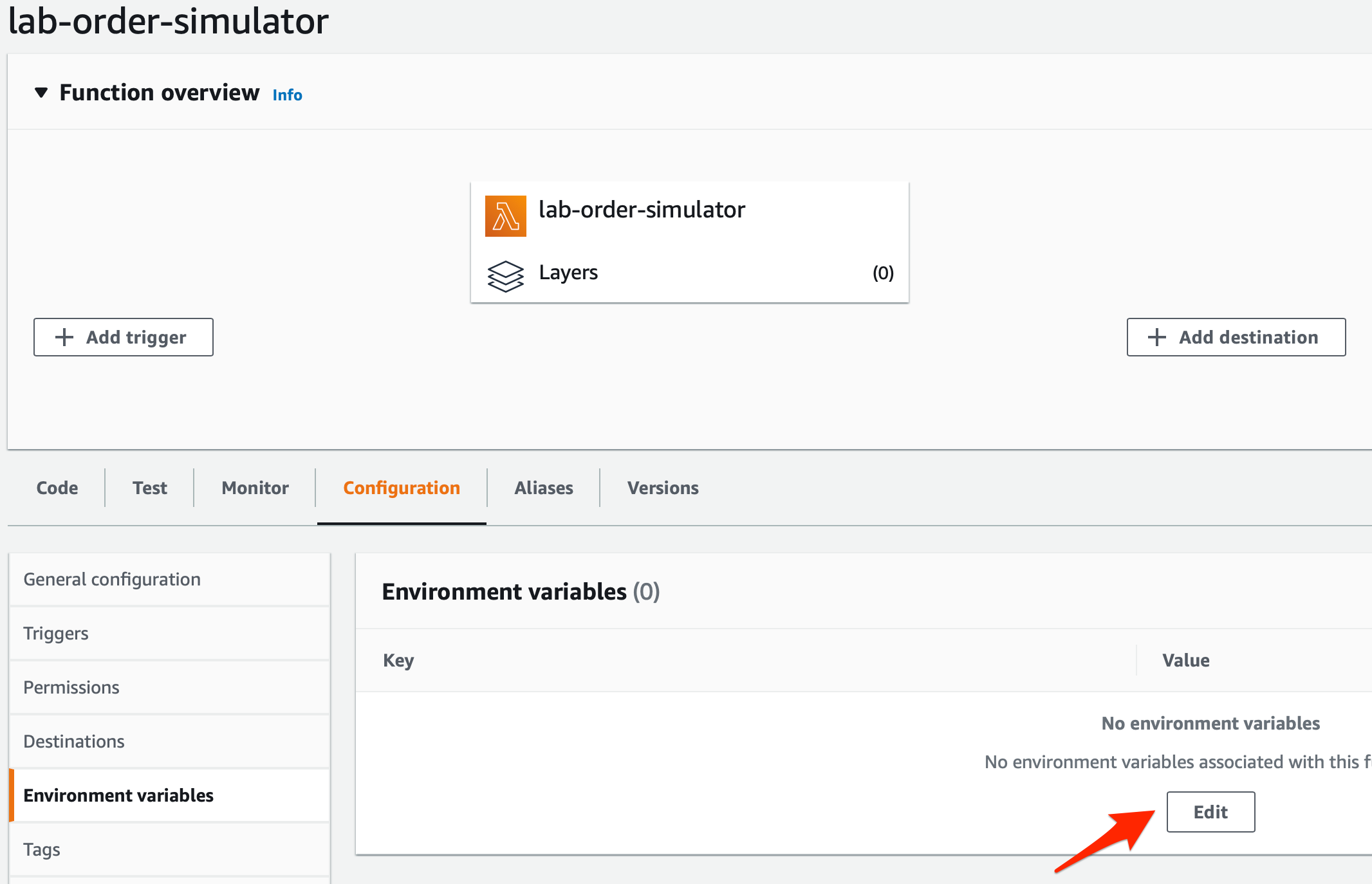

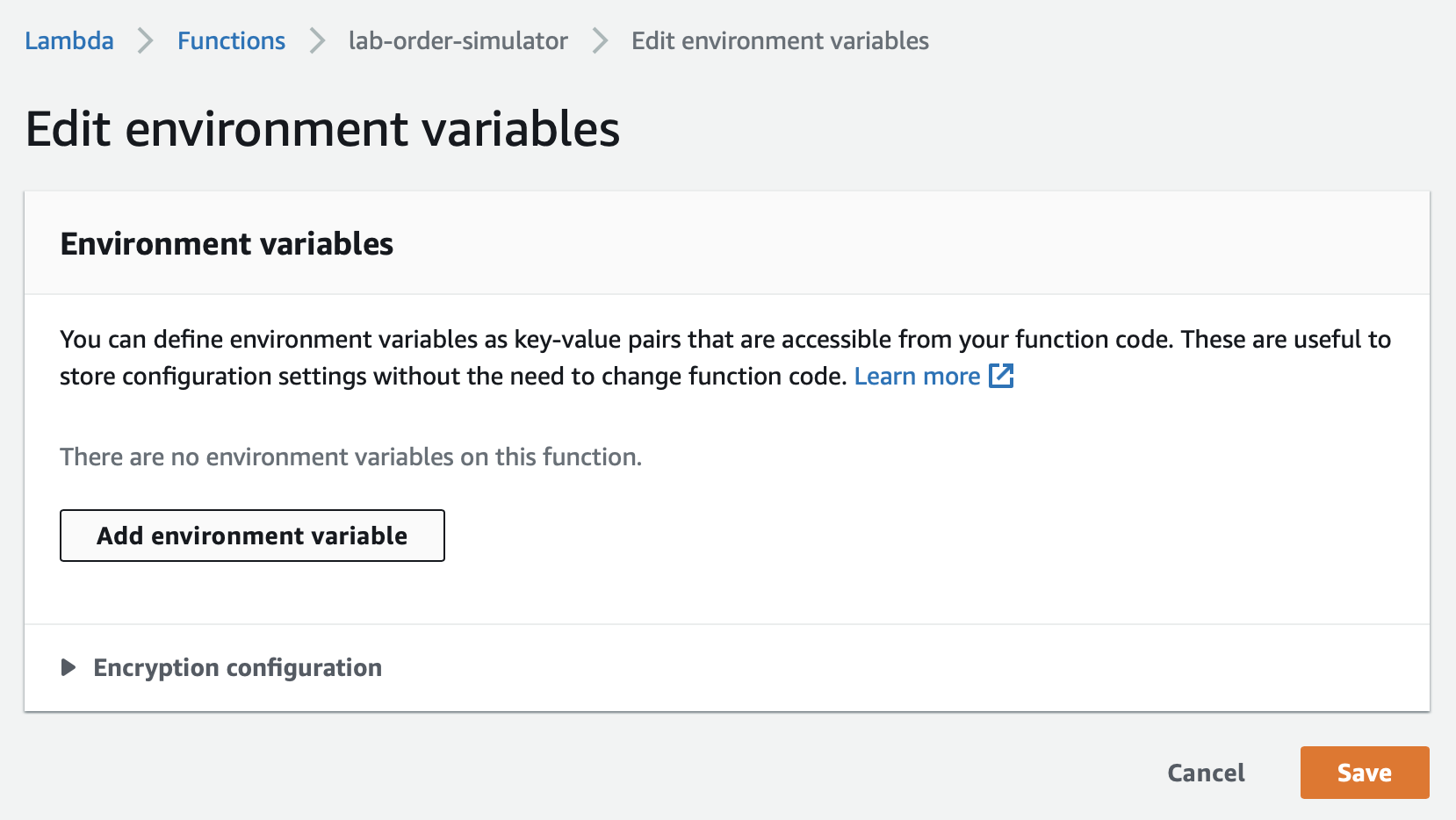

- Select the Configuration tab and click on the Environment variables link and then click on the Edit button.

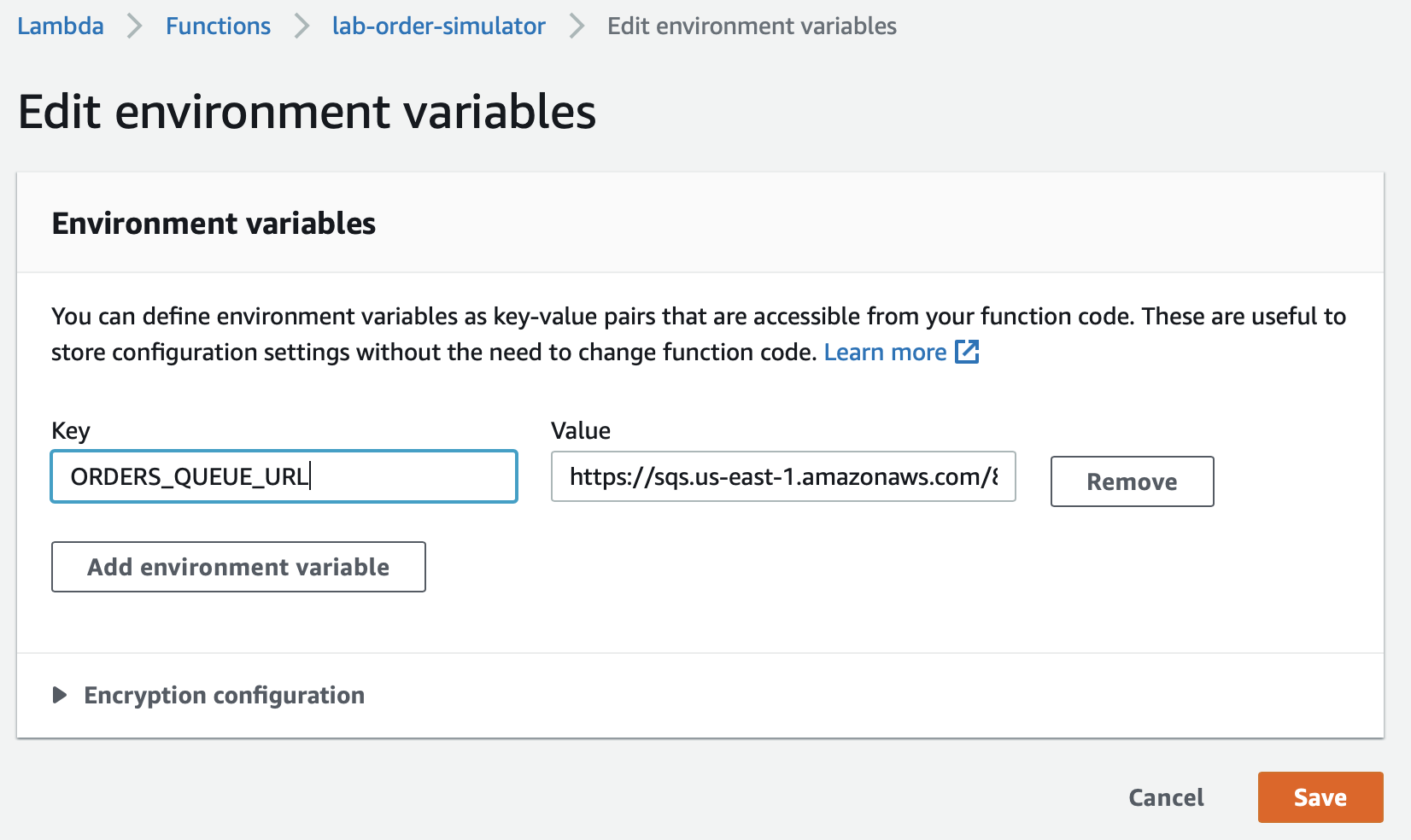

- Click Add environment variable

- Enter ORDERS_QUEUE_URL as the Key. Paste the orders-queue URL that you copied into the Value field. Click on Save.

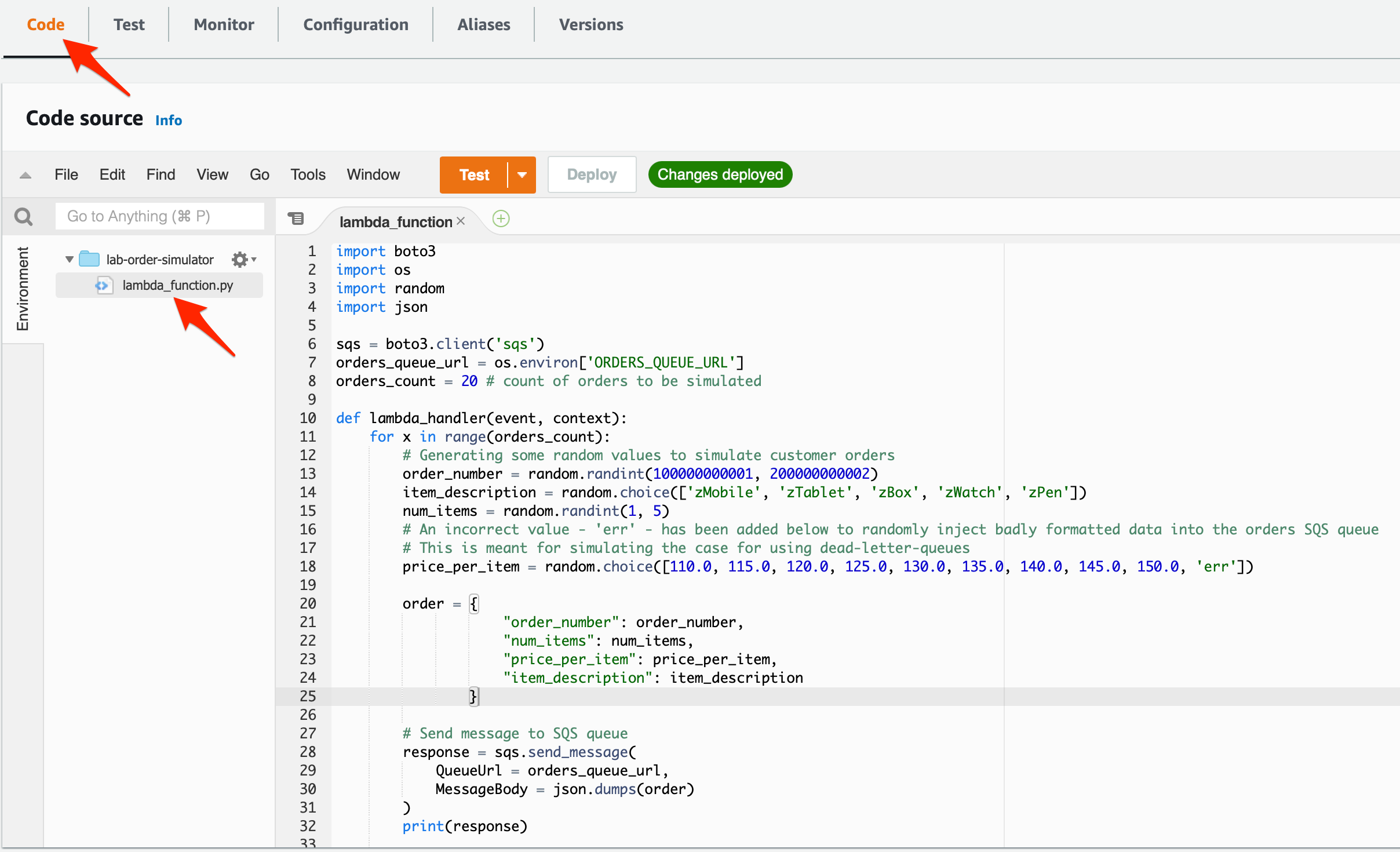

5. Click on the Code tab and then select the lambda_function.py file. Copy the contents of lab-order-simulator.py from the GitHub repository https://github.com/pporumbescu/Create-a-Decoupled-Backend-Architecture-Using-Lambda-SQS-and-DynamoDB.git, paste them into the Lambda function code editor, and click Deploy.
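To give a feel for what an order simulator of this kind does, here is a simplified sketch. It is not the lab-order-simulator.py file from the repository: the message shape (only `id` and `price_per_item`), the 15% error rate, and the helper names are my assumptions, based on the test run later in this post where some of 20 orders carry `err` as the price.

```python
import json
import os
import random


def build_order(order_id, rng, error_rate=0.15):
    """Build one order; some get the string "err" as a deliberately bad price."""
    if rng.random() < error_rate:
        price = "err"
    else:
        price = round(rng.uniform(1, 100), 2)
    return {"id": order_id, "price_per_item": price}


def send_orders(sqs, queue_url, count=20, seed=None):
    """Send `count` orders to the queue using a boto3 SQS client."""
    rng = random.Random(seed)
    for order_id in range(1, count + 1):
        sqs.send_message(
            QueueUrl=queue_url,
            MessageBody=json.dumps(build_order(order_id, rng)),
        )
    return count


def lambda_handler(event, context):
    import boto3  # bundled with the Lambda Python runtime

    # ORDERS_QUEUE_URL is the environment variable set in the previous step
    queue_url = os.environ["ORDERS_QUEUE_URL"]
    sent = send_orders(boto3.client("sqs"), queue_url)
    return {"statusCode": 200, "body": f"sent {sent} orders"}
```

Reading the queue URL from an environment variable keeps the function code free of account-specific values, which is why the earlier step configures `ORDERS_QUEUE_URL` instead of hard-coding it.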

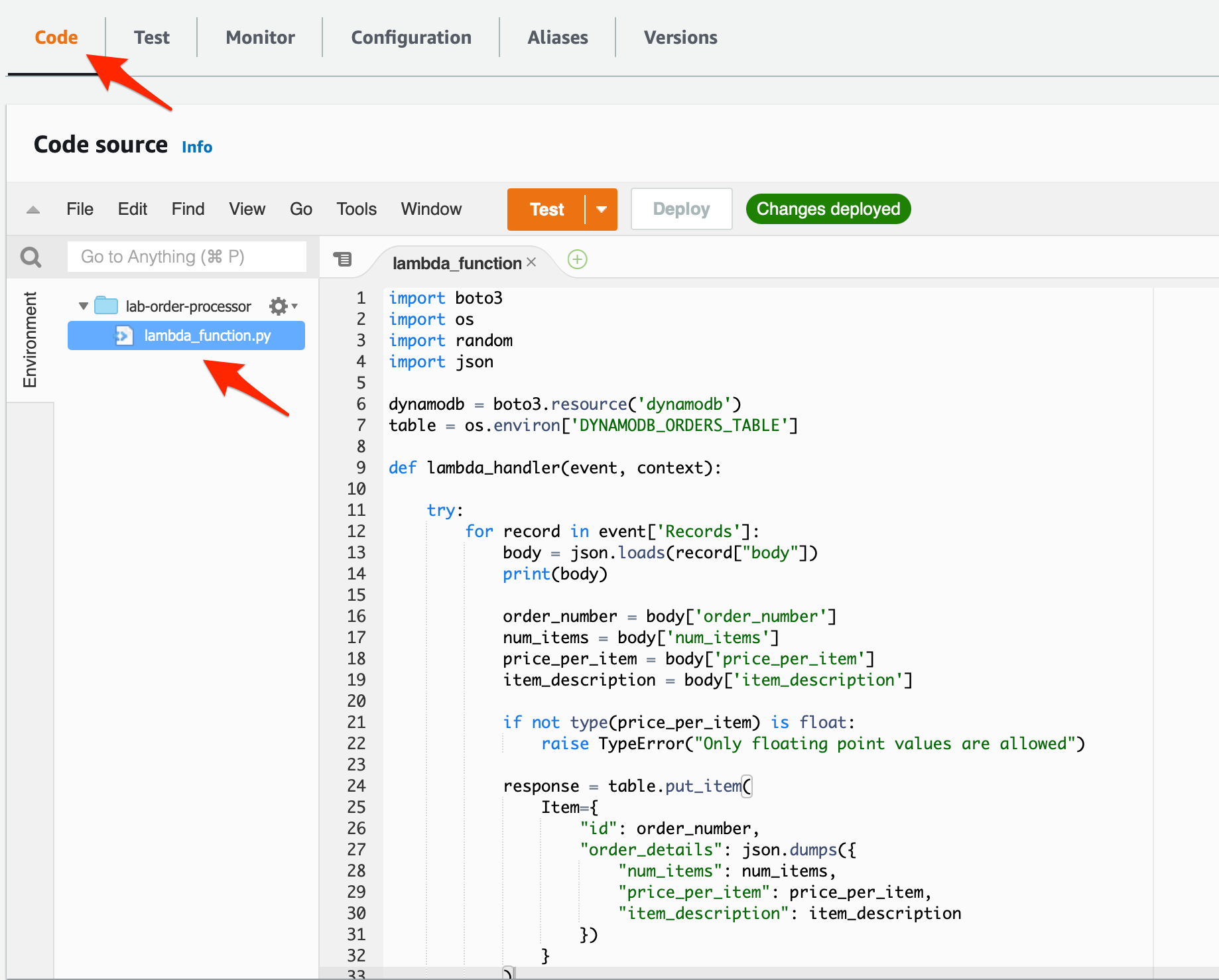

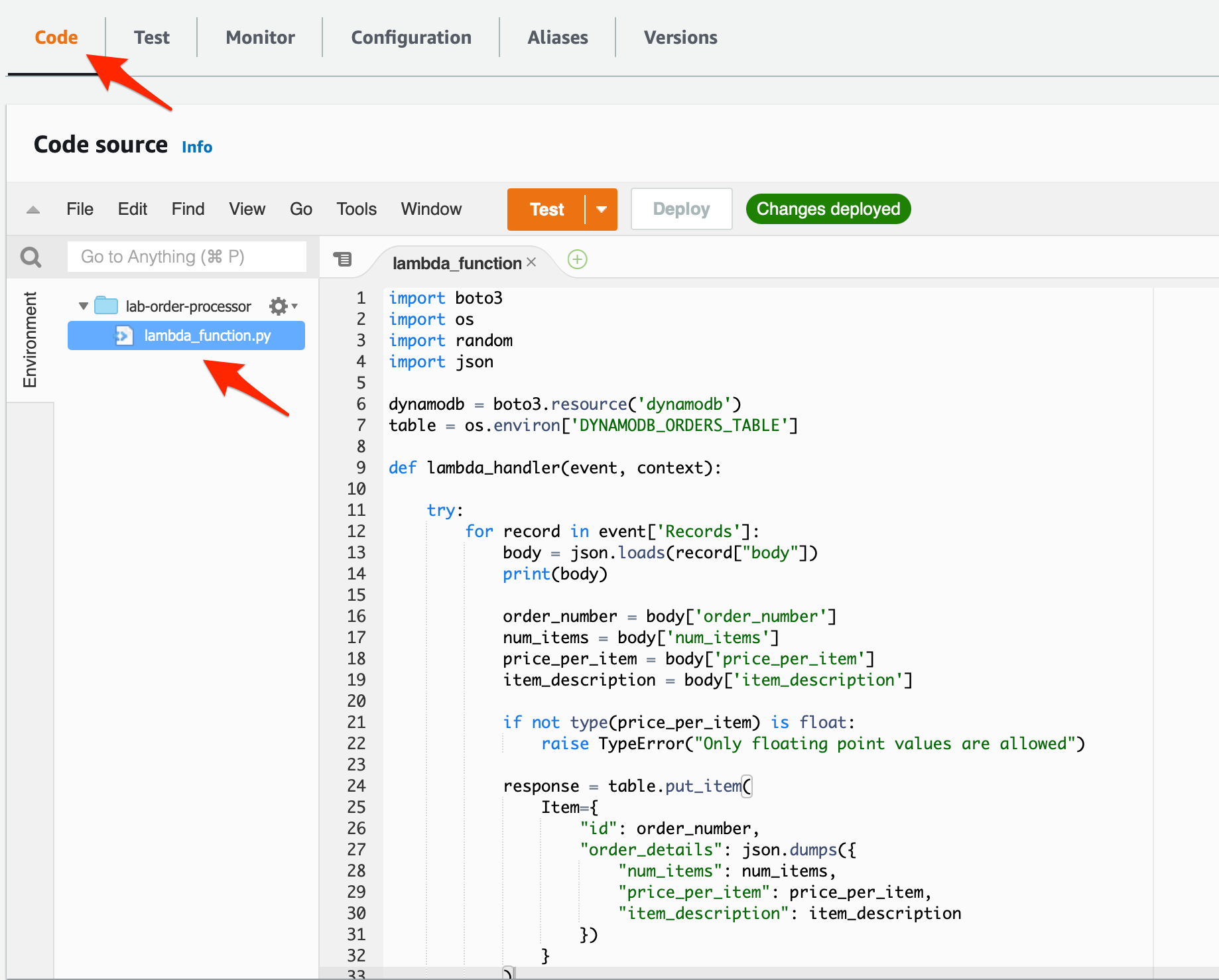

You need to create a Lambda function that processes each SQS message containing the order data. You also need to configure the Lambda function to use the customer orders queue as the event source. The Lambda function will complete the order processing by writing the order data into a DynamoDB table.

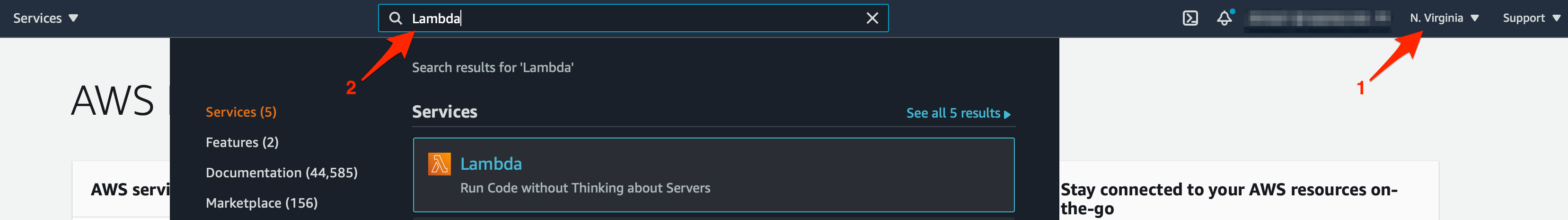

1. Make sure that you are in the N. Virginia AWS Region on the AWS Management Console. Enter Lambda in the search bar and select Lambda service.

Click on Create function button

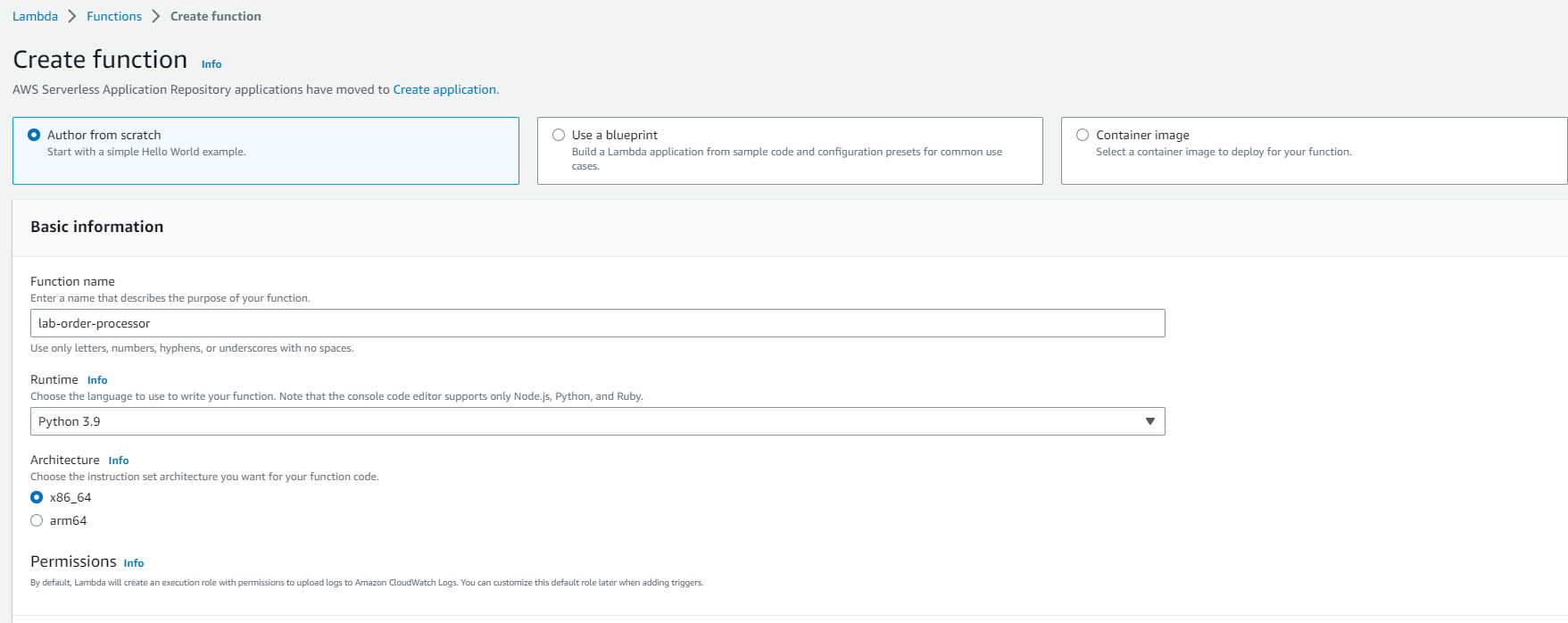

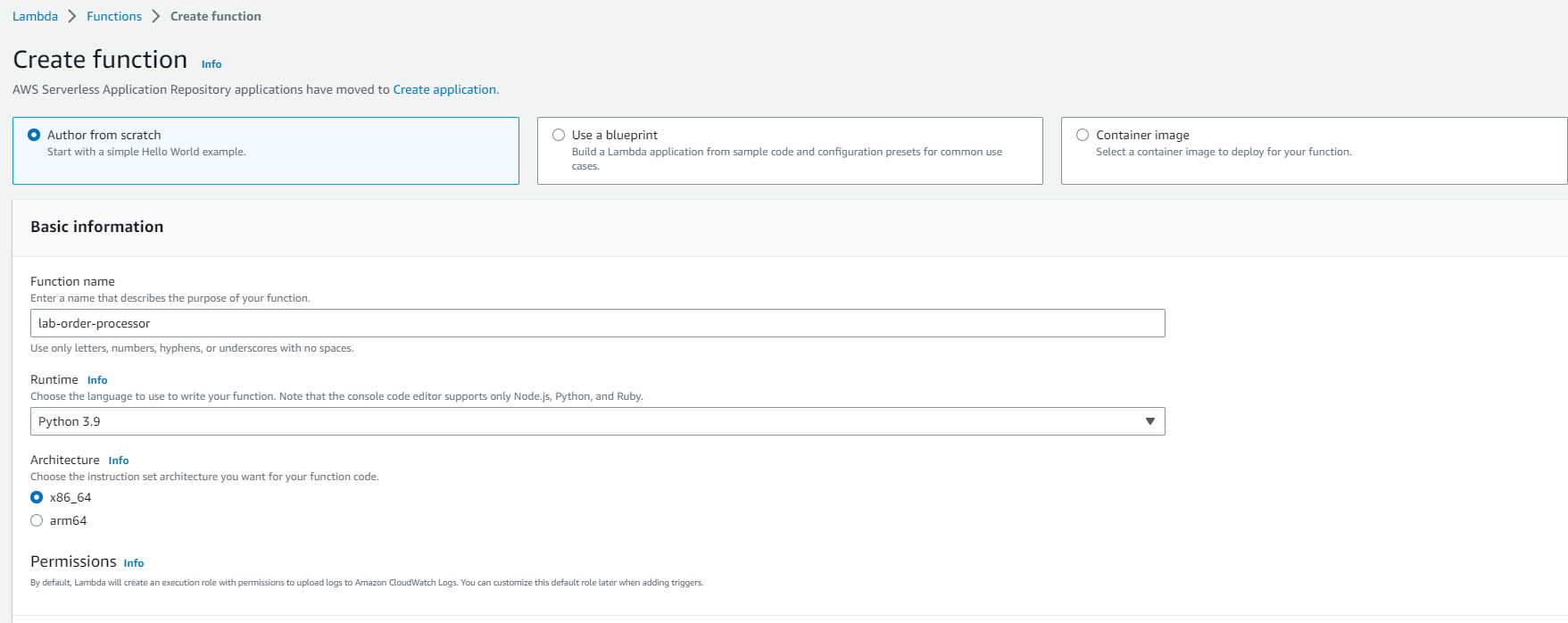

2. Let’s create the Lambda function by filling in the following values:

Select the Author from scratch template

Function name: lab-order-processor

Runtime: Python 3.9

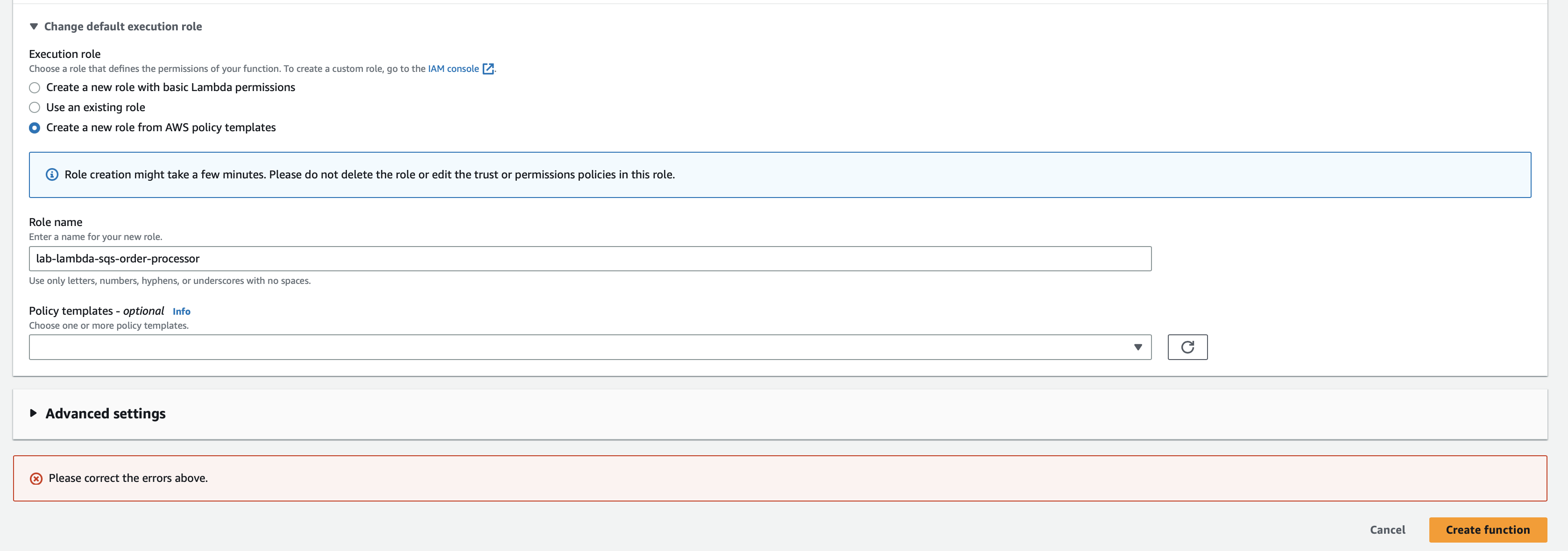

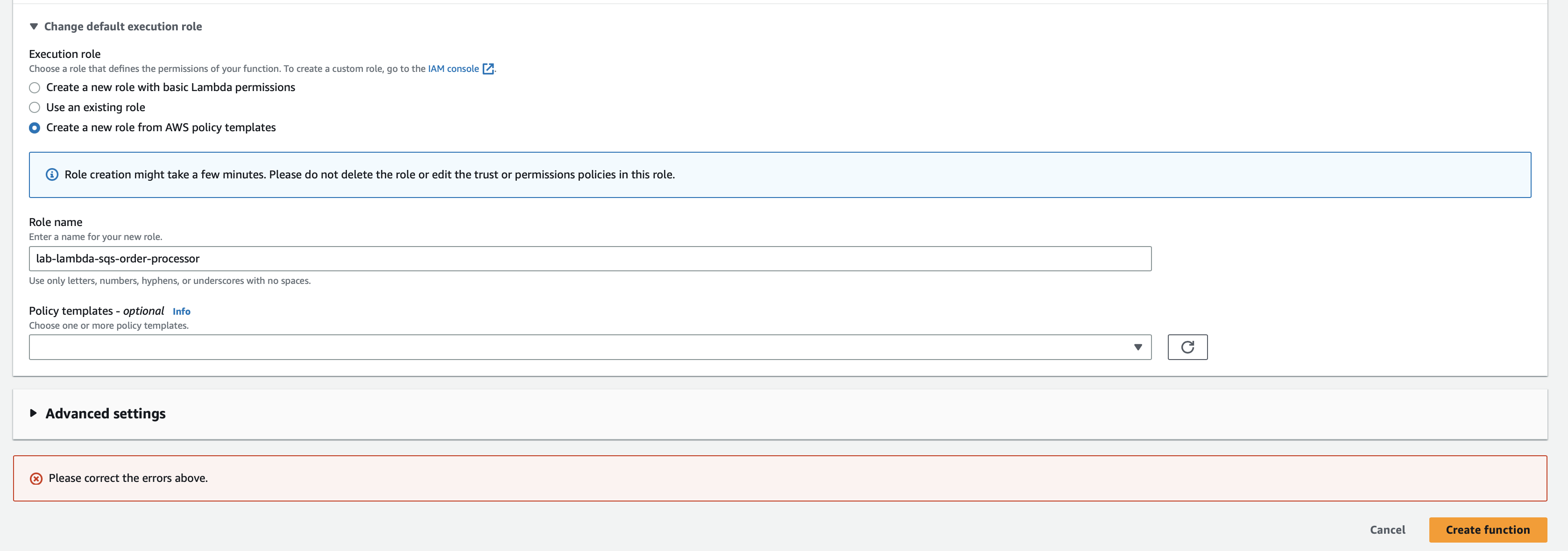

For execution role, select the option that says Create a new role from AWS policy templates

Role name: lab-lambda-sqs-order-processor

Click Create function to complete this step

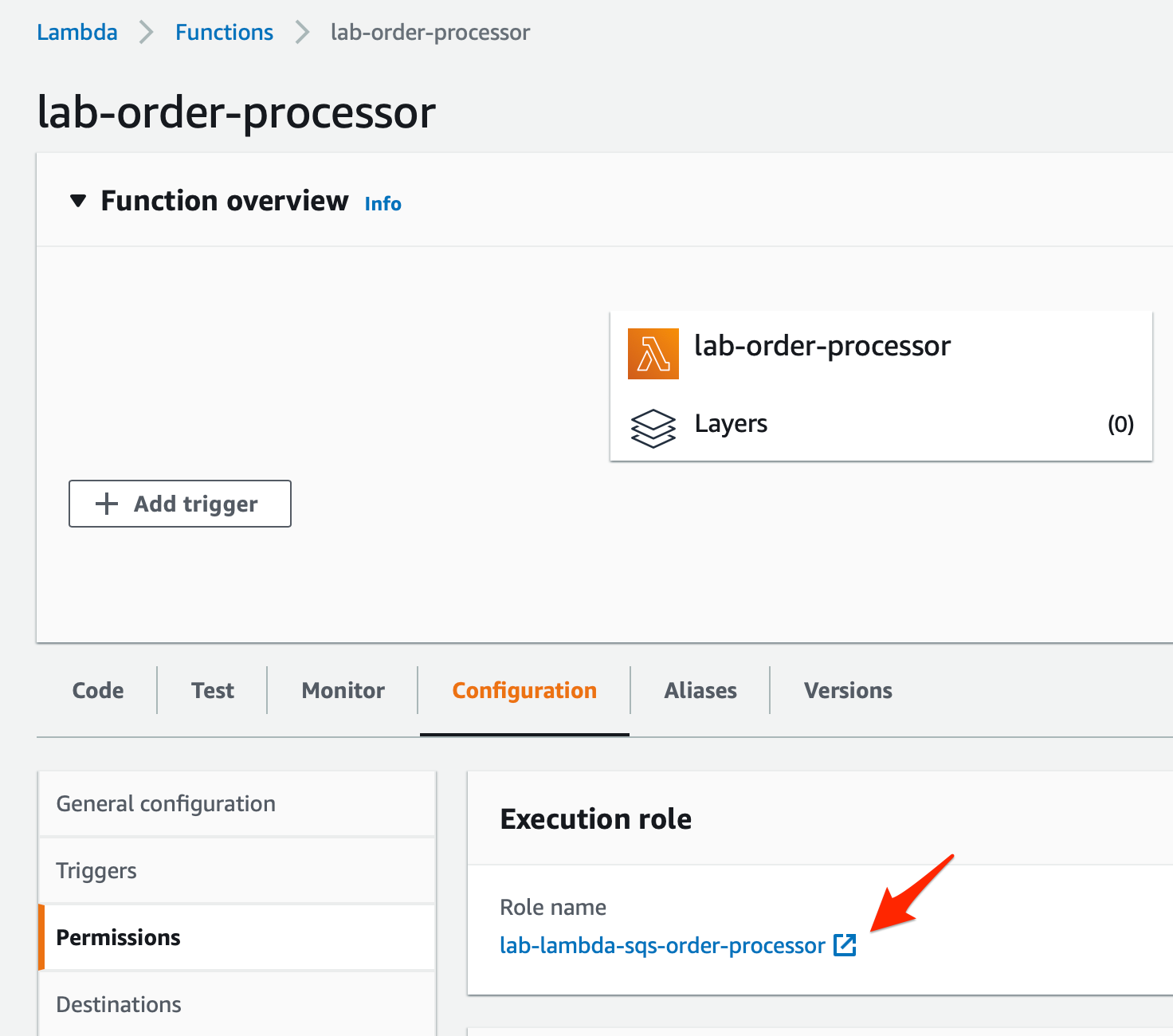

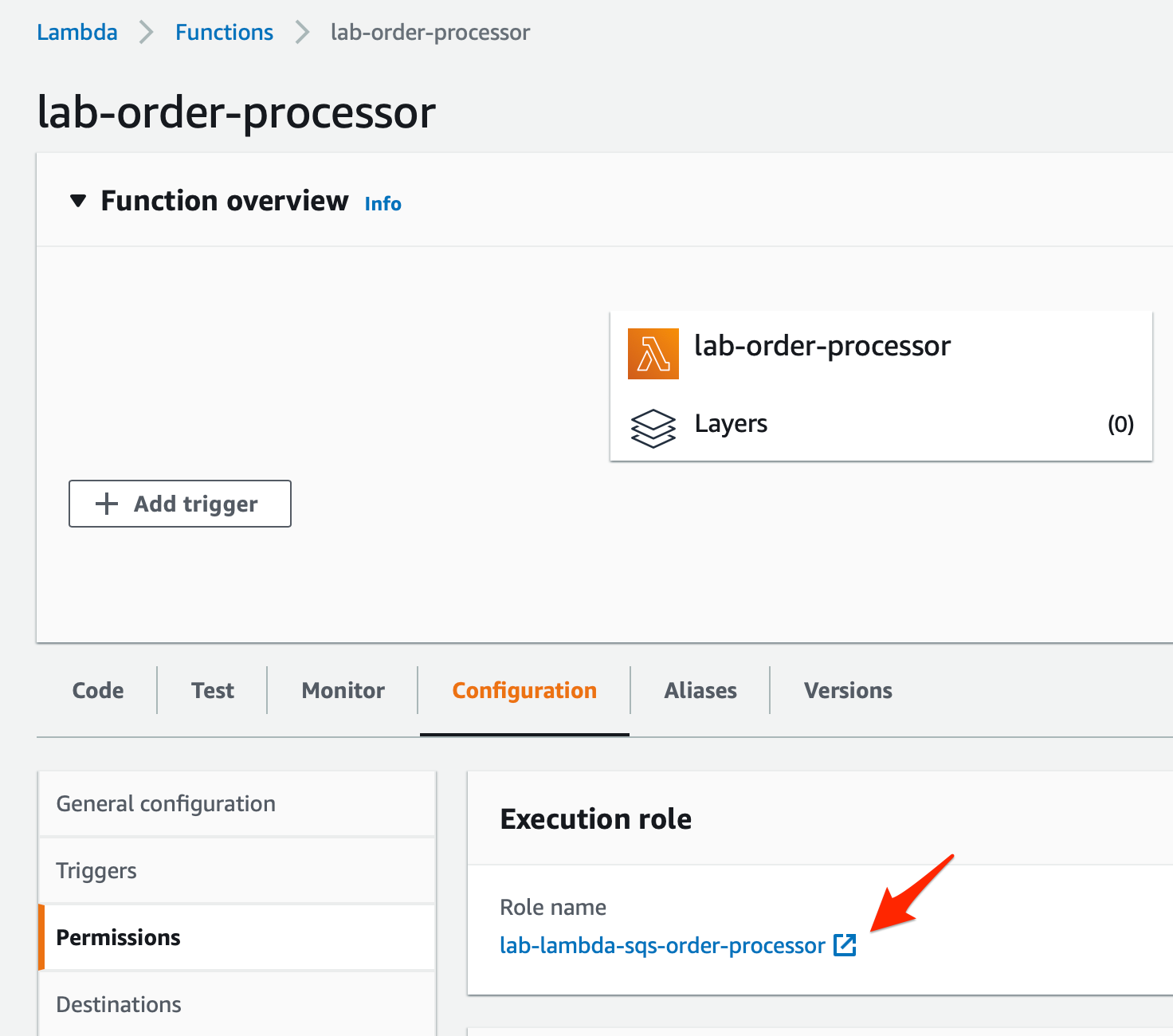

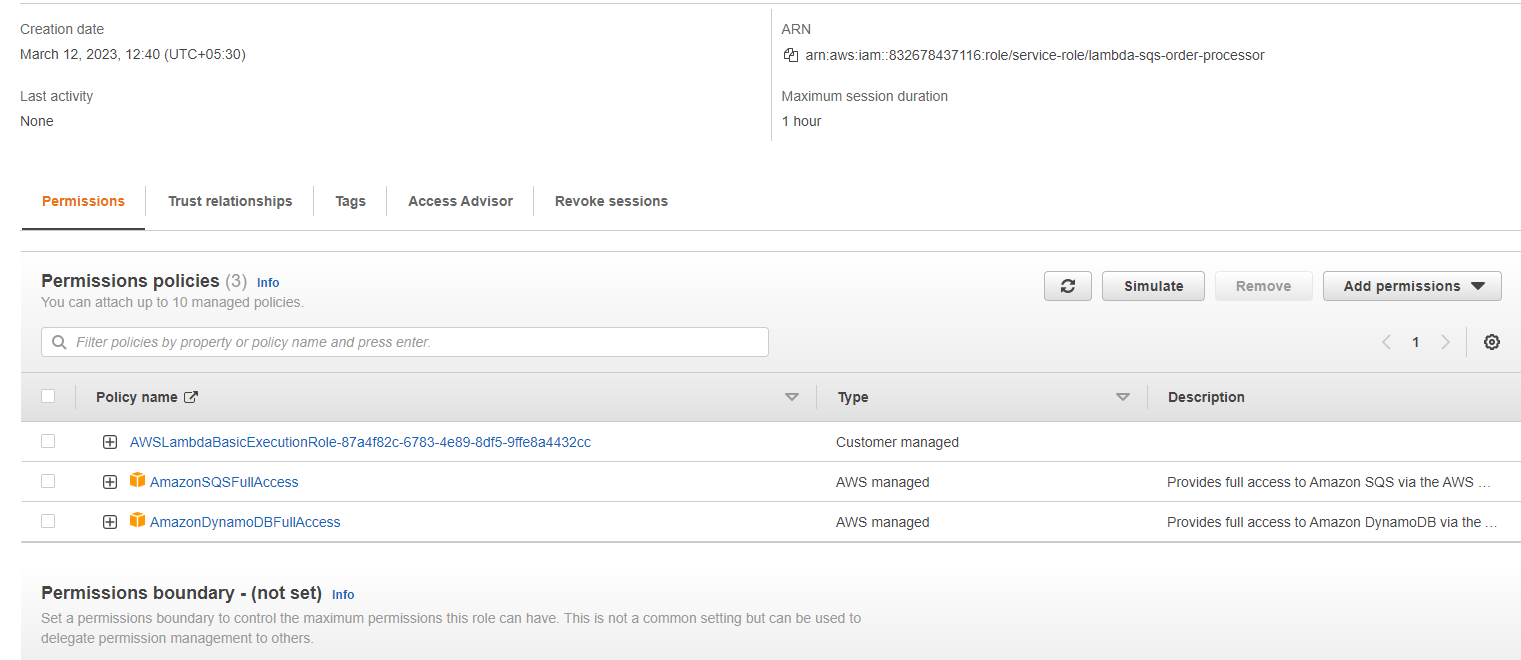

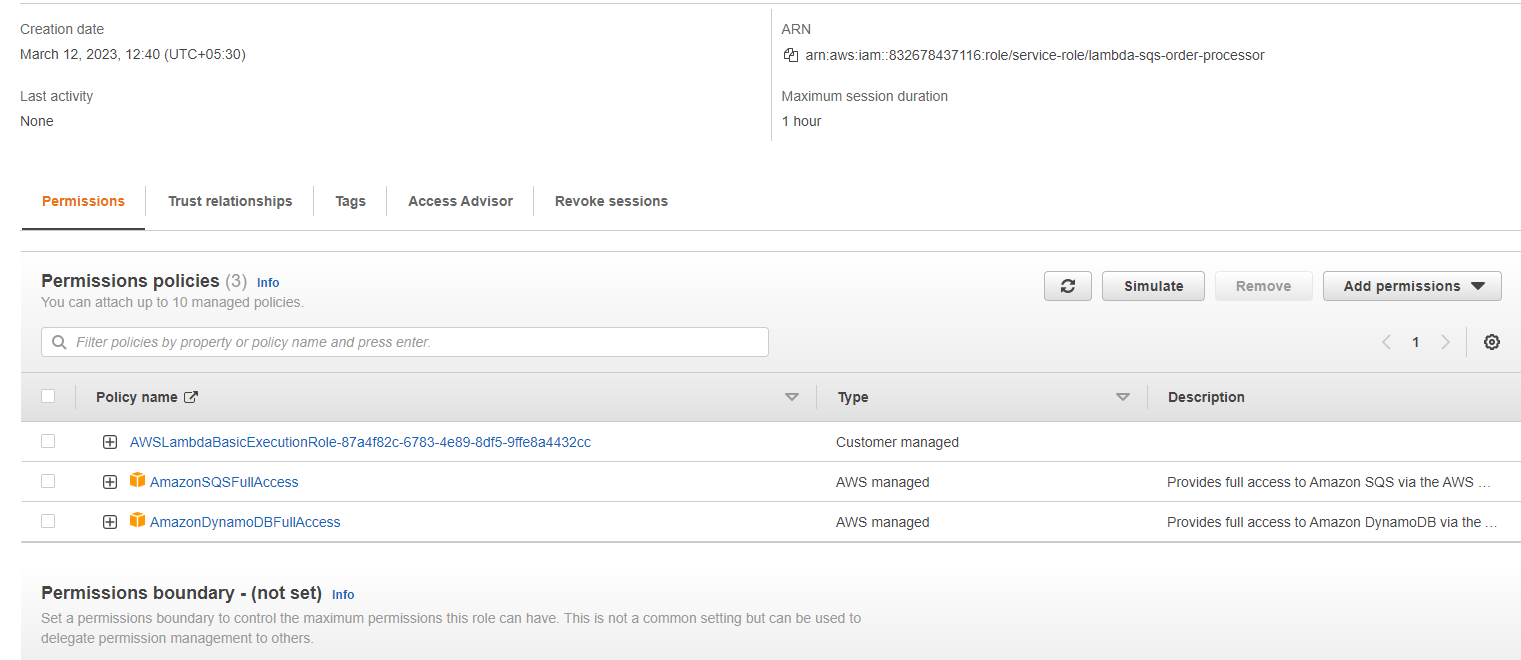

3. Modify the permissions of the Lambda's execution role.

Select the Configuration tab and click on the Permissions link on the left sidebar. Then, click on the hyperlink for the execution role.

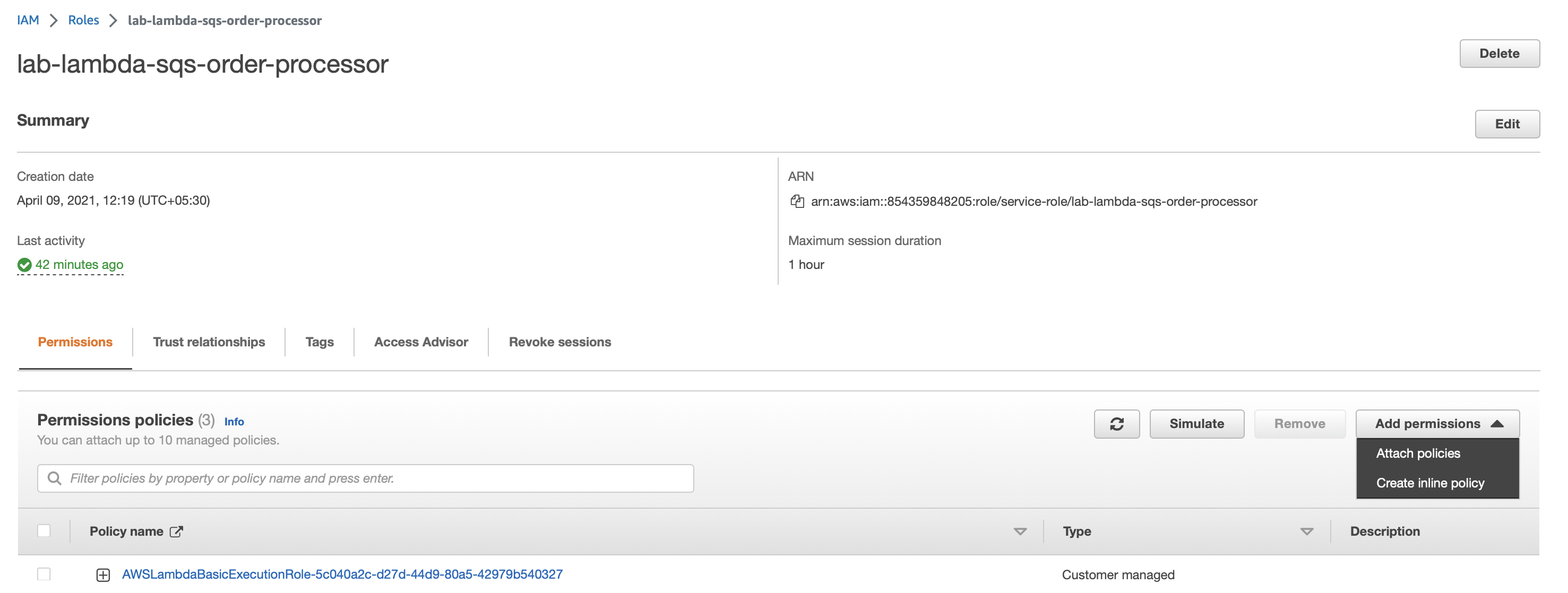

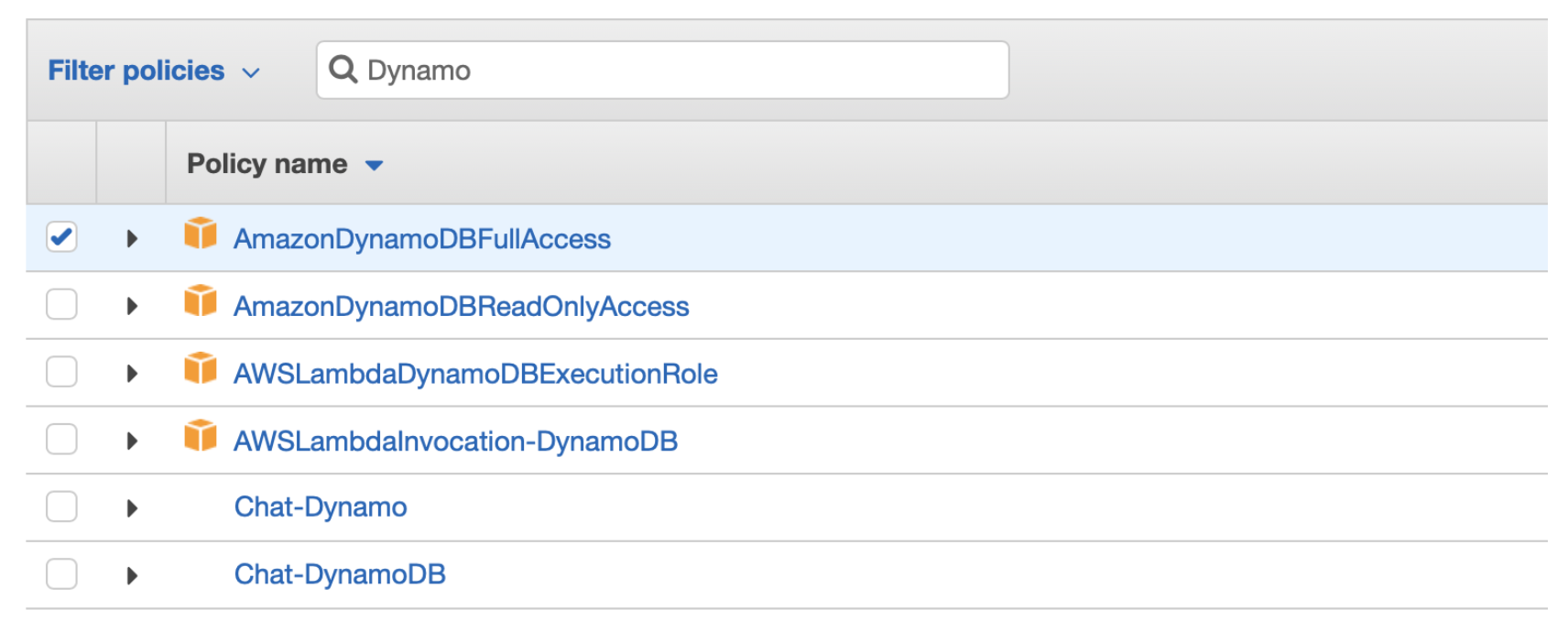

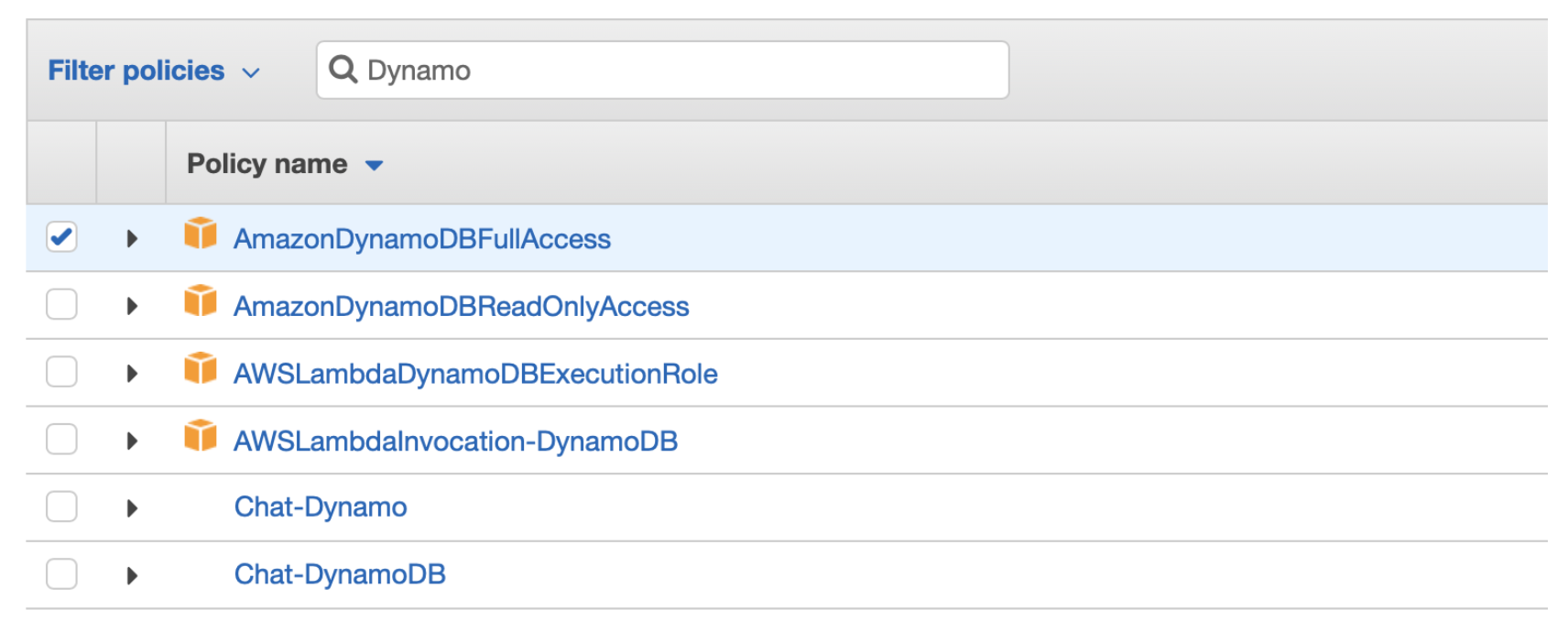

- You will notice that only the AWSLambdaBasicExecutionRole policy is attached to the Lambda in the Permissions tab. Click on the Add Permissions button and then select the Attach policies option.

- Enter SQS in the search bar and select the policy AmazonSQSFullAccess so that the Lambda function can receive and delete messages from the queue when the SQS trigger invokes it. Click on the Attach policy button to add this policy.

- On the Permissions tab, click again on the Add Permissions button and then select the Attach policies option. Enter DynamoDB in the search bar and select the policy AmazonDynamoDBFullAccess for the Lambda so that it can write the order data into the DynamoDB table. Click on the Attach policy button to add this policy.

- You should be able to see that all policies are attached to the Lambda function.

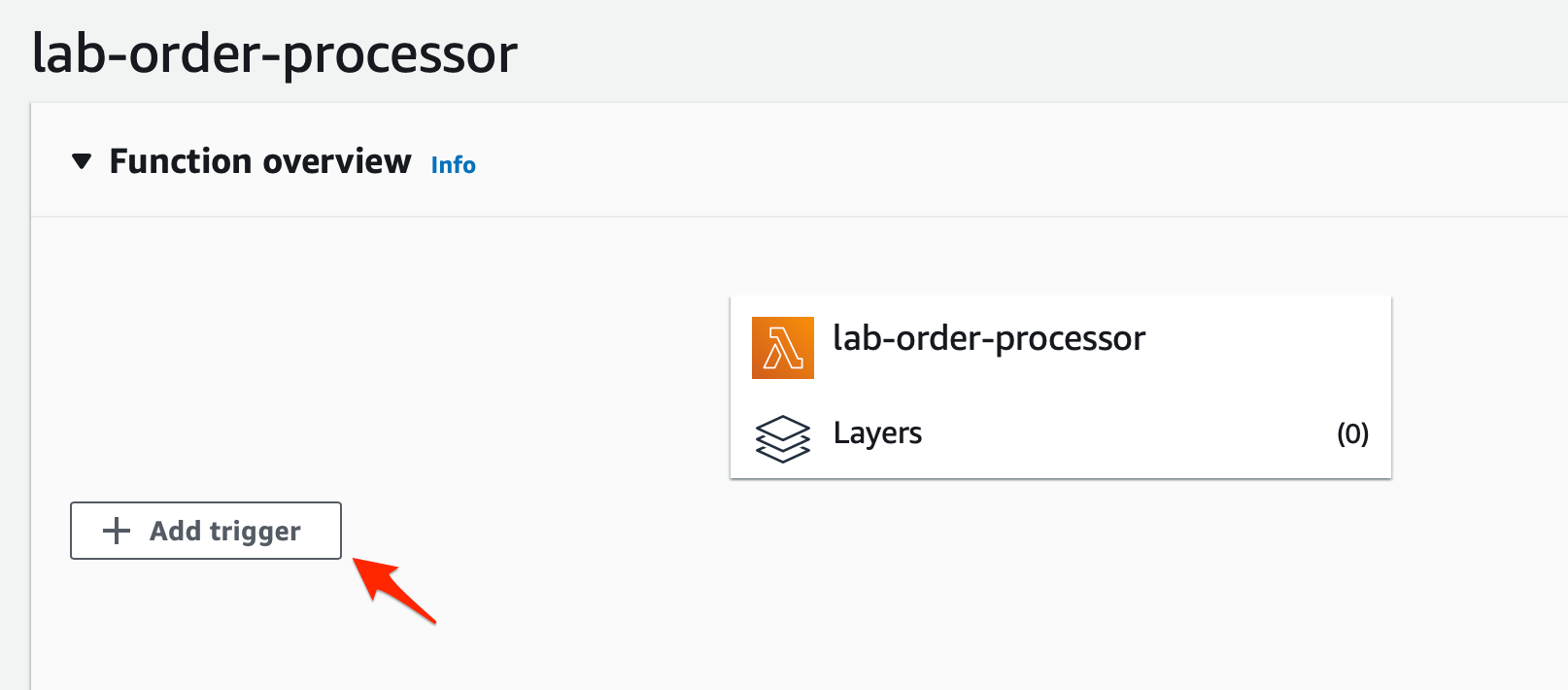

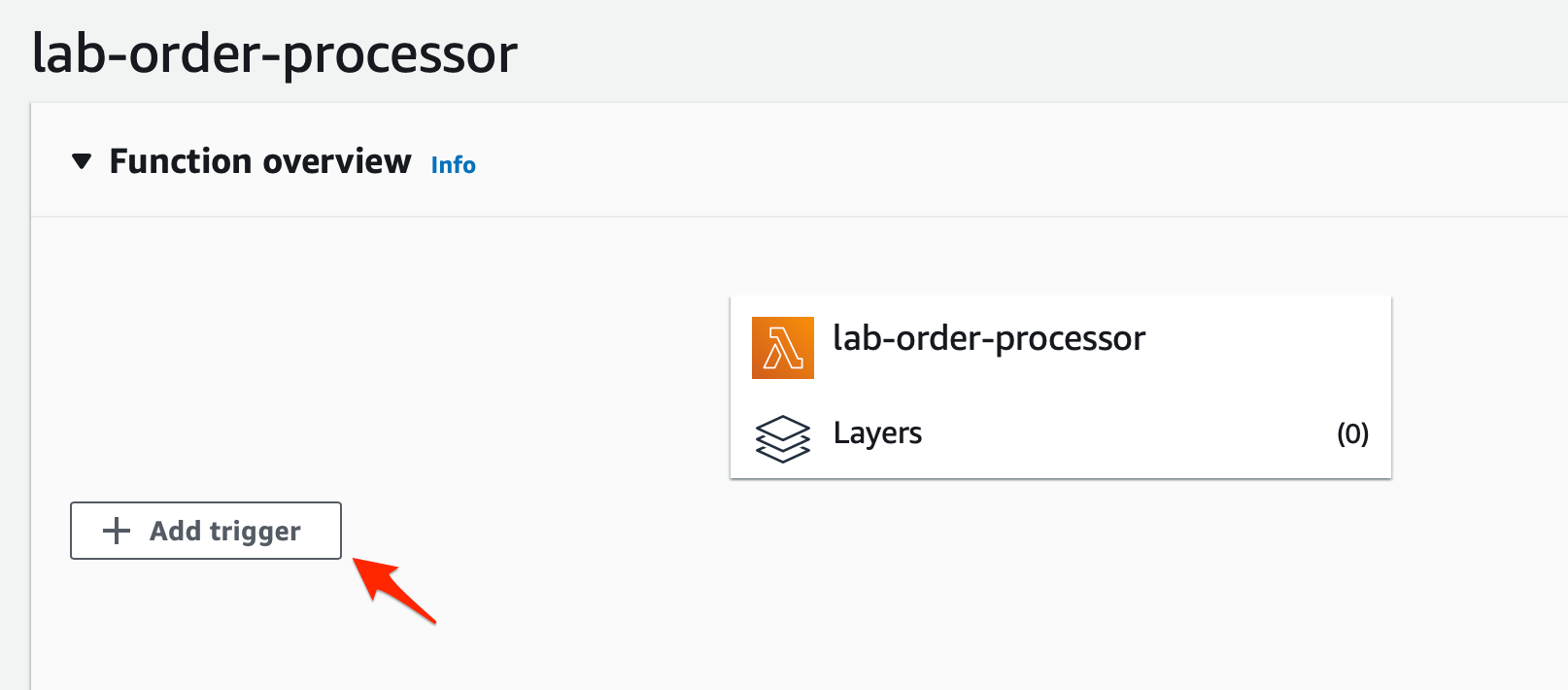

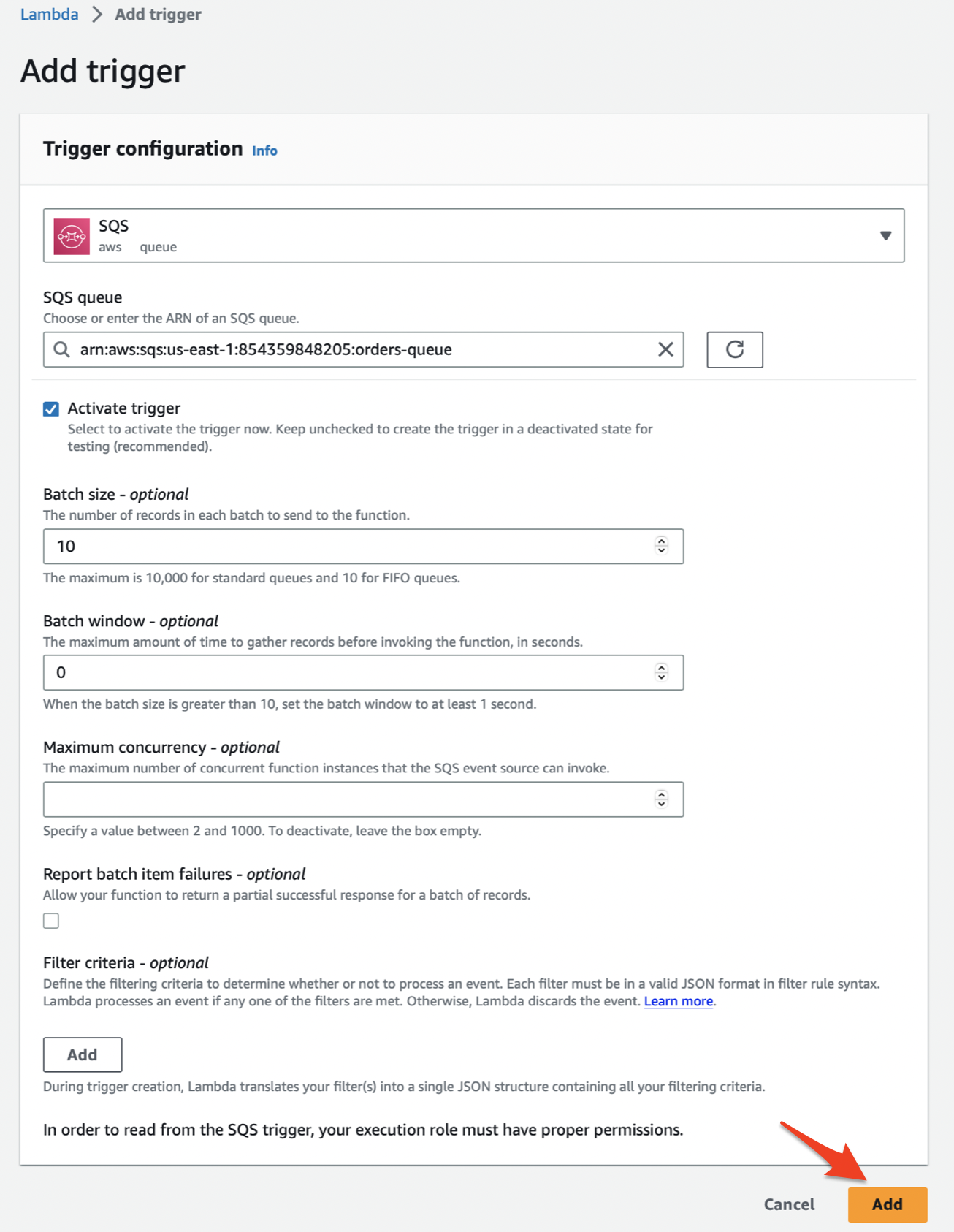

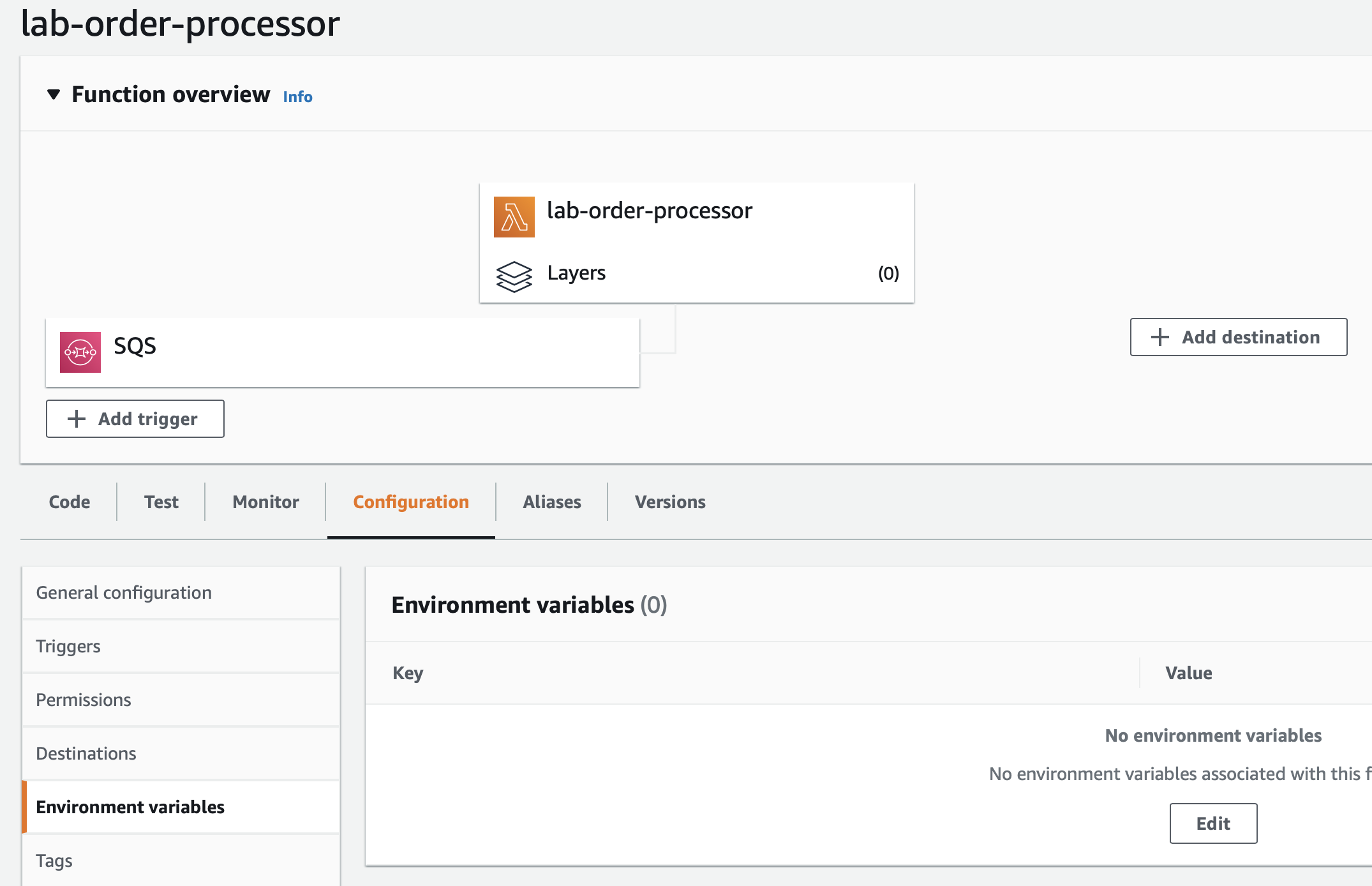

4. Configure the Lambda to set up a trigger from SQS

Go to the Function overview section of the Lambda and click on Add trigger

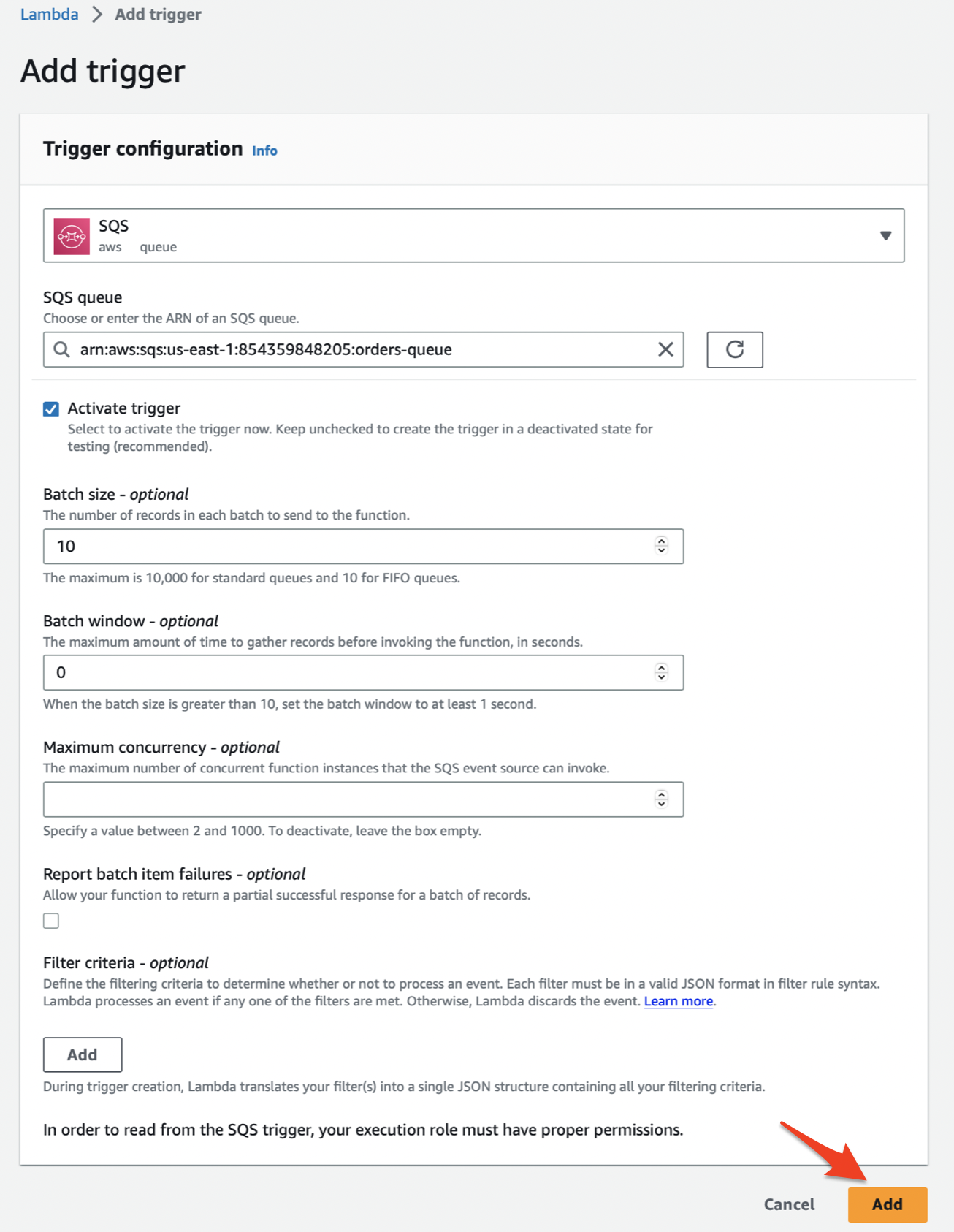

- Set the values for the Trigger configuration as follows:

- Trigger source: SQS

- SQS queue: select the orders-queue as the source queue

- Select the Activate trigger checkbox

- Batch size: 10

- Batch window: 0

- Click on the Add button to complete this step

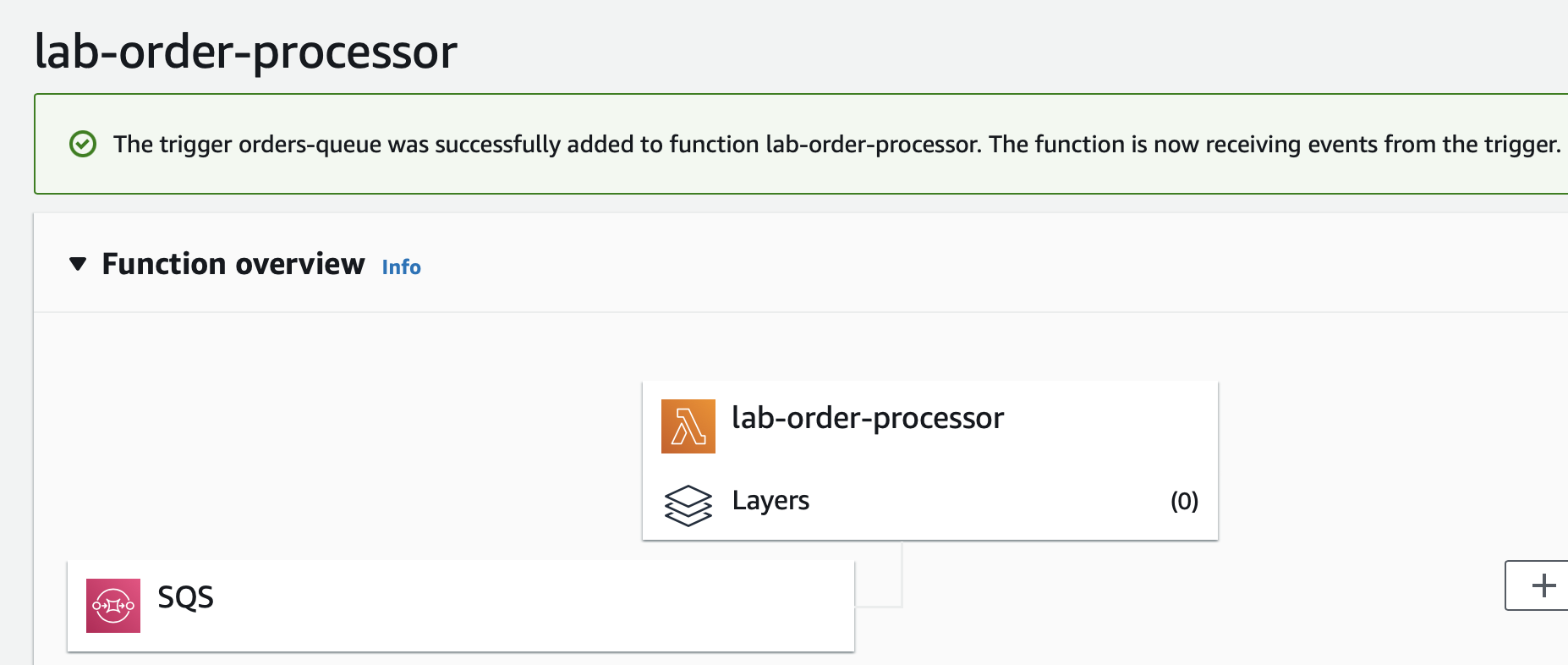

- You should be able to see that trigger has been set up successfully
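The trigger settings above correspond to an event source mapping on the Lambda side. As a rough sketch, assuming a boto3 Lambda client is passed in and the queue ARN is already known, the same configuration could be expressed like this (the helper names are mine):

```python
def sqs_trigger_settings(queue_arn, function_name="lab-order-processor"):
    """Keyword arguments for a boto3 Lambda create_event_source_mapping call."""
    return {
        "EventSourceArn": queue_arn,
        "FunctionName": function_name,
        "Enabled": True,  # the "Activate trigger" checkbox
        "BatchSize": 10,  # up to 10 messages per invocation
        "MaximumBatchingWindowInSeconds": 0,  # do not wait to fill a batch
    }


def add_sqs_trigger(lambda_client, queue_arn):
    """Create the mapping so Lambda polls the queue on the function's behalf."""
    return lambda_client.create_event_source_mapping(**sqs_trigger_settings(queue_arn))
```

With a batch window of 0, Lambda invokes the function as soon as messages are available instead of waiting to collect a full batch of 10.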

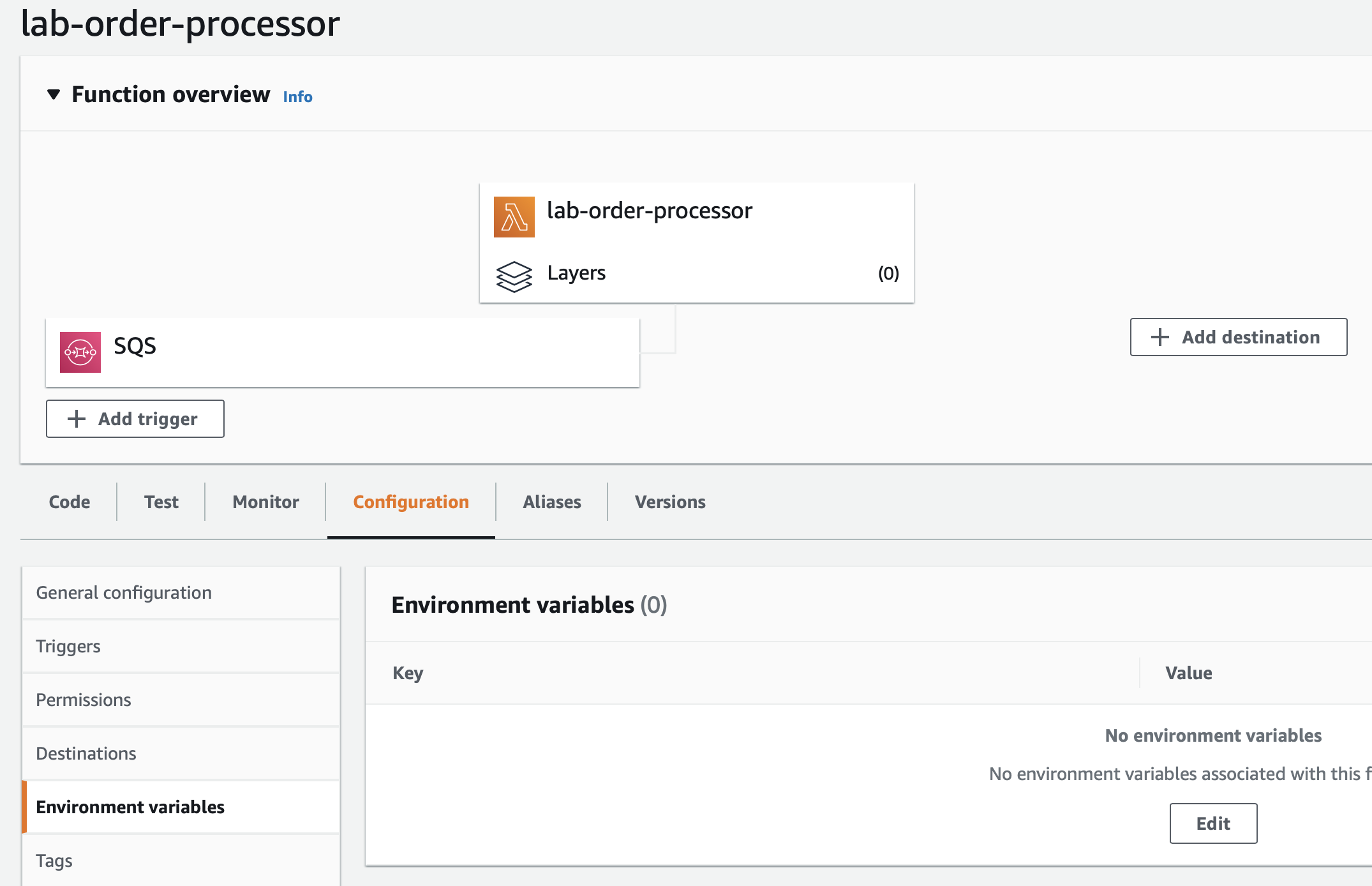

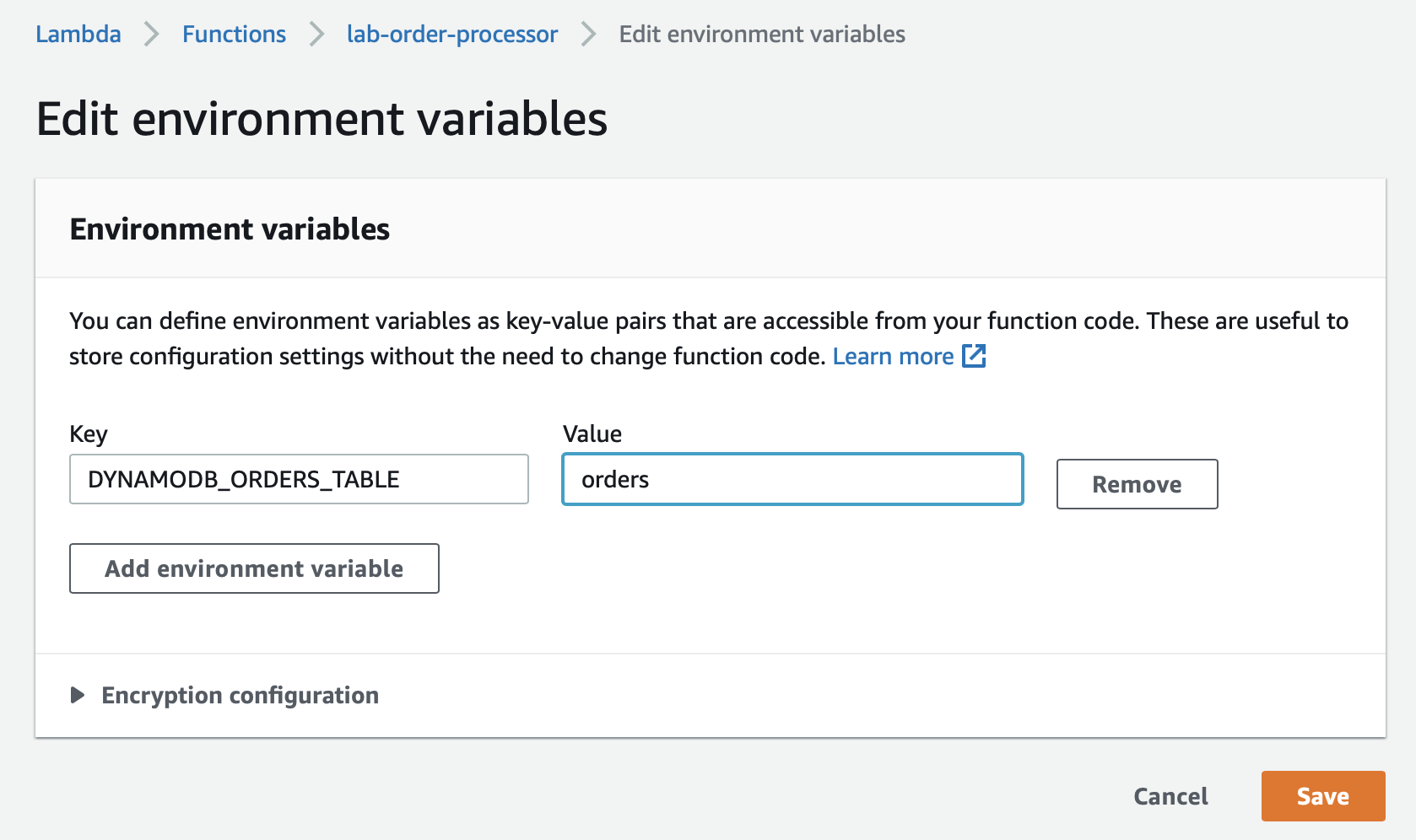

5. Configure Lambda to set up the environment variable for the DynamoDB table used to store the order data.

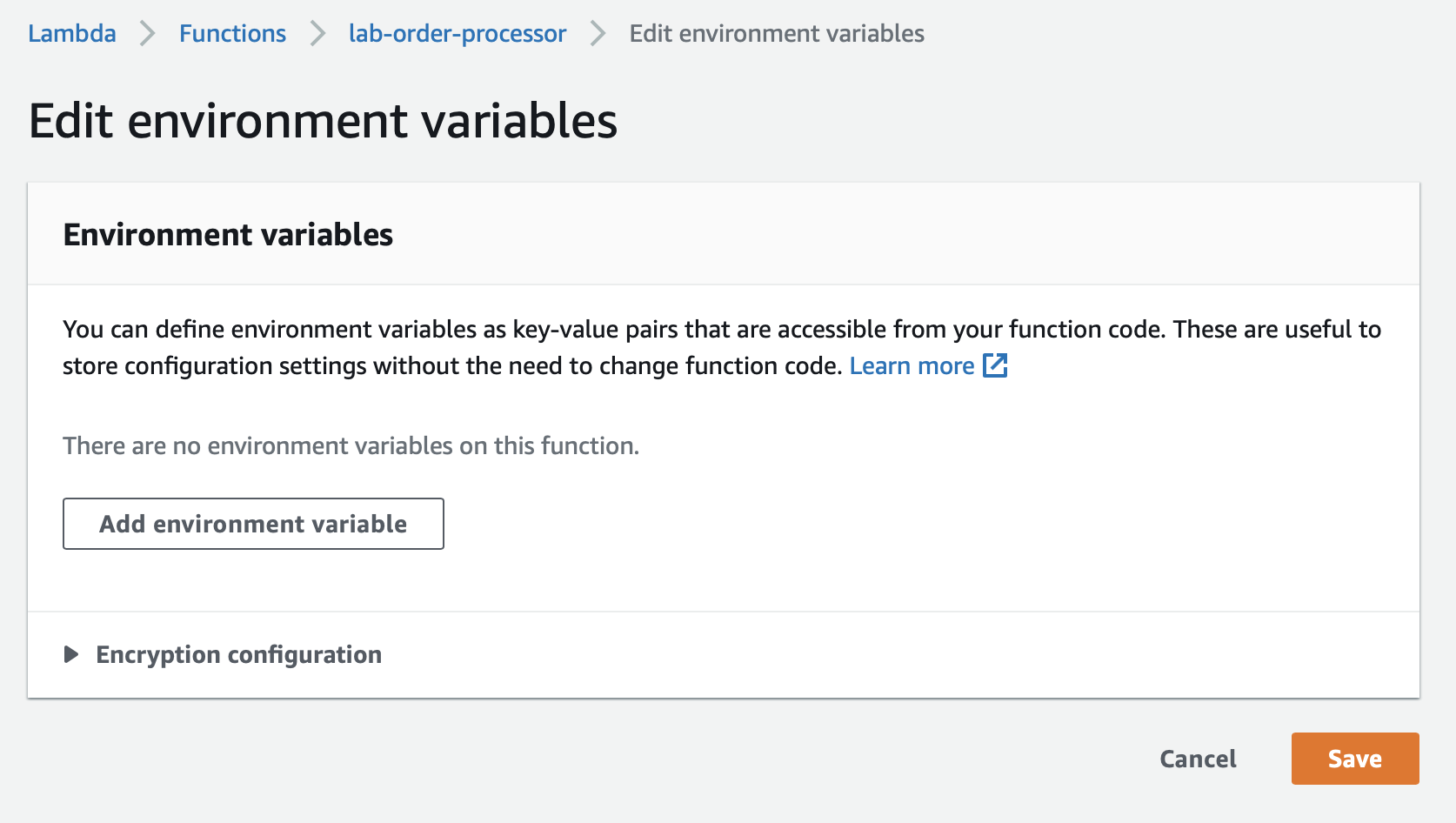

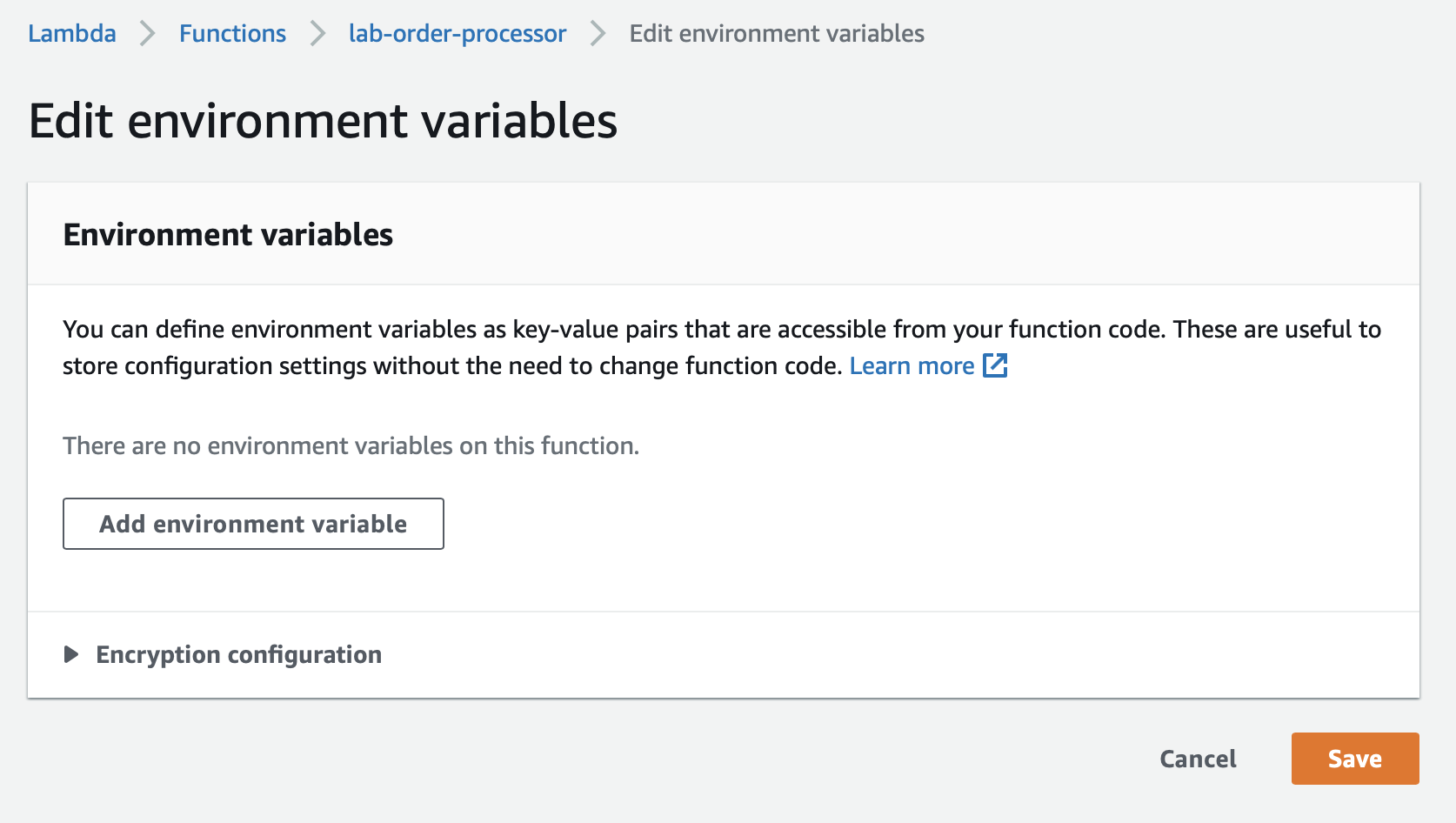

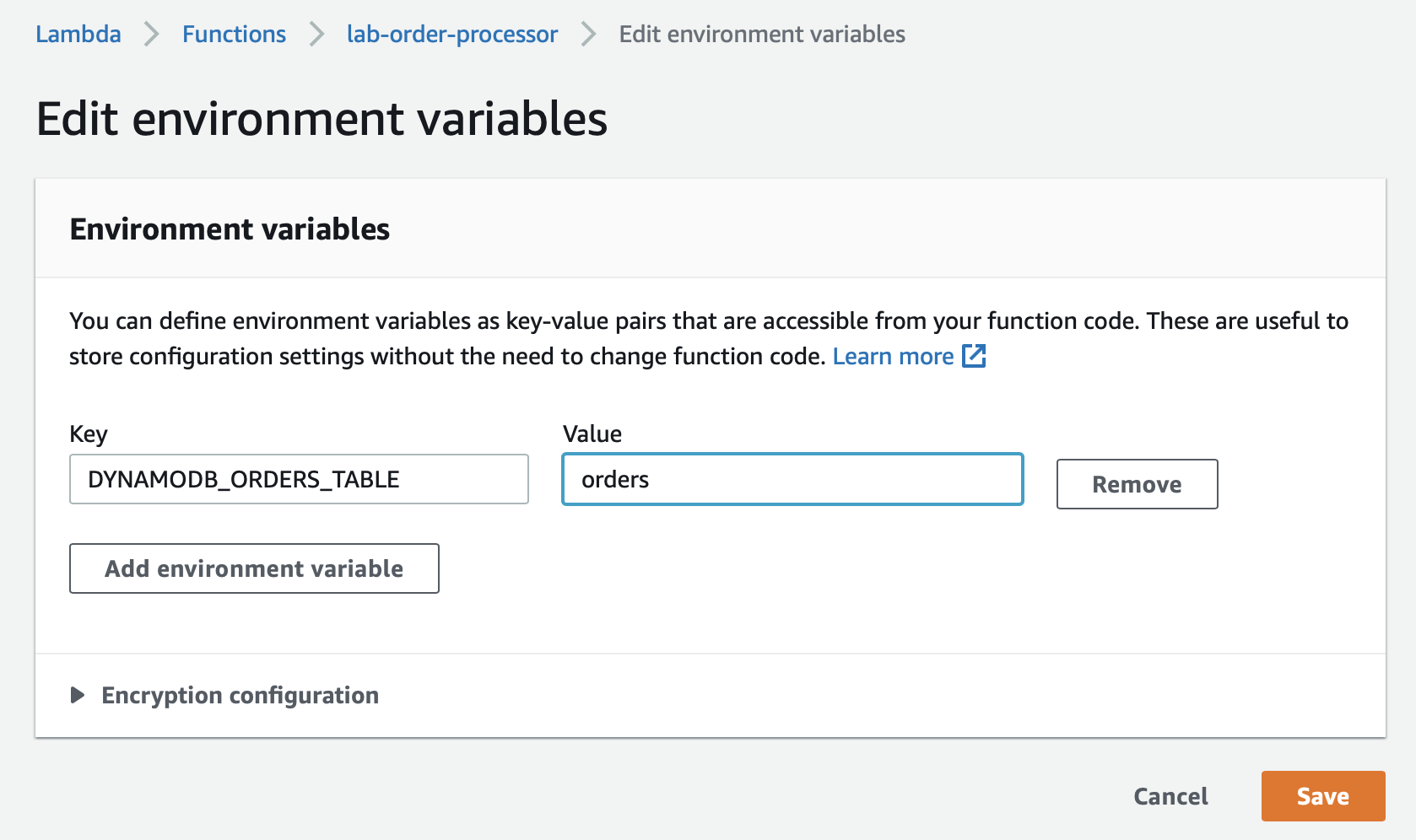

Click on the Configuration tab and select the Environment variables link in the left sidebar. Click on the Edit button.

- Click on the Add environment variable button.

- Enter DYNAMODB_ORDERS_TABLE as the Key. Put orders as the Value. Click on Save.

6. Click on the Code tab and then select the lambda_function.py file. Copy the contents of lab-order-processor.py from the GitHub repository https://github.com/pporumbescu/Create-a-Decoupled-Backend-Architecture-Using-Lambda-SQS-and-DynamoDB.git, paste them into the Lambda function code editor, and click Deploy.
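The following is a simplified sketch of how an order processor like this one might work. It is not the lab-order-processor.py file from the repository. It assumes each message body is JSON with `id` and `price_per_item` fields, and it reports bad messages through Lambda's partial batch response, which only takes effect if "Report batch item failures" is enabled on the SQS trigger. The lab steps above do not enable that option, so treat this as one possible design and not as the lab's actual behavior.

```python
import json
import os
from decimal import Decimal, InvalidOperation


def parse_order(body):
    """Turn one SQS message body into a DynamoDB item, or raise ValueError."""
    order = json.loads(body)  # malformed JSON raises a ValueError subclass
    try:
        # boto3's DynamoDB resource expects Decimal, not float, for numbers
        price = Decimal(str(order["price_per_item"]))
        order_id = int(order["id"])
    except (KeyError, TypeError, InvalidOperation) as exc:
        raise ValueError(f"bad order: {body!r}") from exc
    if not price.is_finite():
        raise ValueError(f"bad price in order: {body!r}")
    return {"id": order_id, "price_per_item": price}


def process_records(records, table):
    """Write valid orders to the table and report the invalid ones."""
    failures = []
    for record in records:
        try:
            item = parse_order(record["body"])
        except ValueError:
            # Reported messages stay on the queue and are retried until
            # the queue's maximum receives moves them to the DLQ
            failures.append({"itemIdentifier": record["messageId"]})
            continue
        table.put_item(Item=item)
    return {"batchItemFailures": failures}


def lambda_handler(event, context):
    import boto3  # bundled with the Lambda Python runtime

    # DYNAMODB_ORDERS_TABLE is the environment variable set in the previous step
    table = boto3.resource("dynamodb").Table(os.environ["DYNAMODB_ORDERS_TABLE"])
    return process_records(event["Records"], table)
```

Because `put_item` overwrites any existing item with the same `id`, writing the same order twice is harmless, which matters since SQS standard queues can deliver a message more than once.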

To test your end-to-end architecture, you need to create customer orders by executing the order simulator Lambda (created in Task 3), which puts customer orders into the SQS queue. This, in turn, triggers the order processor Lambda (created in Task 4), which captures the customer order data in a DynamoDB table. The architecture thus decouples the order-creating component from the order-processing component. It can also handle missing or badly formatted data without breaking the workflow.

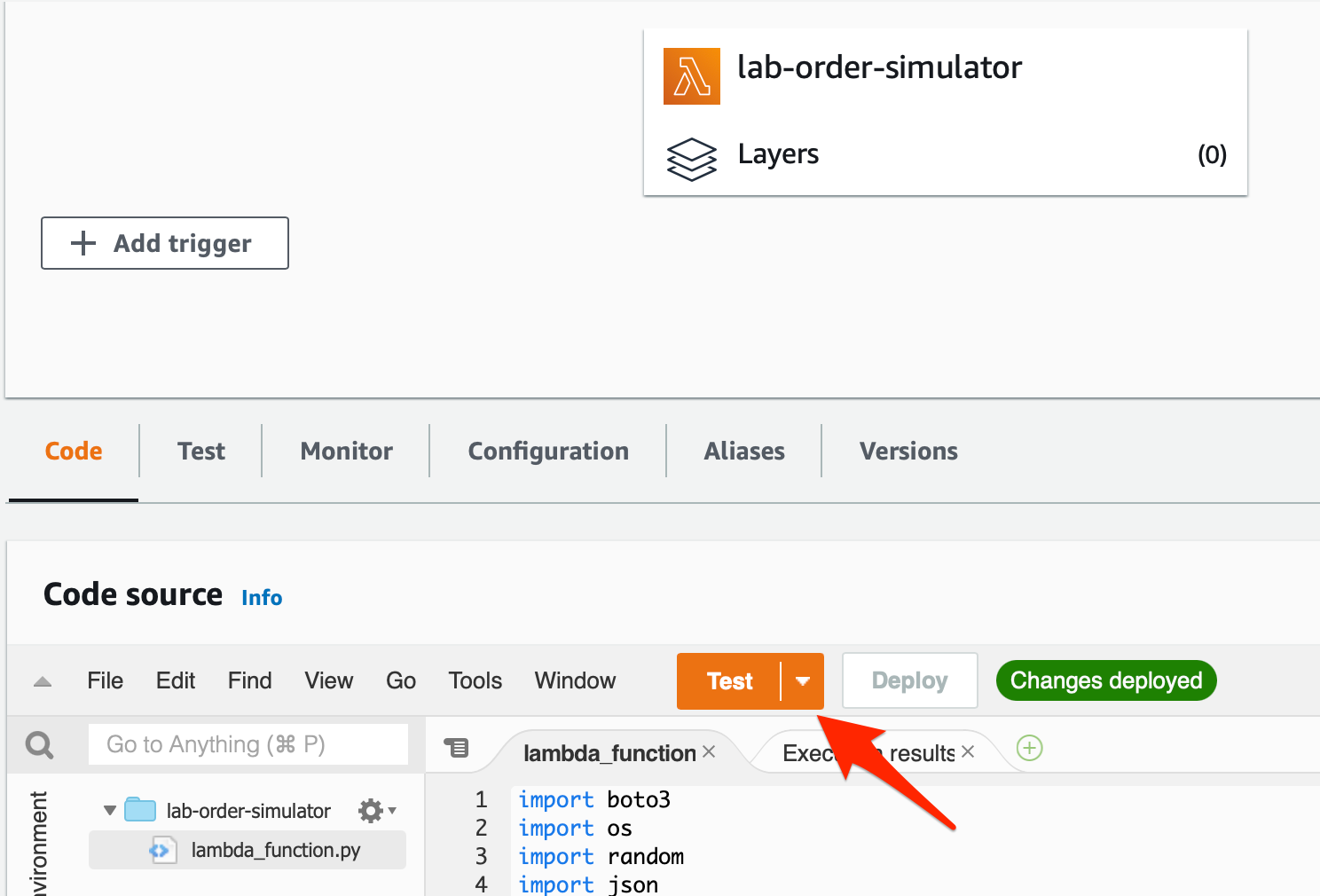

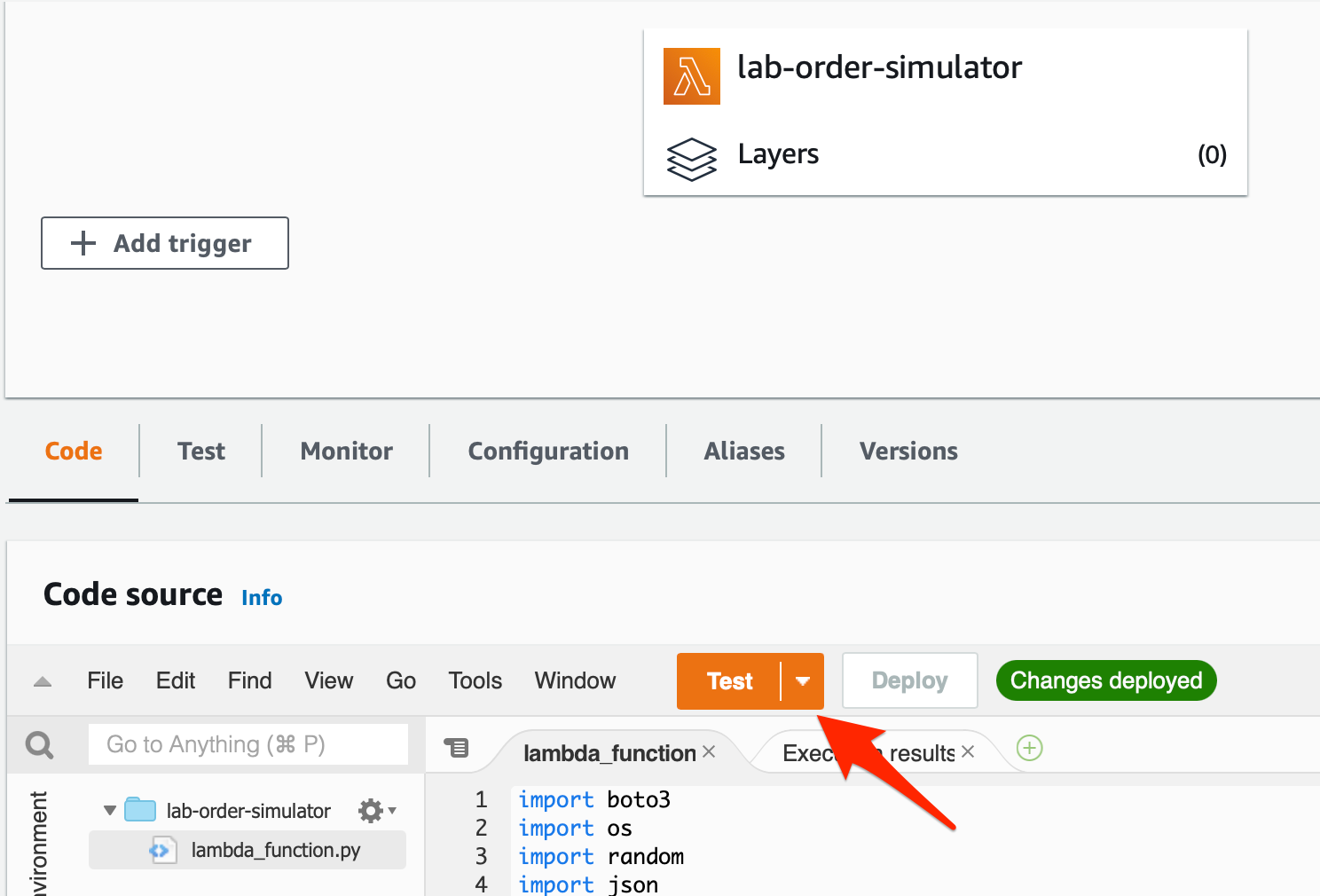

1. Make sure that you are in the N. Virginia AWS Region on the AWS Management Console. Enter Lambda in the search bar and select Lambda service.

Search for and select the lab-order-simulator Lambda function.

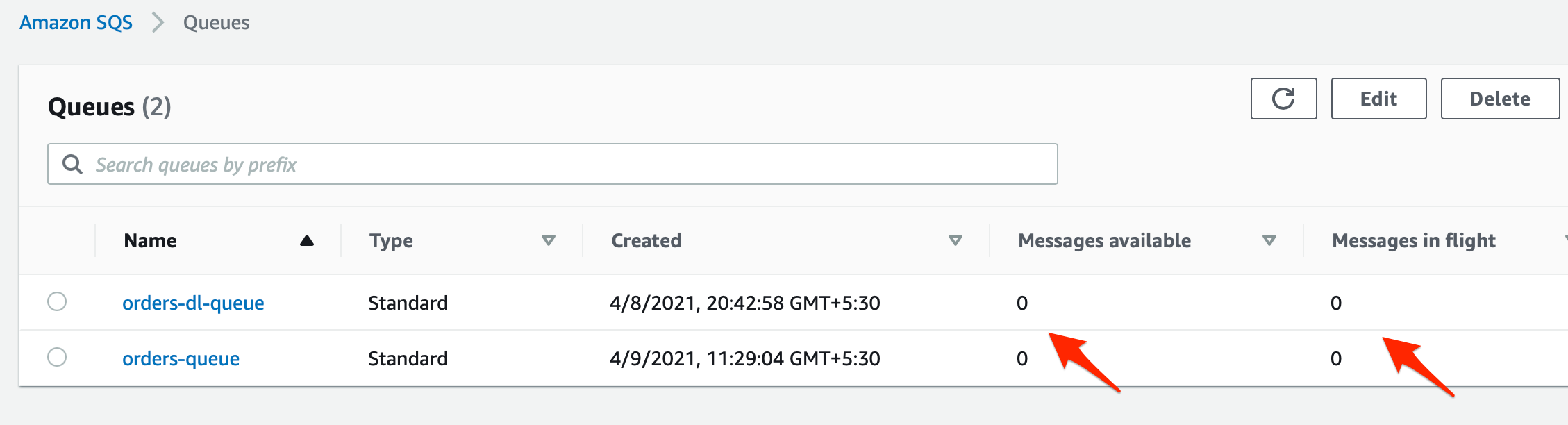

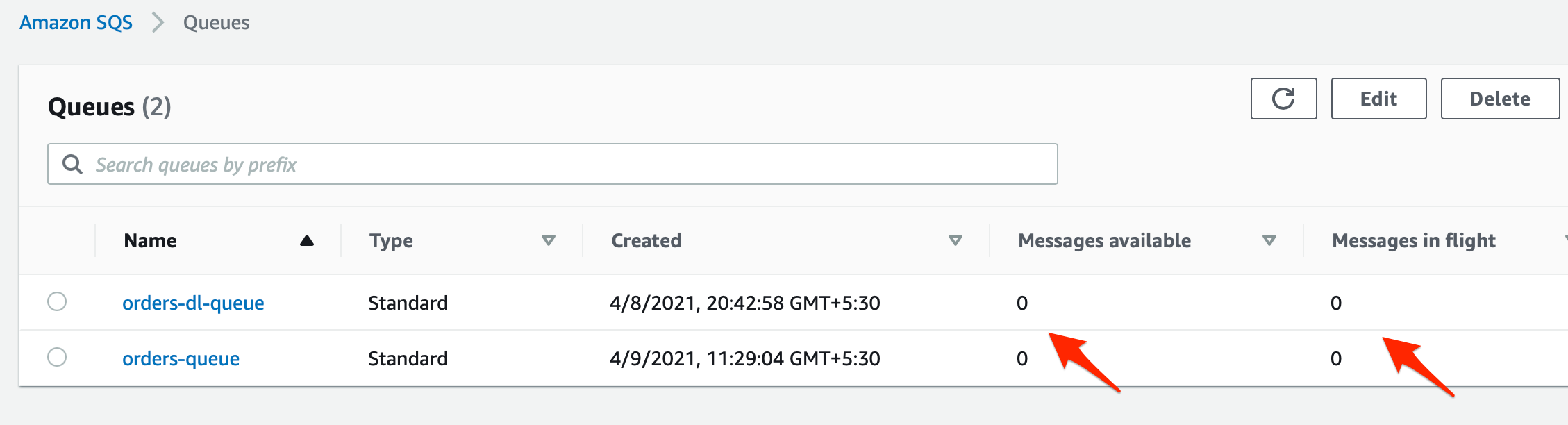

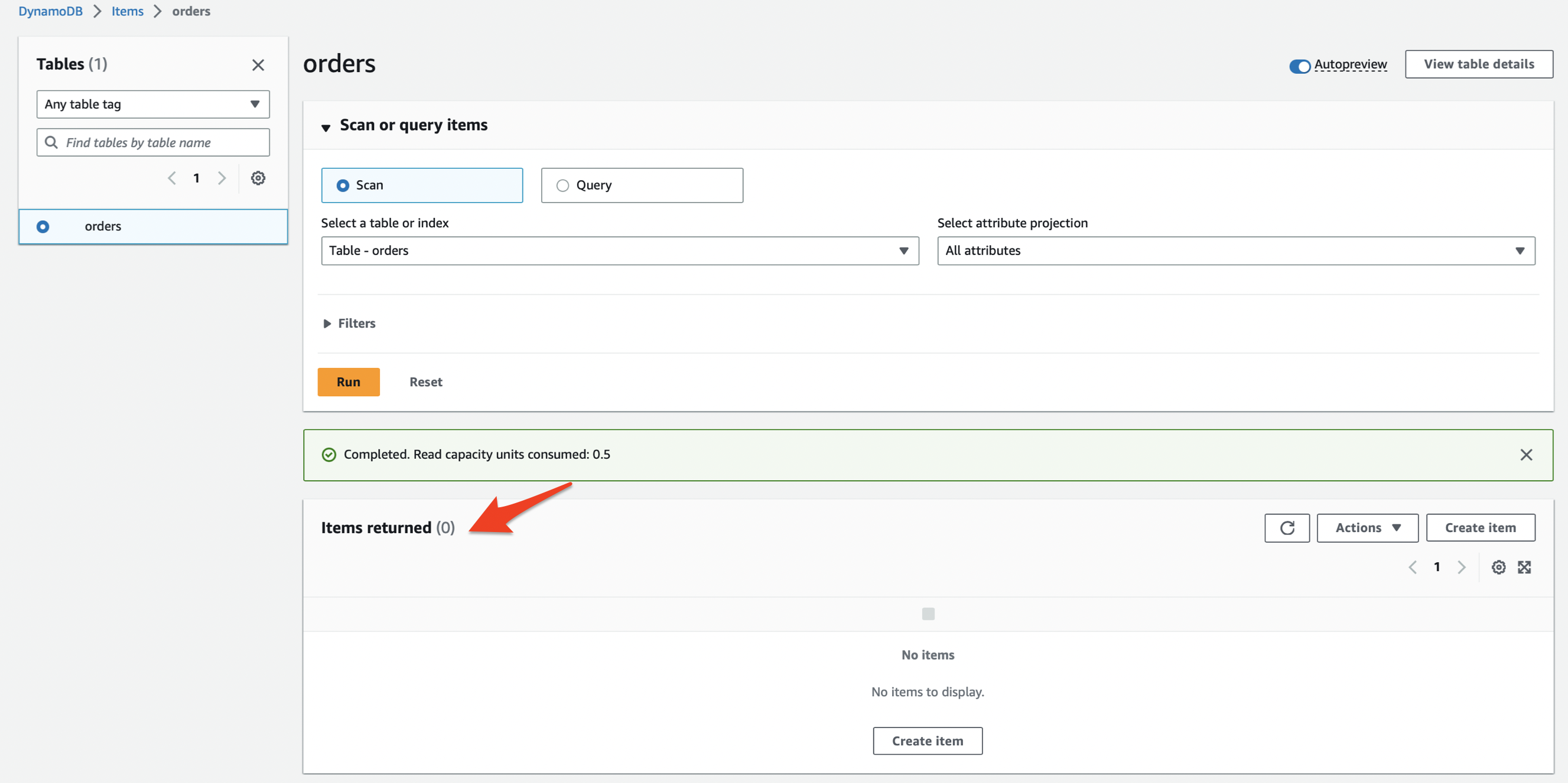

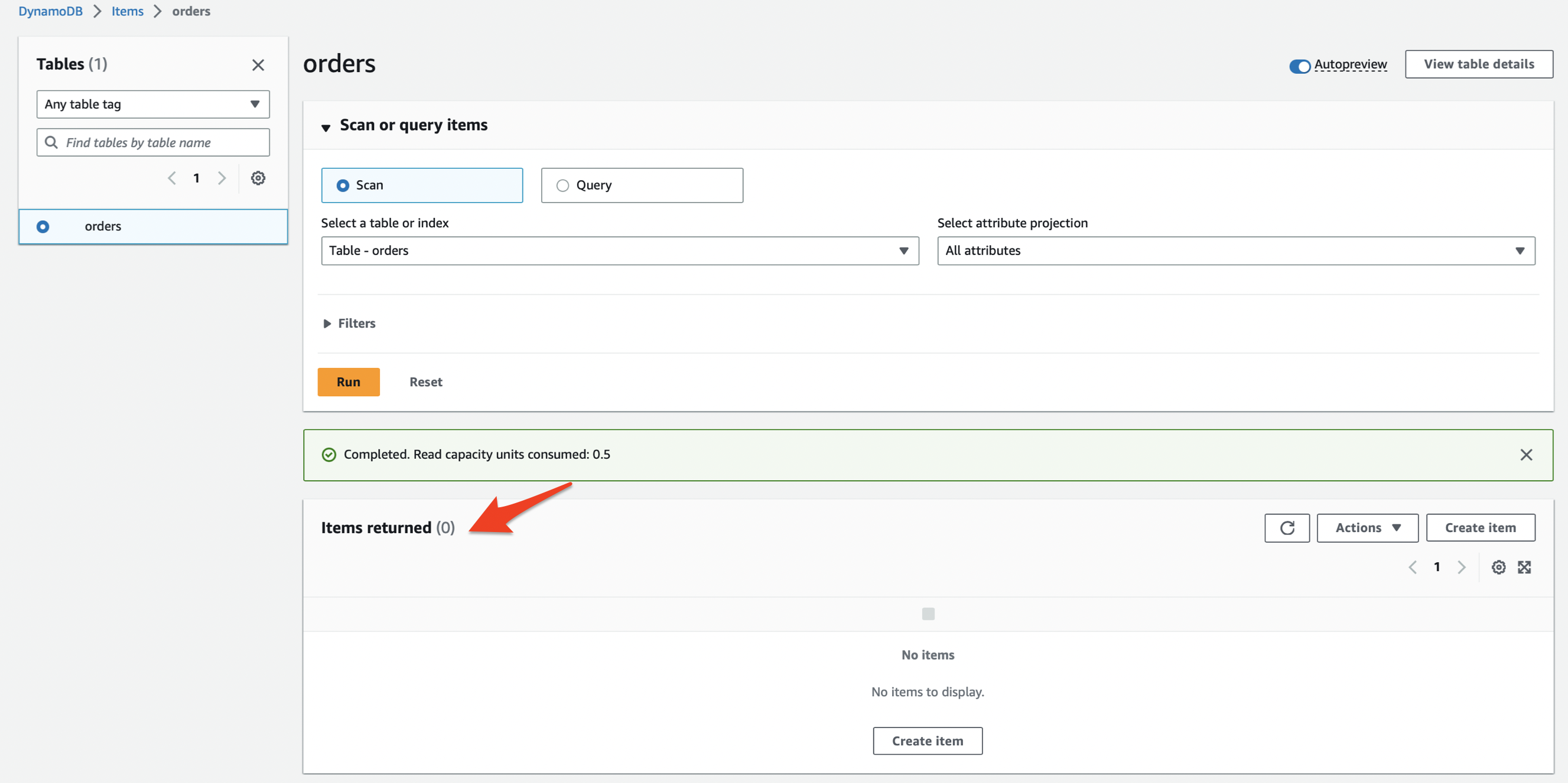

2. Before creating the orders, let’s make sure that there are no messages in the queues and that the DynamoDB orders table has no records.
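The same check on the queues can be done programmatically. The sketch below assumes a boto3 SQS client is passed in as `sqs`; the helper names are hypothetical. The counts SQS returns are approximate, so a brief delay before they settle is normal.

```python
COUNT_ATTRIBUTES = [
    "ApproximateNumberOfMessages",  # messages available for retrieval
    "ApproximateNumberOfMessagesNotVisible",  # messages in flight
]


def queue_depth(sqs, queue_url):
    """Return the approximate available and in-flight message counts."""
    attrs = sqs.get_queue_attributes(
        QueueUrl=queue_url, AttributeNames=COUNT_ATTRIBUTES
    )["Attributes"]
    return {
        "available": int(attrs["ApproximateNumberOfMessages"]),
        "in_flight": int(attrs["ApproximateNumberOfMessagesNotVisible"]),
    }


def is_empty(sqs, queue_url):
    """True when the queue reports no available and no in-flight messages."""
    depth = queue_depth(sqs, queue_url)
    return depth["available"] == 0 and depth["in_flight"] == 0
```

The same `in_flight` figure is what the console shows as messages in flight after the simulator runs.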

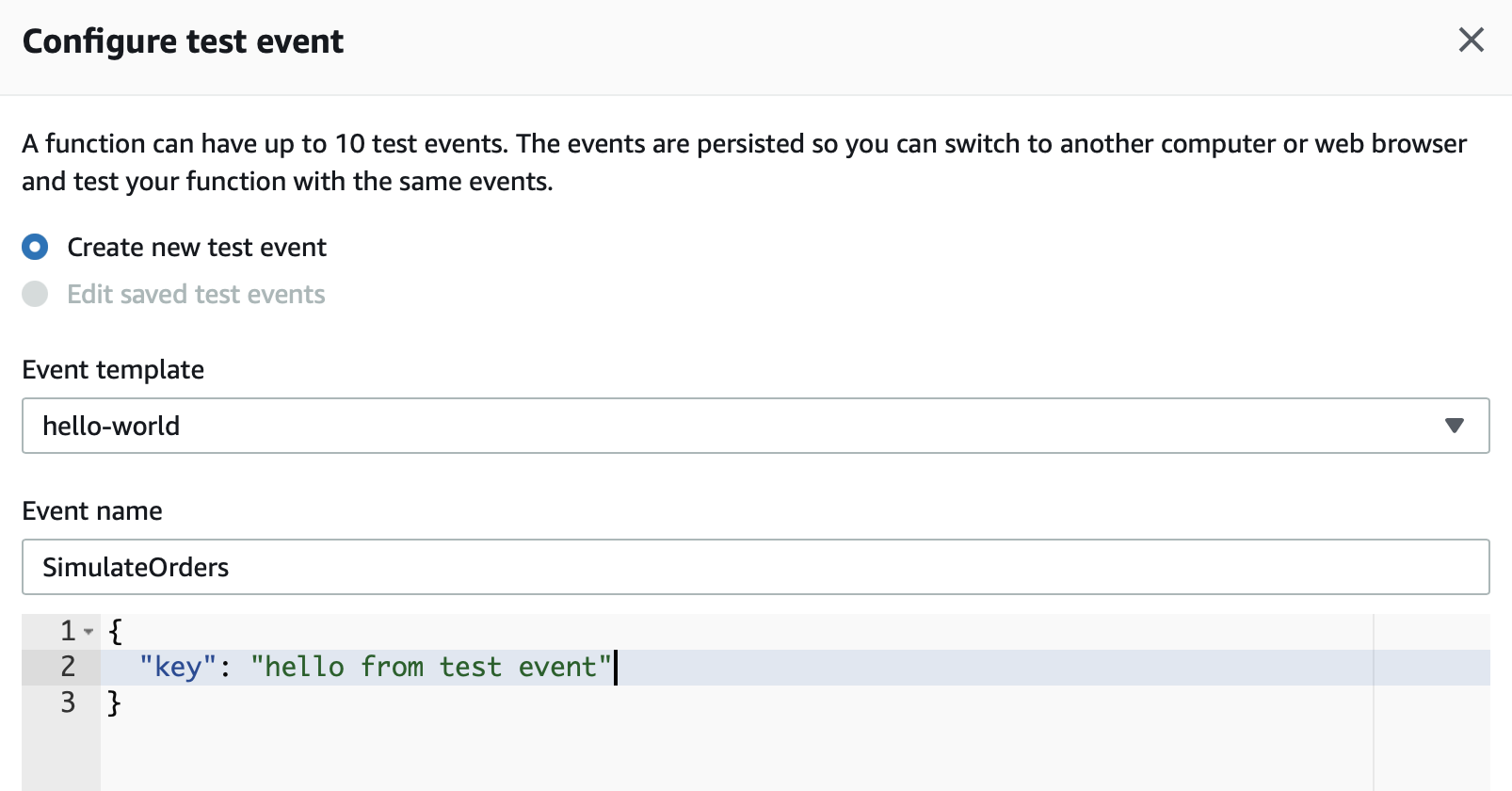

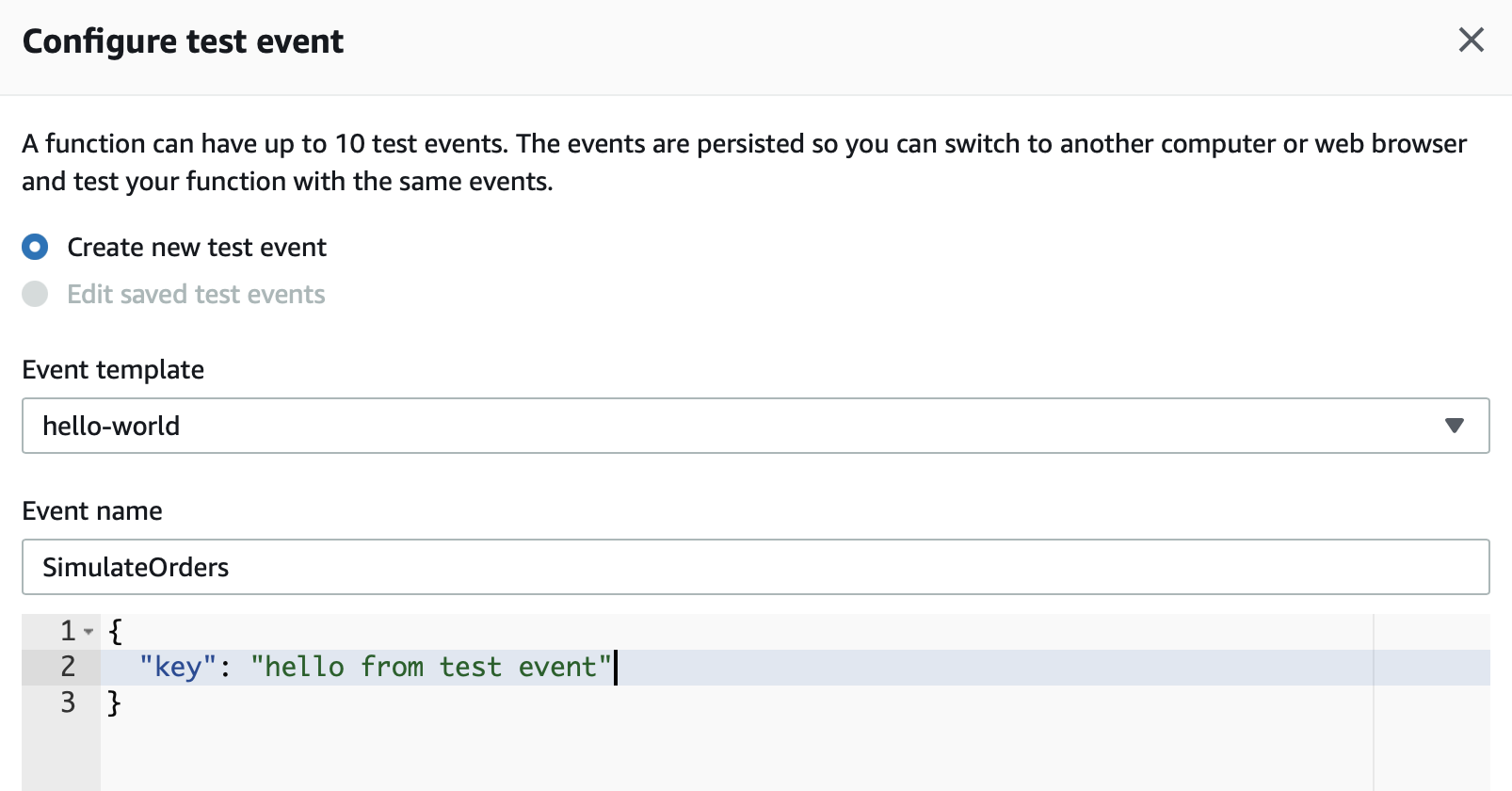

3. To invoke the lab-order-simulator lambda, you need to configure a test event. Click on the dropdown menu arrow next to Test and click on Configure test event.

Select Create new test event and enter SimulateOrders as the event name. Provide a key and value as shown below. Click on Create to complete the set-up.

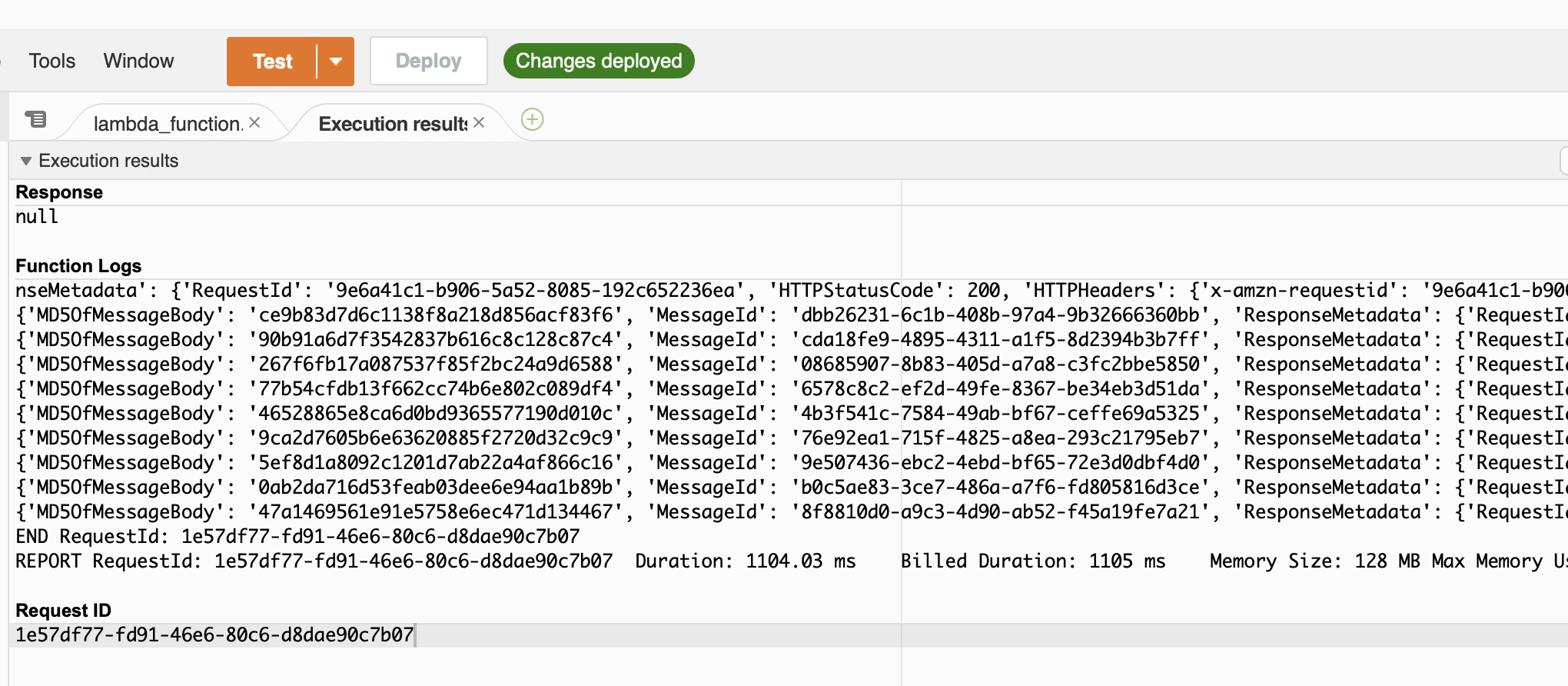

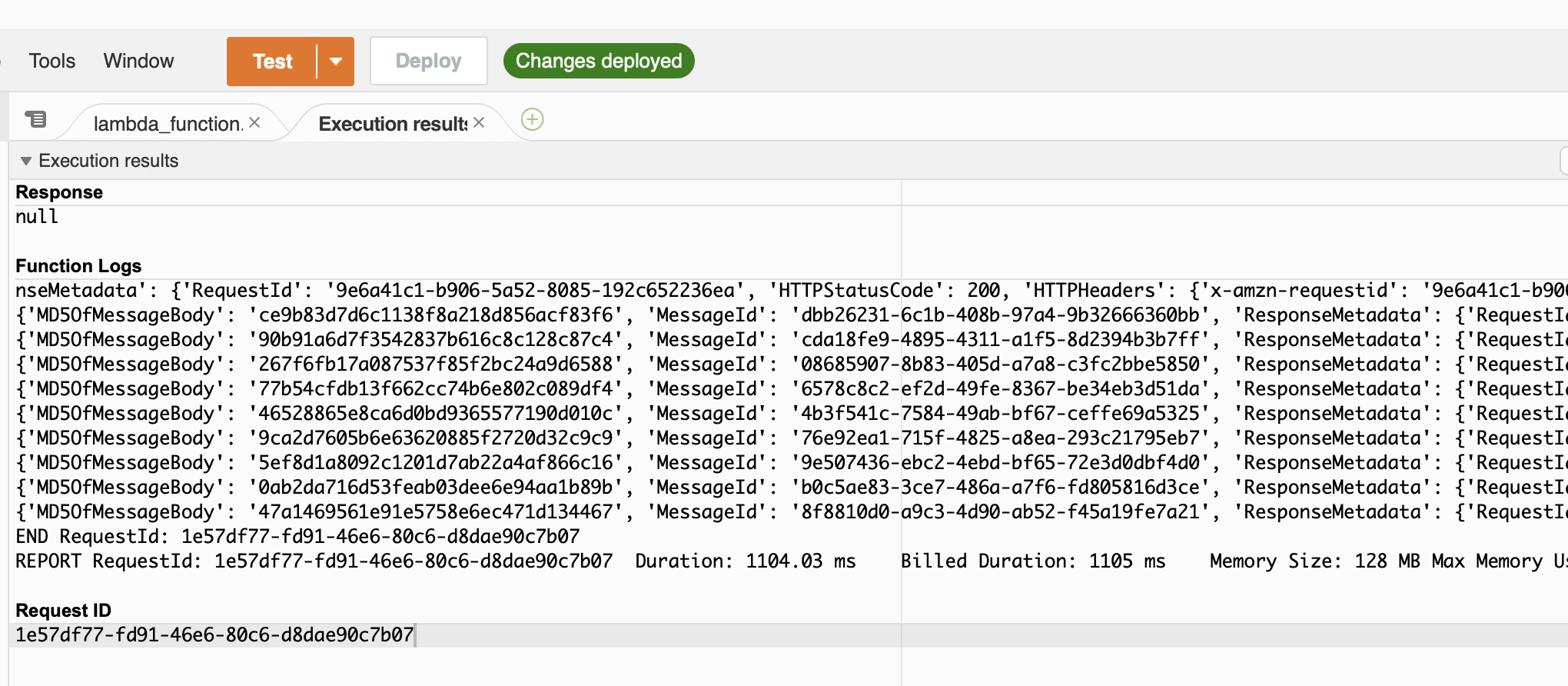

4. Now that the test event is ready, click on Test. You should notice the following:

The execution results show that the Lambda ran successfully.

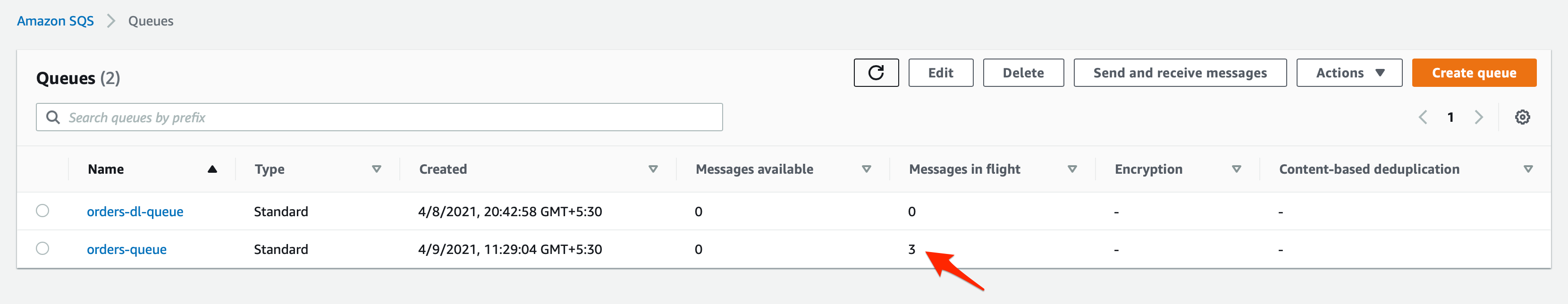

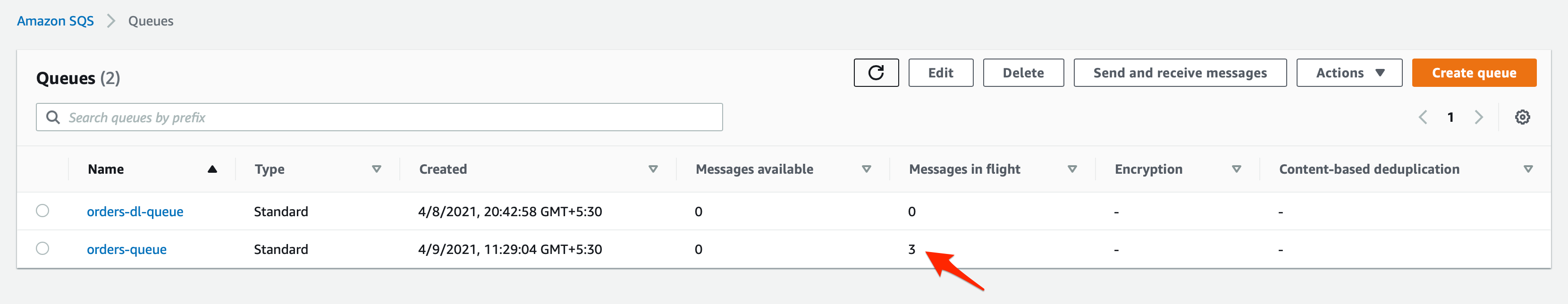

- Go to SQS and check the summary stats for the queues. For this run, the orders-queue shows 3 messages in flight. These are the 3 messages that have err as the price_per_item value and were therefore rejected by the order processor Lambda. Please note that this number may be higher or lower for you, because the err values are generated randomly. We will look at the actual messages in a later step.

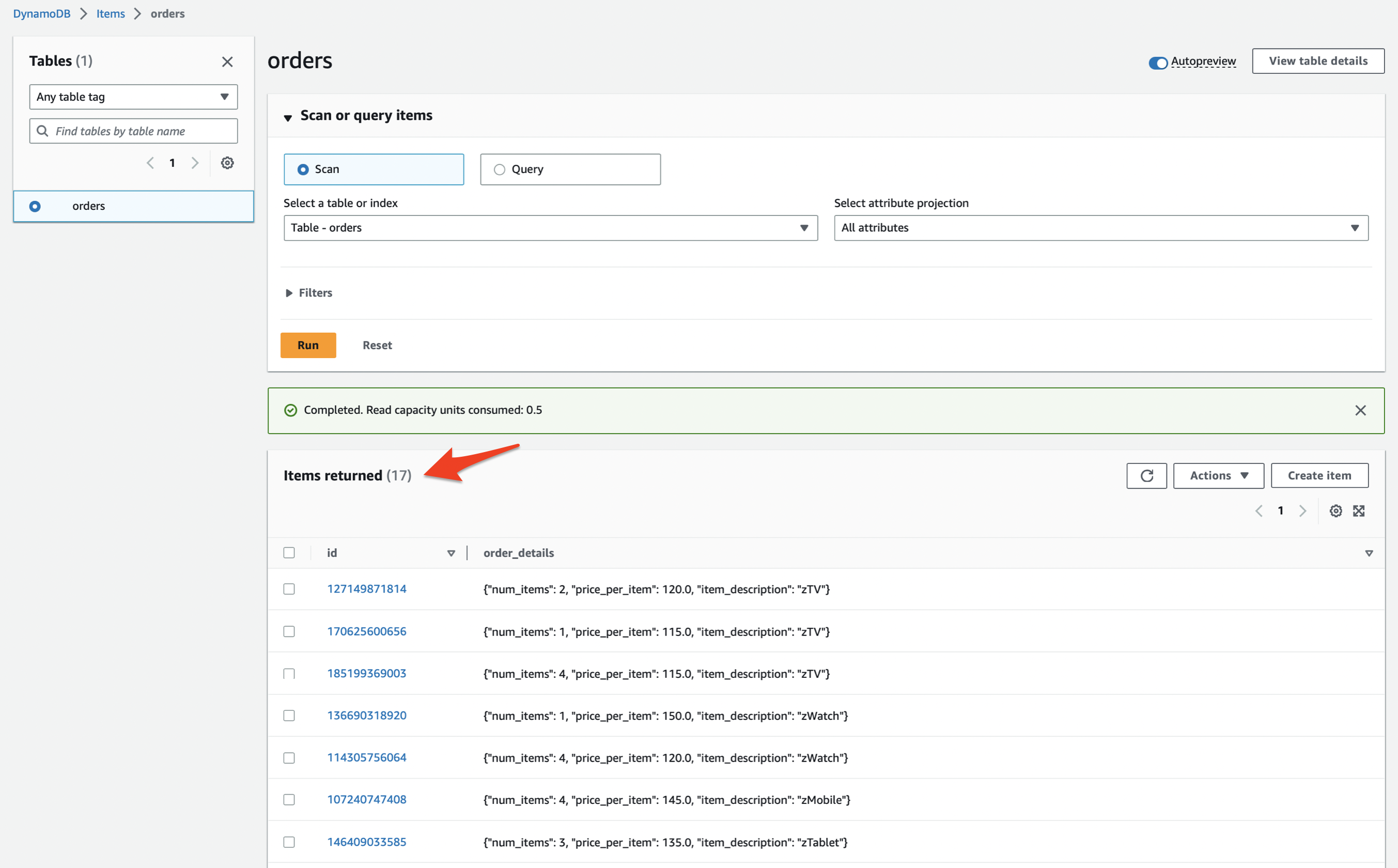

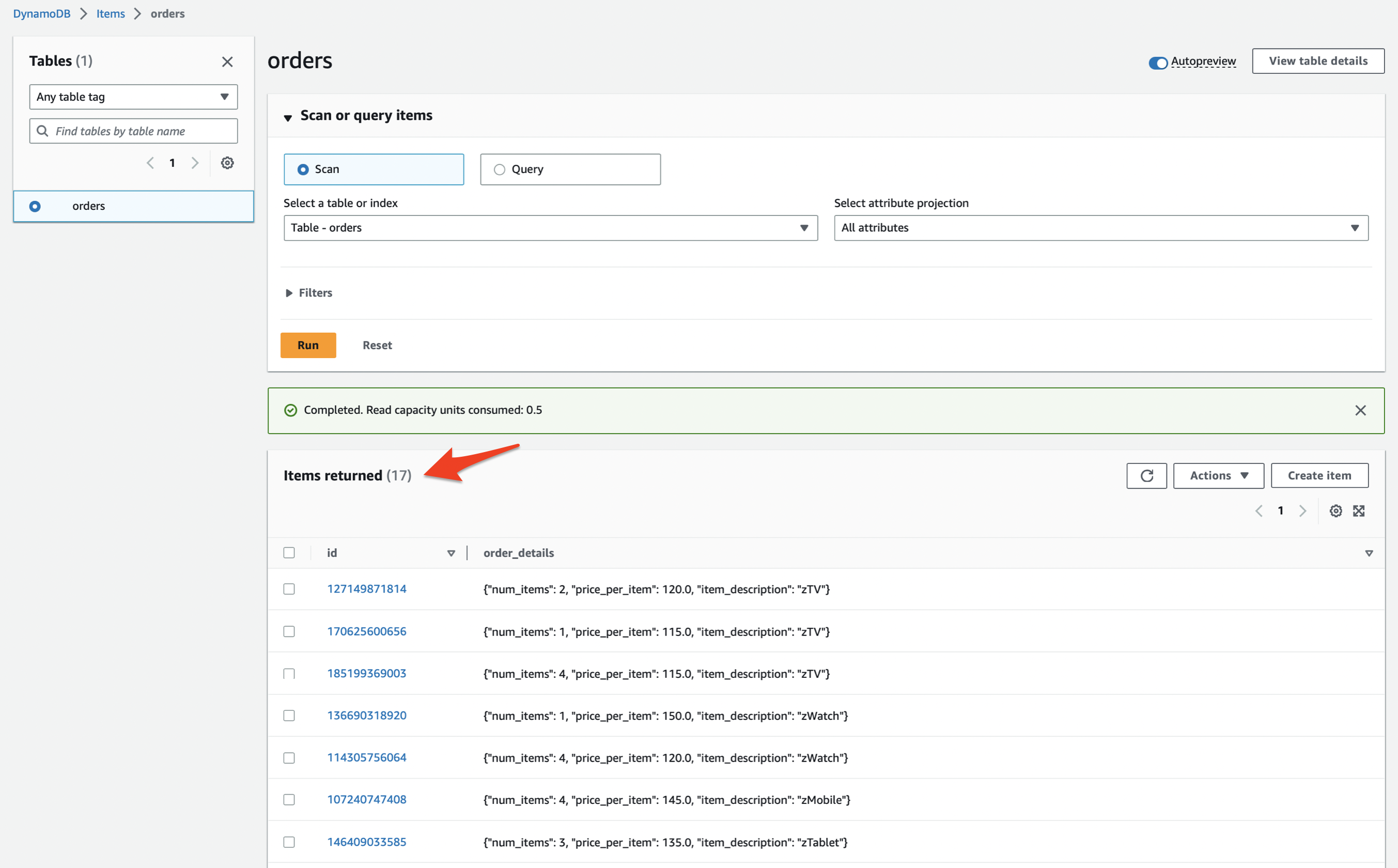

- Go to DynamoDB and check the record count in the orders table. For this run, we see that the orders table shows that there are 17 records. This makes sense, as 3 out of the 20 records created were erroneous. As mentioned earlier, these numbers may vary a little for you.

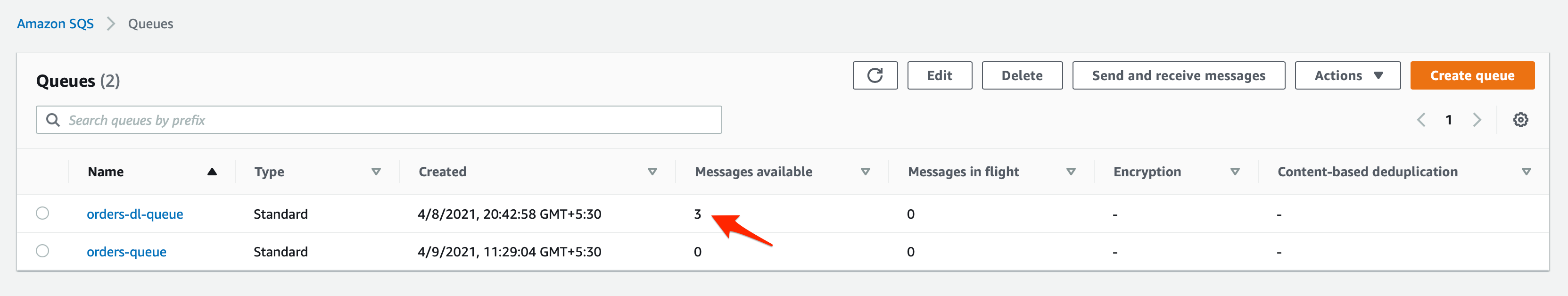

- Let’s go back to SQS, and within a couple of minutes, you should see that the 3 erroneous in-flight messages for the orders-queue are now available in the dead-letter-queue orders-dl-queue.

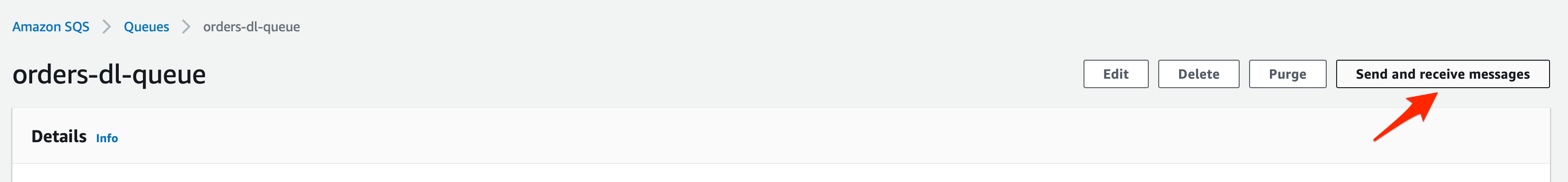

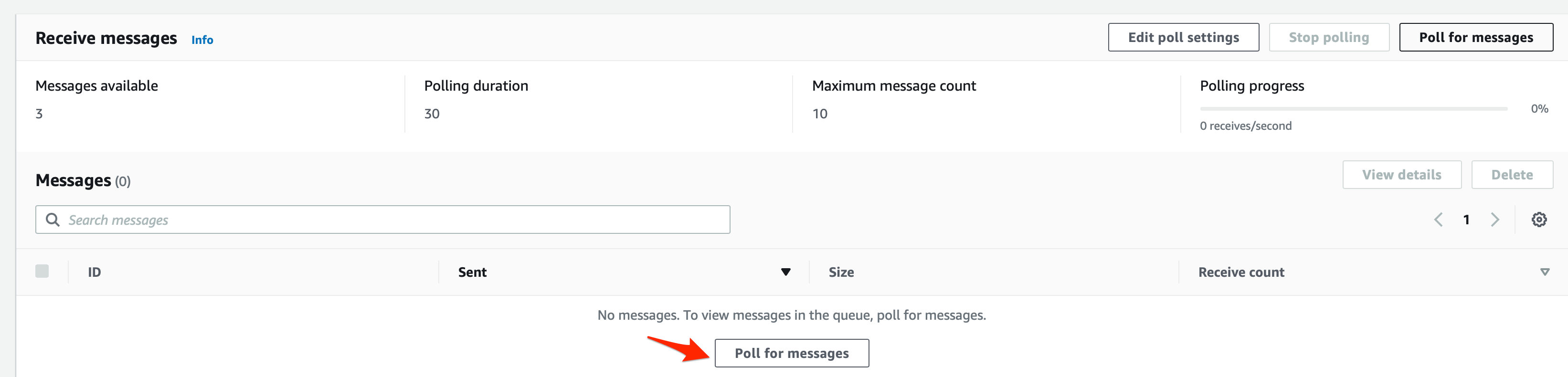

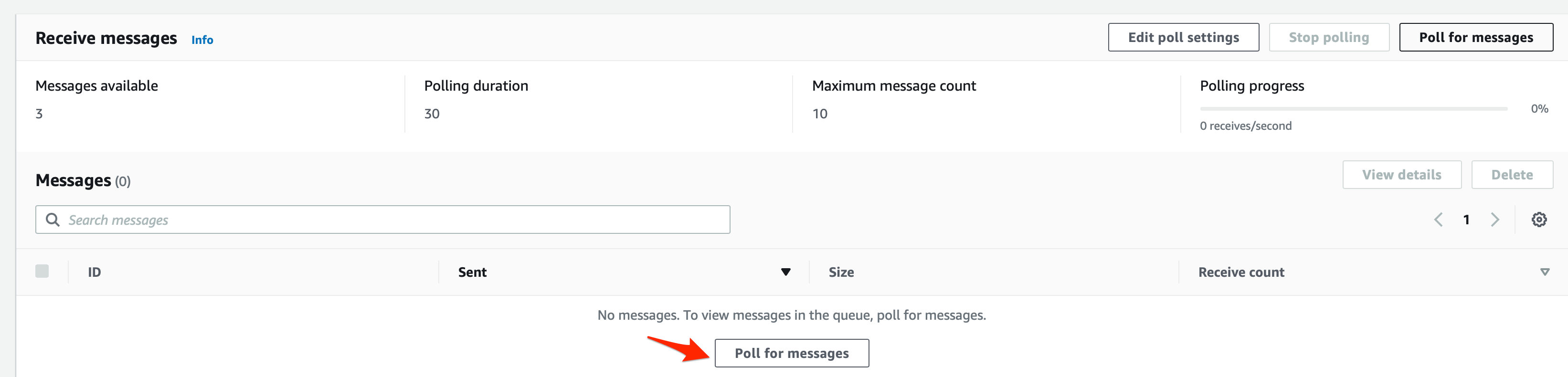

- Click on the orders-dl-queue to inspect the messages in this dead-letter-queue. Then click on the Send and receive messages button.

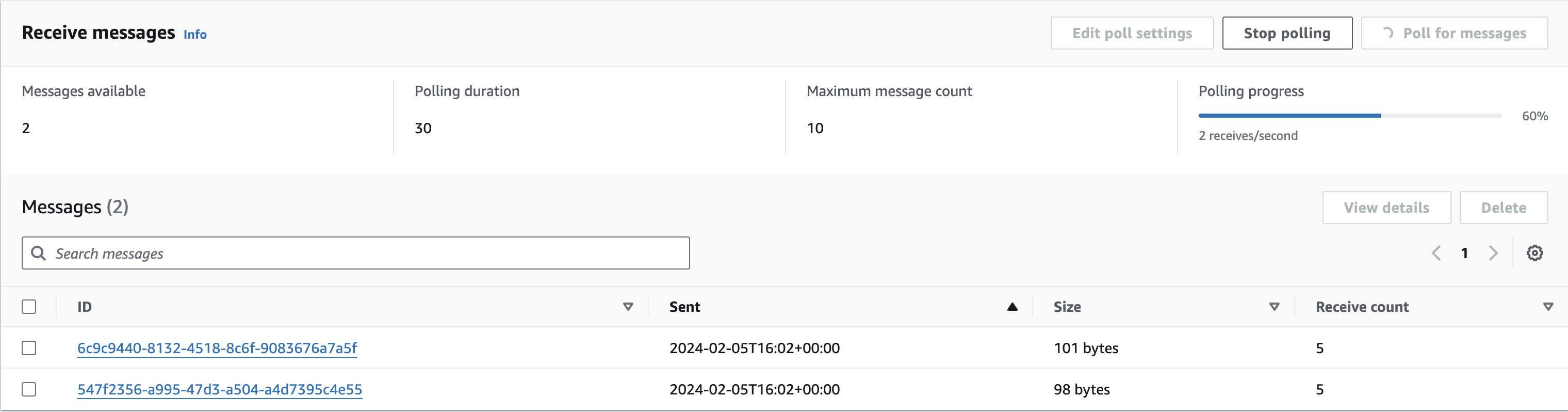

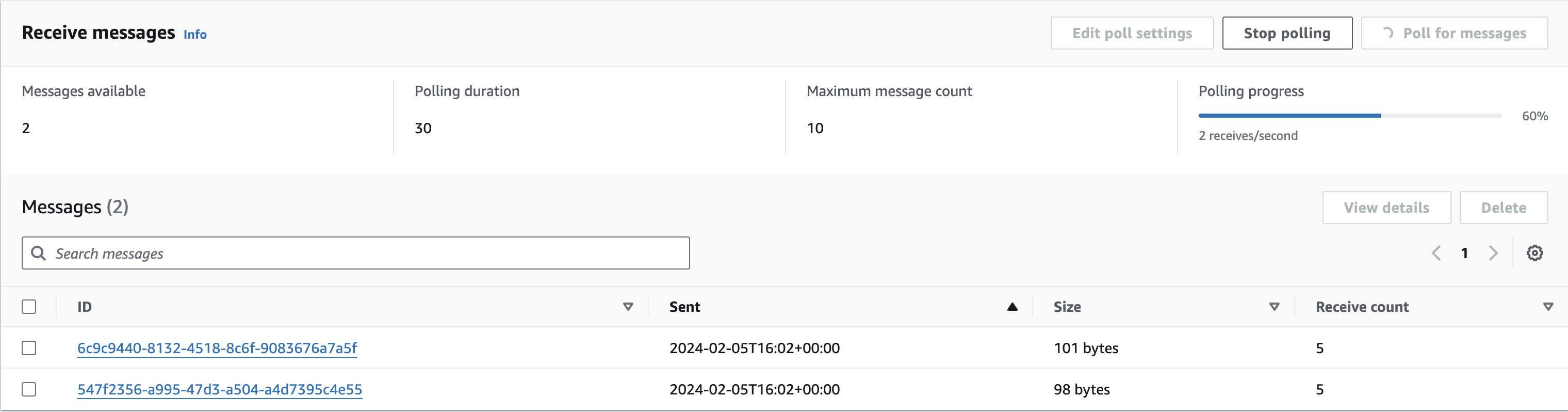

Click on Poll for messages to view the messages

You should see the list of messages available in the dead-letter-queue:

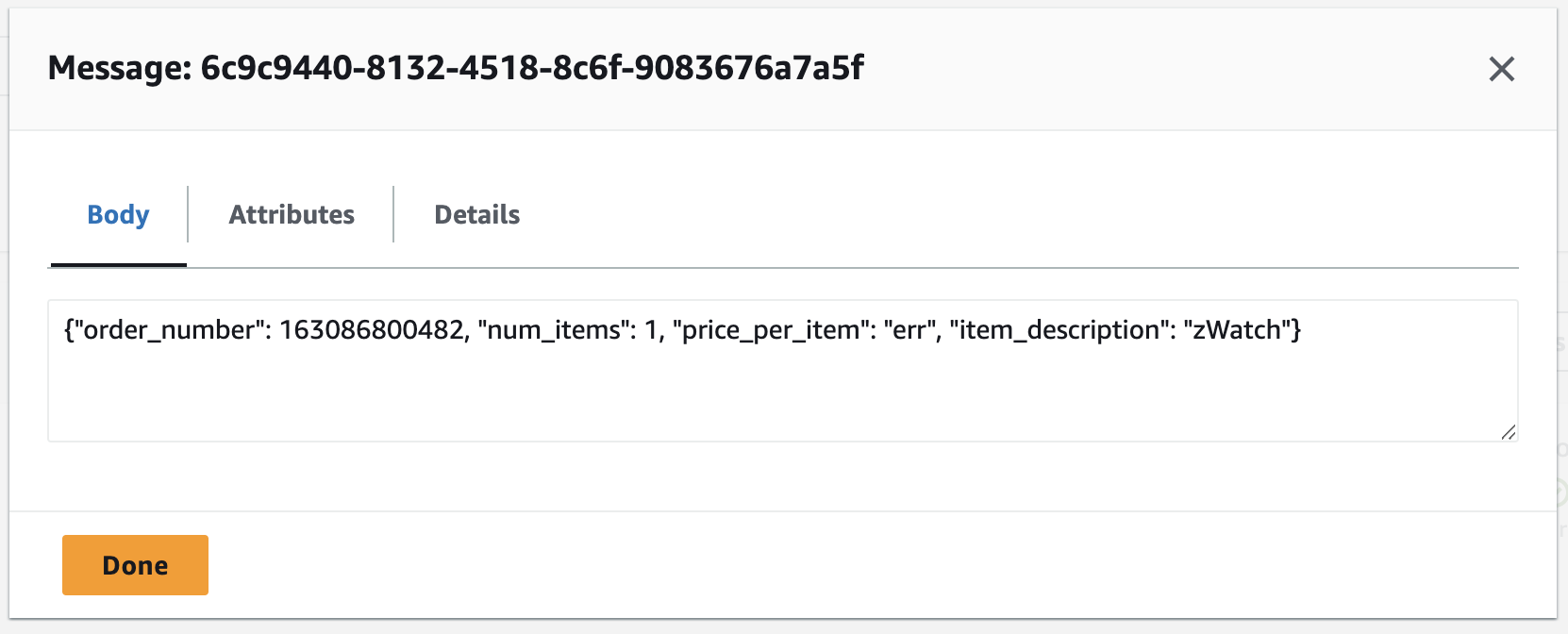

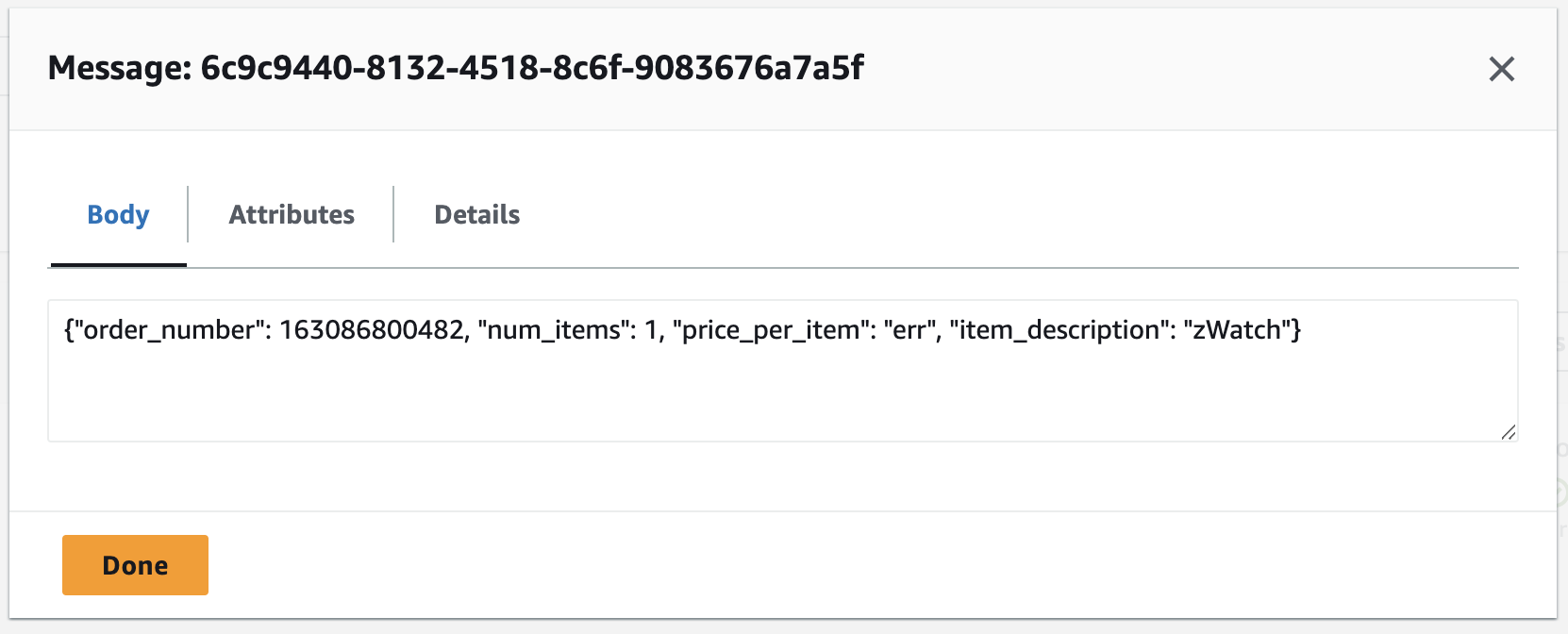

Click on each of the messages to confirm that it has the value err for the price_per_item key.
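The inspection above can also be scripted. This is a minimal sketch, assuming a boto3 SQS client is passed in and that every message body in the dead-letter queue is valid JSON; the helper names are mine.

```python
import json


def peek_dead_letters(sqs, dlq_url, max_messages=10):
    """Receive up to 10 messages from the DLQ and return their parsed bodies.

    The messages are not deleted; they become visible again once the
    queue's visibility timeout expires.
    """
    response = sqs.receive_message(
        QueueUrl=dlq_url,
        MaxNumberOfMessages=max_messages,
        WaitTimeSeconds=5,  # long polling, to avoid an empty first response
    )
    return [json.loads(message["Body"]) for message in response.get("Messages", [])]


def all_have_err_price(orders):
    """True only if there is at least one order and every price is "err"."""
    return bool(orders) and all(o.get("price_per_item") == "err" for o in orders)
```

A single `receive_message` call returns at most 10 messages, so a larger backlog would need repeated calls.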

Clean up the resources created in Task 1 through Task 5

1. Let’s clean up the resources created for this lab:

Delete the two lambda functions – lab-order-simulator and lab-order-processor

Delete the two SQS queues – orders-queue and orders-dl-queue

Delete the DynamoDB orders table
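As a rough sketch, the three cleanup steps above could be scripted as follows. It assumes boto3 clients for Lambda, SQS and DynamoDB are passed in by the caller; the function name `clean_up` is hypothetical, and the resource names are the ones used throughout this lab.

```python
def clean_up(lambda_client, sqs, dynamodb):
    """Delete the lab's Lambda functions, SQS queues and DynamoDB table."""
    for function_name in ("lab-order-simulator", "lab-order-processor"):
        lambda_client.delete_function(FunctionName=function_name)

    for queue_name in ("orders-queue", "orders-dl-queue"):
        # delete_queue needs the queue URL, so look it up by name first
        queue_url = sqs.get_queue_url(QueueName=queue_name)["QueueUrl"]
        sqs.delete_queue(QueueUrl=queue_url)

    dynamodb.delete_table(TableName="orders")
```

This mirrors only the resources listed above; anything else created along the way would still need to be removed separately.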

2. Congratulations on completing this lab!

Conclusion

To conclude, this hands-on lab module gave me good practice in using AWS services to build a decoupled backend architecture. Through a simulated scenario, I gained experience with real-world challenges such as maintaining data integrity as order transactions flow through various components.

By refactoring into decoupled components interacting via asynchronous queues and redundant data stores, I learned patterns applicable in production systems with similar transaction processing needs.

The knowledge around coordinating frontends with cloud-native backends via asynchronous flows will also be directly relevant to larger platforms. I look forward to applying these learnings to architect more complex solutions managing real-time data using Lambda, SQS and DynamoDB.