This function does the following:

- Creates an EC2 resource object for the specified region.

- Builds a list of filters based on the provided tags.

- Retrieves the volumes in the specified region, filtered by tags (if provided).

- Iterates over each volume and creates a snapshot.

- Prints the snapshot ID and volume ID upon successful creation.

- Handles any exceptions and prints an error message if a snapshot creation fails.
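
The filter-building step from the list above can be pulled out into a small helper, which makes it easy to check without calling AWS. This is a minimal sketch: `build_tag_filters` is a hypothetical name that does not appear in the original script, and accepting a list of values per tag is an added assumption.

```python
def build_tag_filters(tags=None):
    """Convert a {tag_key: value} dict into EC2-style filter dicts.

    A value may be a single string or a list of strings (an assumed
    extension, not in the original script).
    """
    filters = []
    for key, value in (tags or {}).items():
        # Wrap a single string so 'Values' is always a list
        values = [value] if isinstance(value, str) else list(value)
        filters.append({'Name': f'tag:{key}', 'Values': values})
    return filters


print(build_tag_filters({'env': 'prod'}))
# [{'Name': 'tag:env', 'Values': ['prod']}]
```

When several filters are passed, EC2 returns only volumes that match all of them, while several values inside one filter match any of those values.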

Step 3: Configure the Script

Now, let’s configure the script with the desired AWS region and tags:

    region = 'us-east-1'
    tags = {'env': 'prod'}

Replace 'us-east-1' with the appropriate AWS region where your EC2 instances are located. Modify the tags dictionary to match the tags of the volumes you want to create snapshots for.
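
If you would rather not edit the script for each environment, the same two settings could be read from environment variables. The sketch below is one possible approach, not part of the original script: `load_config`, `SNAPSHOT_REGION`, and `SNAPSHOT_TAGS` are hypothetical names, and the defaults simply mirror the values used in this article.

```python
import json
import os


def load_config(environ=os.environ):
    """Read the region and tag filter from environment-style settings.

    SNAPSHOT_REGION and SNAPSHOT_TAGS are hypothetical variable names.
    """
    region = environ.get('SNAPSHOT_REGION', 'us-east-1')
    # SNAPSHOT_TAGS is expected to hold a JSON object, e.g. {"env": "prod"}
    tags = json.loads(environ.get('SNAPSHOT_TAGS', '{"env": "prod"}'))
    if not isinstance(tags, dict):
        raise ValueError('SNAPSHOT_TAGS must be a JSON object of tag keys and values')
    return region, tags


region, tags = load_config()
```

Passing a plain dictionary instead of `os.environ` makes the helper easy to try out, for example `load_config({'SNAPSHOT_REGION': 'eu-west-1'})`.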

Step 4: Schedule the Snapshot Creation

To automate the snapshot creation process, we’ll use the schedule library to define the desired frequency:

    schedule.every().day.at("00:00").do(create_volume_snapshots, region=region, tags=tags)

This line schedules the create_volume_snapshots function to run every day at midnight (00:00), in the local time of the machine running the script. Adjust the schedule according to your requirements.

Step 5: Run the Script

Finally, we’ll add a loop to keep the script running and execute the scheduled tasks:

    while True:
        schedule.run_pending()
        time.sleep(1)

The script will continuously check for pending scheduled tasks and execute them when the scheduled time arrives. The time.sleep(1) statement adds a 1-second delay between each check to avoid excessive CPU usage.
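
The polling loop above runs forever, which makes it hard to stop cleanly or to try out. A minimal sketch of the same pattern with a stop condition is shown below. `run_loop` and its parameters are hypothetical names introduced for illustration; in the real script you would pass `schedule.run_pending` as the first argument.

```python
import time


def run_loop(run_pending, should_stop, poll_seconds=1.0, sleep=time.sleep):
    """Call run_pending repeatedly until should_stop() returns True.

    Returns the number of polls performed. The sleep function is a
    parameter so the loop can be exercised without real delays.
    """
    polls = 0
    while not should_stop():
        run_pending()
        polls += 1
        sleep(poll_seconds)
    return polls


# Demo with stand-ins: stop after three polls and skip the real sleep.
remaining = [3]


def tick():
    remaining[0] -= 1


polls = run_loop(tick, lambda: remaining[0] == 0, sleep=lambda seconds: None)
print(polls)  # 3
```

With the schedule library, `run_loop(schedule.run_pending, lambda: False)` would behave like the original `while True` loop.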

For those interested in implementing or adapting this script, here’s the complete code:

    import boto3
    import schedule
    import time

    def create_volume_snapshots(region, tags=None):
        """
        Creates snapshots for EC2 volumes in the specified region, filtered by tags (optional).

        Args:
            region (str): The AWS region where the volumes are located.
            tags (dict, optional): A dictionary of tags to filter the volumes. Defaults to None.
        """
        # Create an EC2 resource object for the specified region
        ec2 = boto3.resource('ec2', region_name=region)

        # Create a list to store the filters
        filters = []

        # If tags are provided, add them to the filters list
        if tags:
            for key, value in tags.items():
                filters.append({'Name': f'tag:{key}', 'Values': [value]})

        # Get all volumes in the specified region, filtered by tags (if provided)
        volumes = ec2.volumes.filter(Filters=filters)

        # Iterate over each volume
        for volume in volumes:
            try:
                # Create a snapshot of the volume
                snapshot = volume.create_snapshot()
                print(f"Created snapshot: {snapshot.id} for volume {volume.id}")
            except Exception as e:
                # If an exception occurs, print an error message with the volume ID and exception details
                print(f"Error creating snapshot for volume {volume.id}: {str(e)}")

    # Specify the AWS region where your EC2 instance is located
    region = 'us-east-1'

    # Optional: Specify tags to filter volumes (e.g., {'Environment': 'Production'})
    tags = {'env': 'prod'}

    # Schedule the script to run once a day at midnight
    schedule.every().day.at("00:00").do(create_volume_snapshots, region=region, tags=tags)

    # Run the scheduled tasks indefinitely
    while True:
        # Check if any scheduled tasks are pending and run them
        schedule.run_pending()
        # Pause the script for 1 second before checking for pending tasks again
        time.sleep(1)
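
One possible adaptation of the complete script is to give each snapshot a description and tags so that automated snapshots are easy to find later. The sketch below only builds the arguments. `Description` and `TagSpecifications` are parameters of boto3's `create_snapshot`, while the helper name `snapshot_kwargs`, the `CreatedBy` tag, and the description wording are illustrative assumptions.

```python
from datetime import datetime, timezone


def snapshot_kwargs(volume_id, tags=None, now=None):
    """Build keyword arguments for volume.create_snapshot().

    The 'CreatedBy' tag and the description text are illustrative
    choices, not part of the original script.
    """
    now = now or datetime.now(timezone.utc)
    snapshot_tags = [{'Key': 'CreatedBy', 'Value': 'snapshot-script'}]
    # Copy the filter tags onto the snapshot so it can be found the same way
    for key, value in (tags or {}).items():
        snapshot_tags.append({'Key': key, 'Value': value})
    return {
        'Description': f'Automated snapshot of {volume_id} at {now:%Y-%m-%d %H:%M} UTC',
        'TagSpecifications': [{'ResourceType': 'snapshot', 'Tags': snapshot_tags}],
    }


# Inside the loop of create_volume_snapshots, the call would become:
# snapshot = volume.create_snapshot(**snapshot_kwargs(volume.id, tags))
```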

Conclusion:

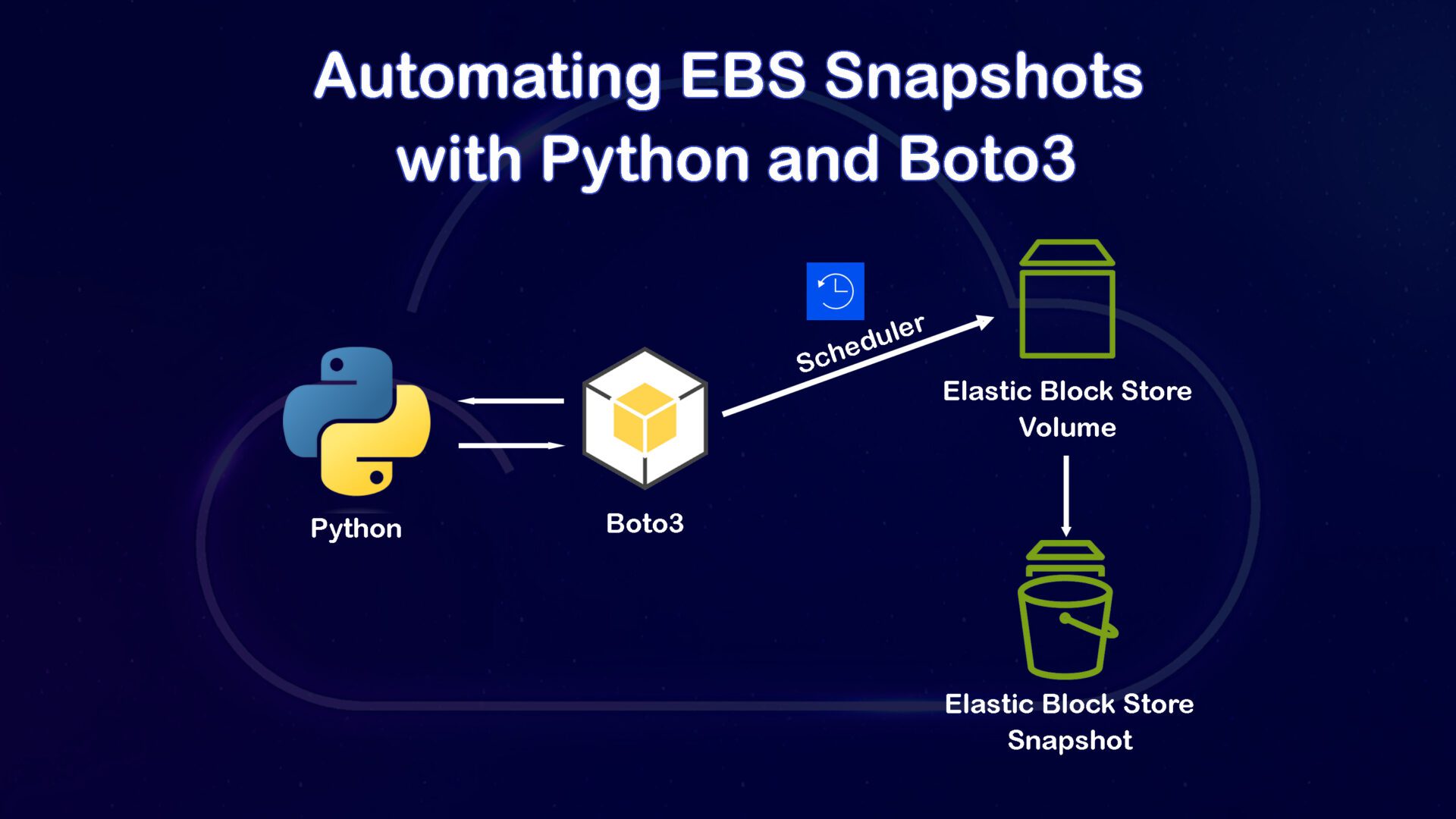

With this Python script, you can automate the process of creating EBS snapshots based on specific tags and schedule them to run at your desired frequency. By leveraging the power of Boto3 and the schedule library, you can ensure regular and consistent snapshot creation without manual intervention.

Remember to review and adjust the script according to your specific requirements, such as the AWS region, tags, and scheduling frequency. Also monitor the snapshot creation process and set up alerting so that you stay informed about failed or missing snapshots.
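
As a starting point for monitoring, the snapshot loop could return a summary of successes and failures instead of only printing them. The following is a minimal sketch under that assumption: `snapshot_all`, `FakeVolume`, and `FakeSnapshot` are hypothetical names, and the fake classes exist only to exercise the helper without calling AWS.

```python
def snapshot_all(volumes):
    """Try to snapshot every volume and report what happened.

    `volumes` is any iterable of objects with an `id` attribute and a
    `create_snapshot()` method, such as the boto3 Volume resources
    returned by ec2.volumes.filter(...).
    """
    created, failed = {}, {}
    for volume in volumes:
        try:
            created[volume.id] = volume.create_snapshot().id
        except Exception as exc:
            # Record the error and continue so one failure does not stop the rest
            failed[volume.id] = str(exc)
    return created, failed


class FakeSnapshot:
    """Stand-in for a boto3 Snapshot."""
    def __init__(self, snapshot_id):
        self.id = snapshot_id


class FakeVolume:
    """Stand-in for a boto3 Volume that can simulate a failure."""
    def __init__(self, volume_id, fail=False):
        self.id = volume_id
        self.fail = fail

    def create_snapshot(self):
        if self.fail:
            raise RuntimeError('simulated API error')
        return FakeSnapshot(f'snap-for-{self.id}')


created, failed = snapshot_all([FakeVolume('vol-1'), FakeVolume('vol-2', fail=True)])
print(created)  # {'vol-1': 'snap-for-vol-1'}
print(failed)   # {'vol-2': 'simulated API error'}
```

A non-empty `failed` dictionary is a natural trigger for whatever alerting you already use, such as a log entry, an email, or a non-zero exit code.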

By automating EBS snapshots, you can enhance your data protection strategy, minimize the risk of data loss, and save time and effort in managing your AWS infrastructure.